A Beginner’s Guide to Learning PySpark for Big Data Processing

ProjectPro

JUNE 6, 2025

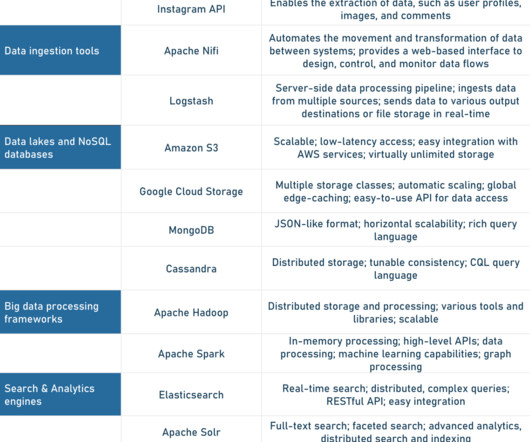

When it comes to data ingestion pipelines, PySpark has several advantages. It can read data from Hadoop HDFS, AWS S3, and various other file systems. Applying the filter operation to a DataFrame early in the pipeline removes unwanted records before later stages run, so those stages have less data to process and the pipeline finishes faster.
