How to Transition from ETL Developer to Data Engineer?

ProjectPro

JUNE 6, 2025

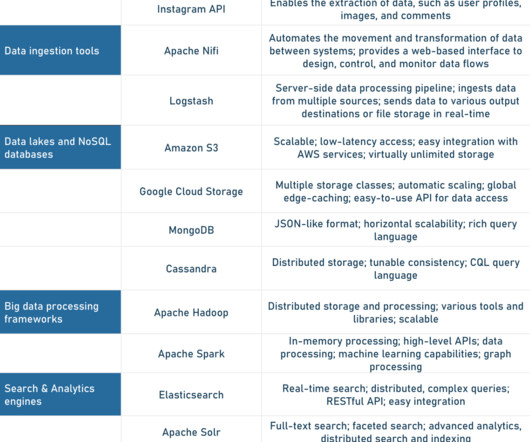

These formats are data models, and they form the foundation an ETL developer uses to decide which tools are needed for data transformation. An ETL developer should be familiar with SQL and NoSQL databases, and with data mapping, in order to understand data storage requirements and design the warehouse layout.
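Data mapping can be illustrated with a small sketch. Here a nested, NoSQL-style order document is mapped field by field onto a flat SQL table. It is a minimal example under stated assumptions, not a prescribed method. The names `source_order`, `ORDER_MAPPING`, `map_record`, and `fact_orders` are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import sqlite3

# Hypothetical source record, shaped like a document from a NoSQL store.
source_order = {
    "orderId": "A-1001",
    "customer": {"id": 42, "name": "Ada"},
    "items": [
        {"sku": "X1", "qty": 2, "price": 9.5},
        {"sku": "Y2", "qty": 1, "price": 20.0},
    ],
}

# Hypothetical source-to-target mapping: warehouse column -> extraction rule.
ORDER_MAPPING = {
    "order_id": lambda doc: doc["orderId"],
    "customer_id": lambda doc: doc["customer"]["id"],
    # Derived column: the nested line items are flattened into a single total.
    "order_total": lambda doc: sum(i["qty"] * i["price"] for i in doc["items"]),
}


def map_record(doc, mapping):
    """Apply every extraction rule in the mapping to one source document."""
    return {column: extract(doc) for column, extract in mapping.items()}


# In-memory SQLite stands in for the target warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_orders ("
    "order_id TEXT PRIMARY KEY, customer_id INTEGER, order_total REAL)"
)

row = map_record(source_order, ORDER_MAPPING)
conn.execute(
    "INSERT INTO fact_orders VALUES (:order_id, :customer_id, :order_total)", row
)
conn.commit()

print(conn.execute("SELECT * FROM fact_orders").fetchall())
```

Keeping the mapping as data, separate from the loading code, makes the storage requirements explicit: each warehouse column documents exactly where its value comes from in the source.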
