Data Validation Testing: Techniques, Examples, & Tools

Monte Carlo

AUGUST 8, 2023

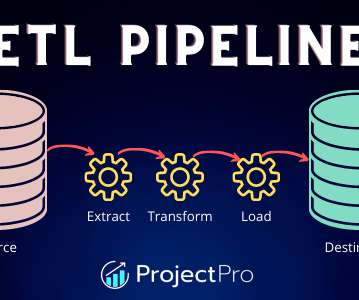

The Definitive Guide to Data Validation Testing

Data validation testing ensures your data maintains its quality and integrity as it is transformed and moved from its source to its target destination. It’s also important to understand the limitations of data validation testing.
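
As a rough illustration of a source-to-target check, here is a minimal sketch that assumes both datasets fit in memory as lists of Python dictionaries. The function name `validate_transfer` and the `id` and `email` columns are hypothetical, chosen only for this example. It is not a method prescribed by the guide or by any particular tool.

```python
from typing import Any

Row = dict[str, Any]


def validate_transfer(
    source: list[Row], target: list[Row], key: str, required: list[str]
) -> list[str]:
    """Return human-readable validation failures (empty if all checks pass)."""
    failures: list[str] = []

    # Completeness: every source row should arrive in the target.
    if len(source) != len(target):
        failures.append(
            f"row count mismatch: source={len(source)} target={len(target)}"
        )

    # Uniqueness: the key column should identify each target row exactly once.
    keys = [row.get(key) for row in target]
    if len(keys) != len(set(keys)):
        failures.append(f"duplicate values in key column '{key}'")

    # Non-null: required columns must be populated in the target.
    for column in required:
        missing = sum(1 for row in target if row.get(column) is None)
        if missing:
            failures.append(
                f"{missing} null value(s) in required column '{column}'"
            )

    return failures


# Hypothetical sample data: the second email was lost during the move.
source = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
]
target = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
]

for failure in validate_transfer(source, target, key="id", required=["email"]):
    print(failure)
```

Checks like these only catch the problems they were written to look for, which is one reason the limitations of data validation testing matter.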
