Simplifying Continuous Data Processing Using Stream Native Storage In Pravega with Tom Kaitchuck - Episode 63

Data Engineering Podcast

DECEMBER 31, 2018

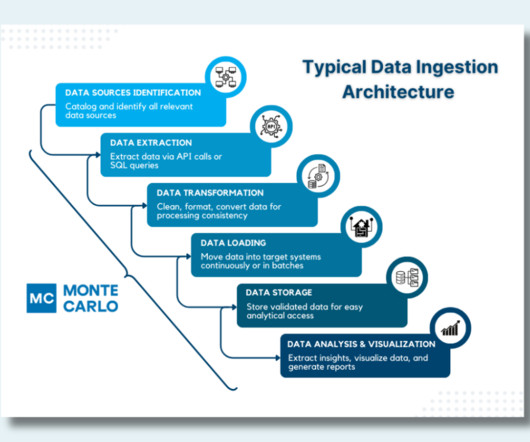

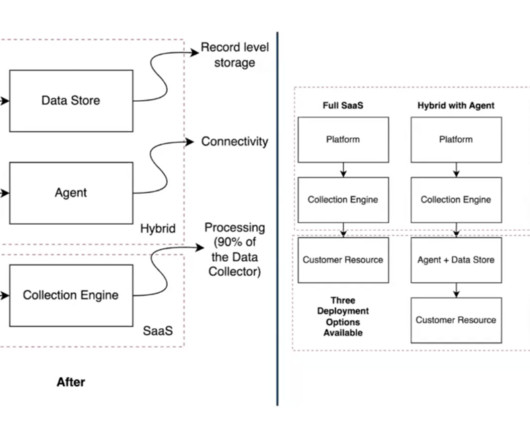

Summary As more companies and organizations are working to gain a real-time view of their business, they are increasingly turning to stream processing technologies to fullfill that need. However, the storage requirements for continuous, unbounded streams of data are markedly different than that of batch oriented workloads.

Let's personalize your content