Complete Guide to Data Transformation: Basics to Advanced

Ascend.io

OCTOBER 28, 2024

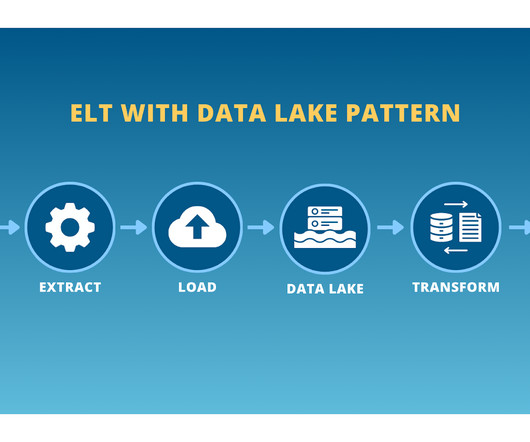

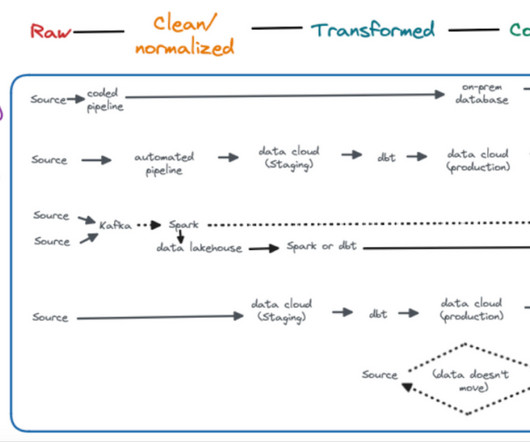

What is Data Transformation?

Data transformation is the process of converting raw data into a usable format so that it can generate insights. It involves cleaning, normalizing, validating, and enriching data so that the result is consistent and ready for analysis.
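The following minimal sketch in plain Python makes the four steps concrete. It cleans, normalizes, validates, and enriches a few customer records, and it does not assume any particular library or platform. The field names, the validation rules, and the `COUNTRY_TO_REGION` lookup table are hypothetical and chosen only for illustration. They are not part of any specific tool.

```python
from typing import Optional

# Hypothetical reference table used for the enrichment step.
COUNTRY_TO_REGION = {"US": "North America", "CA": "North America", "DE": "Europe"}


def clean(record: dict) -> Optional[dict]:
    """Trim whitespace from string fields; drop records missing required fields."""
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    if not cleaned.get("email") or not cleaned.get("amount"):
        return None
    return cleaned


def normalize(record: dict) -> dict:
    """Bring fields into one consistent representation (case, types)."""
    return {
        **record,
        "email": record["email"].lower(),
        "country": (record.get("country") or "").upper(),
        "amount": float(record["amount"]),
    }


def validate(record: dict) -> bool:
    """Apply simple (hypothetical) business rules."""
    return "@" in record["email"] and record["amount"] >= 0


def enrich(record: dict) -> dict:
    """Add derived context from the reference table."""
    return {**record, "region": COUNTRY_TO_REGION.get(record["country"], "Unknown")}


def transform(raw_records: list[dict]) -> list[dict]:
    """Run clean -> normalize -> validate -> enrich, skipping rejected rows."""
    result = []
    for raw in raw_records:
        cleaned = clean(raw)
        if cleaned is None:
            continue
        normalized = normalize(cleaned)
        if validate(normalized):
            result.append(enrich(normalized))
    return result


# Hypothetical raw input with inconsistent casing, stray whitespace, and bad rows.
raw = [
    {"email": "  Alice@Example.COM ", "country": "us", "amount": " 42.50"},
    {"email": "bob@example.com", "country": "de", "amount": "-5"},  # fails validation
    {"email": "", "country": "ca", "amount": "10"},                 # removed by cleaning
    {"email": "carol@example.com", "country": "fr", "amount": "7"},  # unknown region
]

for row in transform(raw):
    print(row)
```

Each step is a separate function, so it can be tested on its own or reordered. Real pipelines often express the same stages in SQL or a dataframe library instead of row-by-row loops, but the sequence of operations stays the same.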