How to Become a Databricks Certified Apache Spark Developer?

ProjectPro

FEBRUARY 21, 2023

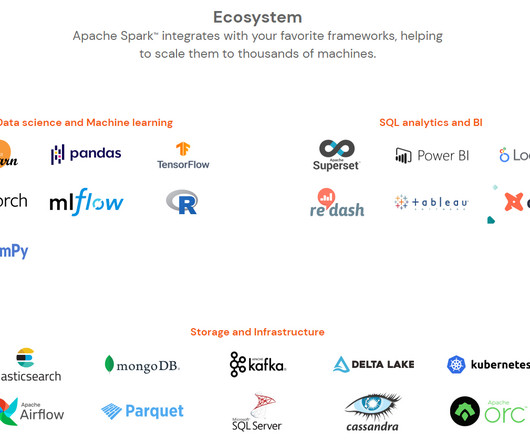

Apache Spark is one of the most widely used scalable, in-memory distributed data processing engines. It can handle batch processing, real-time stream processing, and analytics workloads. Because its batch and streaming capabilities are so strong, Spark is well positioned to drive the next major shift in data processing.
