Top 16 Data Science Job Roles To Pursue in 2024

Knowledge Hut

DECEMBER 26, 2023

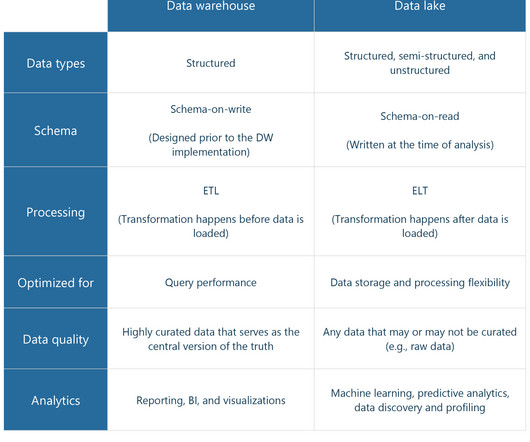

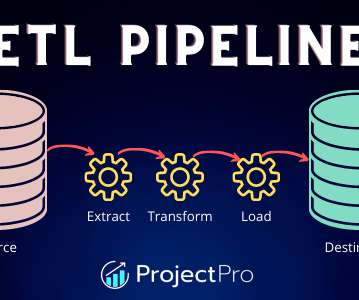

According to the World Economic Forum, the amount of data generated per day will reach 463 exabytes (1 exabyte = 10^9 gigabytes) globally by the year 2025. They use technologies such as Storm or Spark, HDFS, and MapReduce; query tools such as Pig, Hive, and Impala; and NoSQL databases such as MongoDB, Cassandra, and HBase.
