Implementing the Netflix Media Database

Netflix Tech

DECEMBER 14, 2018

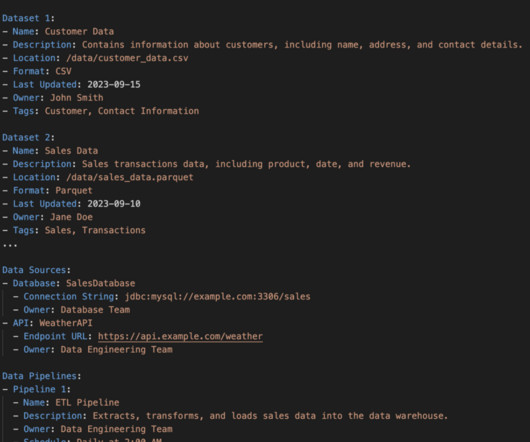

In the previous blog posts in this series, we introduced the Netflix Media DataBase (NMDB) and its salient "Media Document" data model. A fundamental requirement for any lasting data system is that it scales along with the growth of the business applications it aims to serve.
