Pydantic Tutorial: Data Validation in Python Made Simple

KDnuggets

MARCH 25, 2024

Learn how to use Pydantic, a popular data validation library, to model and validate your data. Want to write more robust Python applications?

Data Validation Related Topics

Towards Data Science

APRIL 30, 2024

Discussing the basic principles and methodology of data validation.

Precisely

SEPTEMBER 25, 2023

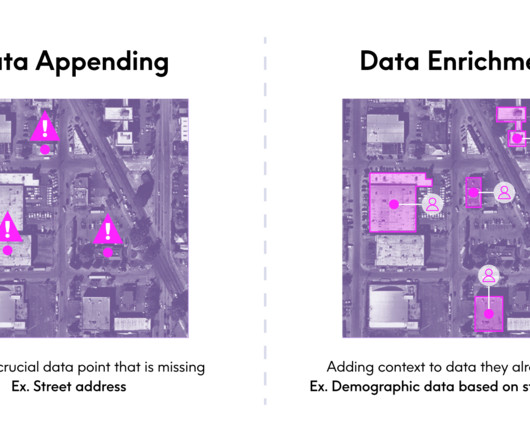

An important part of this journey is the data validation and enrichment process. Defining Data Validation and Enrichment Processes: Before we explore the benefits of data validation and enrichment and how these processes support the data you need for powerful decision-making, let’s define each term.

KDnuggets

AUGUST 29, 2023

New features and concepts.

Monte Carlo

AUGUST 8, 2023

The Definitive Guide to Data Validation Testing: Data validation testing ensures your data maintains its quality and integrity as it is transformed and moved from its source to its target destination. It’s also important to understand the limitations of data validation testing.

Precisely

JULY 24, 2023

When an organization fails to standardize and verify address information, enriching the data with reliable, trustworthy external information is difficult. To Deliver Standout Results, Start by Improving Data Integrity: Critical business outcomes depend heavily on the quality of an organization’s data.

Monte Carlo

MARCH 24, 2023

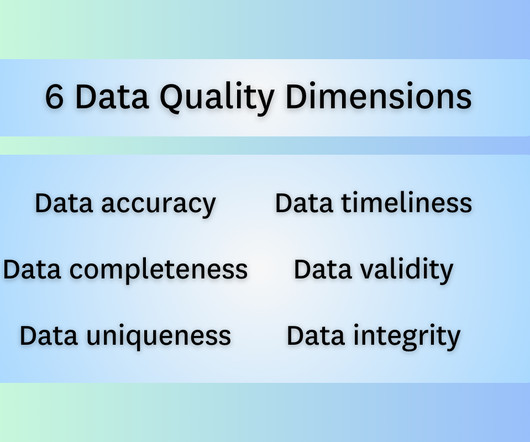

The data doesn’t accurately represent the real heights of the animals, so it lacks validity. Let’s dive deeper into these two crucial concepts, both essential for maintaining high-quality data. What Is Data Validity?

Monte Carlo

FEBRUARY 22, 2023

The annoying red notices you get when you sign up for something online saying things like “your password must contain at least one letter, one number, and one special character” are examples of data validity rules in action. It covers not just data validity, but many more data quality dimensions, too.
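The password rule quoted above can be sketched as a small validity check. This is only an illustration using the standard library: the function name `password_problems` and the choice of `string.punctuation` as the set of "special characters" are assumptions, not something the article specifies.

```python
import string


def password_problems(password):
    """Return a list of violated rules; an empty list means the password is valid.

    Assumption: "special character" means any ASCII punctuation character.
    """
    problems = []
    if not any(ch.isalpha() for ch in password):
        problems.append("must contain at least one letter")
    if not any(ch.isdigit() for ch in password):
        problems.append("must contain at least one number")
    if not any(ch in string.punctuation for ch in password):
        problems.append("must contain at least one special character")
    return problems


# Each message corresponds to one "red notice" a sign-up form might show.
print(password_problems("abcdef"))
print(password_problems("abc123!"))
```

Returning every violated rule, rather than stopping at the first one, mirrors how sign-up forms usually list all problems at once.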

Data Engineering Weekly

MAY 27, 2023

Jobs at the 75th percentile in Amsterdam, London, and Dublin pay nearly 50% more than those in Berlin. Trivago: Implementing Data Validation with Great Expectations in Hybrid Environments. The article by Trivago discusses the integration of data validation with Great Expectations.

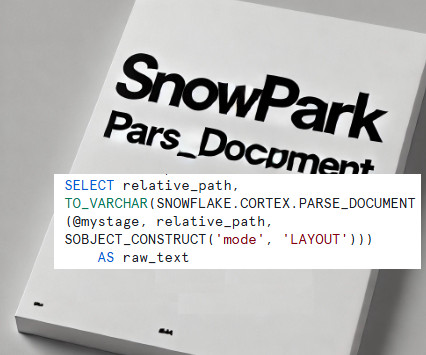

Snowflake

JANUARY 30, 2025

Snowflake partner Accenture, for example, demonstrated how insurance claims professionals can leverage AI to process unstructured data including government IDs and reports to make document gathering, data validation, claims validation and claims letter generation more streamlined and efficient.

Cloudyard

APRIL 22, 2025

This is where “Snowpark Magic: Auto-Validate Your S3 to Snowflake Data Loads” comes into play: a powerful approach to automating row-level validation between staged source files and their corresponding Snowflake tables, ensuring trust and integrity across your data pipelines.

Precisely

JANUARY 15, 2024

When you delve into the intricacies of data quality, however, these two important pieces of the puzzle are distinctly different. Knowing the distinction can help you to better understand the bigger picture of data quality. What Is Data Validation? Read What Is Data Verification, and How Does It Differ from Validation?

RudderStack

MAY 18, 2021

In this post, you will learn about common challenges to data validation and how RudderStack can break them down and make validation a smooth step in your workflow.

The Pragmatic Engineer

OCTOBER 17, 2024

Web frontend: Angular 17 with server-side rendering support (SSR).

ArcGIS

MARCH 10, 2025

This is the third installment in a series of blog articles focused on using ArcGIS Notebooks to map gentrification in US cities.

Acceldata

DECEMBER 5, 2022

Validation: Learn how a data observability solution can automatically clean and validate incoming data pipelines in real time.

Christophe Blefari

MARCH 15, 2024

Understand how BigQuery inserts, deletes and updates: once again, Vu took the time to dive deep into BigQuery internals, this time to explain how data management is done. Pandera, a data validation library for dataframes, now supports Polars.

Data Engineering Weekly

FEBRUARY 2, 2025

Key features include workplan auctioning for resource allocation, in-progress remediation for handling data validation failures, and integration with external Kafka topics, achieving a throughput of 1.2 million entities per second in production.

Ascend.io

OCTOBER 28, 2024

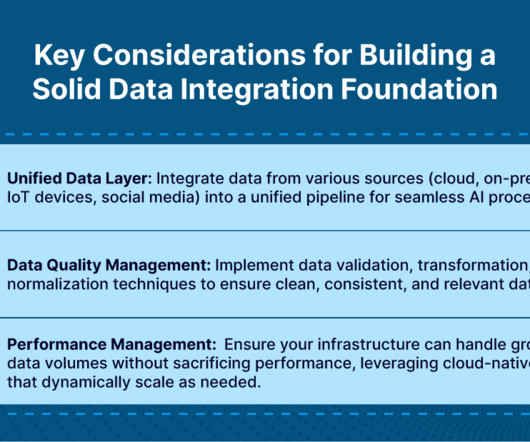

It is important to note that normalization often overlaps with the data cleaning process, as it helps to ensure consistency in data formats, particularly when dealing with different sources or inconsistent units. Data Validation: Data validation ensures that the data meets specific criteria before processing.

DataKitchen

DECEMBER 6, 2024

Get the DataOps Advantage: Learn how to apply DataOps to monitor, iterate, and automate quality checks, keeping data quality high without slowing down. Practical Tools to Sprint Ahead: Dive into hands-on tips with open-source tools that supercharge data validation and observability. Want More Detail? Read the popular blog article.

Cloudyard

JANUARY 15, 2025

Automate Data Validation: The logic ensures invalid entries, such as missing policy numbers, are handled gracefully. Parse PDF Output Benefits: Advanced Parsing with Regex: Using regex within Snowpark, we accurately extract key fields like the policy holder’s name while eliminating irrelevant text.

Towards Data Science

FEBRUARY 6, 2023

Pydantic models expect to receive JSON-like data, so any data we pass to our model for validation must be a dictionary. This really allows a lot of granularity with data validation without writing a ton of code. HOME: str GUILD: str PAY: int = pydantic.Field(.,
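A minimal sketch of the idea in this excerpt, assuming the `pydantic` package is installed. The field names `HOME`, `GUILD`, and `PAY` come from the excerpt; the model name `Member`, the helper `is_valid`, and the `gt=0` constraint are hypothetical, since the original `Field(...)` arguments are cut off.

```python
from pydantic import BaseModel, Field, ValidationError


class Member(BaseModel):
    HOME: str
    GUILD: str
    # Assumption: pay must be a positive integer; the article's real
    # constraints are truncated in the excerpt.
    PAY: int = Field(..., gt=0)


def is_valid(record: dict) -> bool:
    """Validate a dictionary by unpacking it into the model."""
    try:
        Member(**record)
        return True
    except ValidationError:
        return False


good = {"HOME": "Amsterdam", "GUILD": "Data", "PAY": 100}
negative_pay = {"HOME": "Amsterdam", "GUILD": "Data", "PAY": -5}
missing_guild = {"HOME": "Amsterdam", "PAY": 100}

print(is_valid(good), is_valid(negative_pay), is_valid(missing_guild))
```

Declaring the constraint on the field keeps the rule next to the data it describes, which is the granularity the excerpt refers to.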

Monte Carlo

JULY 30, 2024

In this article, we’ll dive into the six commonly accepted data quality dimensions with examples, how they’re measured, and how they can better equip data teams to manage data quality effectively. Table of Contents What are Data Quality Dimensions? What are the 7 Data Quality Dimensions?

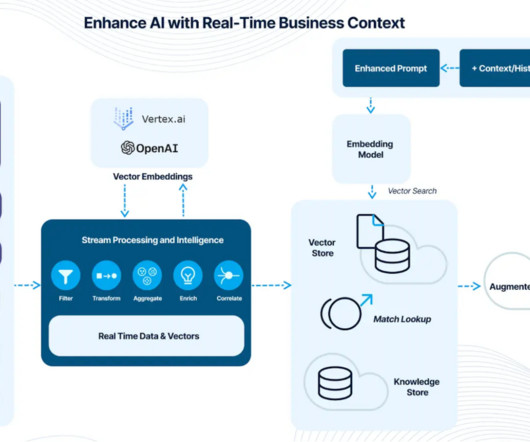

Striim

APRIL 18, 2025

How Striim Enables High-Quality, AI-Ready Data Striim helps organizations solve these challenges by ensuring real-time, clean, and continuously available data for AI and analytics. Process and clean data as it moves so AI and analytics work with trusted, high-quality inputs.

Towards Data Science

JANUARY 7, 2024

If the data changes over time, you might end up with results you didn’t expect, which is not good. To avoid this, we often use data profiling and data validation techniques. Data profiling gives us statistics about different columns in our dataset. It lets you log all sorts of data. So let’s dive in!

Precisely

NOVEMBER 18, 2024

So, you should leverage them to dynamically generate data validation rules rather than relying on static, manually set rules. Leverage AI to enhance governance. “Large language models are excellent at inferring hidden relationships and context,” says Anandarajan. Focus on metadata management.

Striim

JANUARY 17, 2025

To achieve accurate and reliable results, businesses need to ensure their data is clean, consistent, and relevant. This proves especially difficult when dealing with large volumes of high-velocity data from various sources.

Edureka

APRIL 22, 2025

Data Quality and Governance In 2025, there will also be more attention paid to data quality and control. Companies now know that bad data quality leads to bad analytics and, ultimately, bad business strategies. Companies all over the world will keep checking that they are following global data security rules like GDPR.

Wayne Yaddow

MARCH 28, 2025

In an AI LLM pipeline, standardization improves data interoperability and streamlines later analytical steps, which directly improves model correctness and interpretability. Third: The data integration process should include stringent data validation and reconciliation protocols.

Databand.ai

MAY 30, 2023

Here are several reasons data quality is critical for organizations: Informed decision making: Low-quality data can result in incomplete or incorrect information, which negatively affects an organization’s decision-making process. Introducing checks like format validation (e.g.,

Tweag

MAY 16, 2023

To minimize the risk of misconfigurations, Nickel features (opt-in) static typing and contracts, a powerful and extensible data validation framework. For configuration data, we tend to use contracts. Contracts are a principled way of writing and applying runtime data validators.

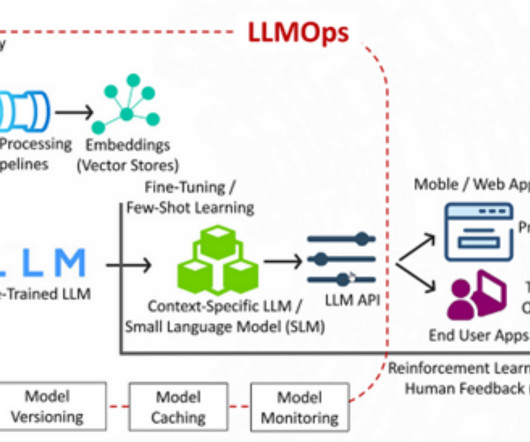

Data Engineering Podcast

MAY 26, 2024

Data center migration: Physical relocation or consolidation of data centers Virtualization migration: Moving from physical servers to virtual machines (or vice versa) Section 3: Technical Decisions Driving Data Migrations End-of-life support: Forced migration when older software or hardware is sunsetted Security and compliance: Adopting new platforms (..)

Monte Carlo

NOVEMBER 14, 2024

Bad data can infiltrate at any point in the data lifecycle, so this end-to-end monitoring helps ensure there are no coverage gaps and even accelerates incident resolution. “Data and data pipelines are constantly evolving and so data quality monitoring must as well,” said Lior.

Precisely

DECEMBER 13, 2023

Read our eBook Validation and Enrichment: Harnessing Insights from Raw Data In this ebook, we delve into the crucial data validation and enrichment process, uncovering the challenges organizations face and presenting solutions to simplify and enhance these processes.

Ascend.io

NOVEMBER 14, 2024

Tips for Automating Tasks: Identify Repetitive Tasks: Identify processes that are repetitive and prone to human error, such as data validation, and automate them. Use Workflow Orchestration Tools: Implement pipeline orchestration tools to automate the entire data pipeline, reducing manual touch points and increasing consistency.

Towards Data Science

MAY 11, 2023

The schema defines which fields are required and the data types of the fields, whereas the data is represented by a generic data structure per Principle #3. def validate(data): assert set(schema["required"]).issubset(set(data.keys())),
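The truncated `validate` snippet above checks that the required fields are present. A hedged sketch of the same idea follows, with an added type check: the example schema, its `"types"` key, and the error message wording are assumptions made for illustration, not the article's own code.

```python
# Hypothetical schema: which fields are required, and the expected type of each field.
schema = {
    "required": ["name", "age"],
    "types": {"name": str, "age": int, "email": str},
}


def validate(data, schema):
    """Return a list of error messages; an empty list means the data is valid."""
    errors = []
    # Same required-field test as the excerpt, but collecting errors
    # instead of asserting.
    missing = set(schema["required"]) - set(data.keys())
    for field in sorted(missing):
        errors.append(f"missing required field: {field}")
    # Check the type of every field that is present and declared in the schema.
    for field, expected in schema["types"].items():
        if field in data and not isinstance(data[field], expected):
            actual = type(data[field]).__name__
            errors.append(f"field {field!r} should be {expected.__name__}, got {actual}")
    return errors


print(validate({"name": "Ada", "age": 36}, schema))
print(validate({"name": "Ada", "age": "36"}, schema))
```

The data stays a plain dictionary and the schema is a separate value, which matches the separation between schema and generic data structure that the excerpt describes.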

Precisely

APRIL 7, 2025

Transformations: Know if there are changes made to the data upstream (e.g., If you don’t know what transformations have been made to the data, I’d suggest you not use it. Data validation and verification: Regularly validate both input data and the appended/enriched data to identify and correct inaccuracies before they impact decisions.

Precisely

NOVEMBER 20, 2023

We work with organizations around the globe that have diverse needs but can only achieve their objectives with expertly curated data sets containing thousands of different attributes.

Data Engineering Podcast

JANUARY 26, 2020

__init__ Interview SQLAlchemy PostgreSQL Podcast Episode RedShift BigQuery Spark Cloudera DataBricks Great Expectations Data Docs Great Expectations Data Profiling Apache NiFi Amazon Deequ Tensorflow Data Validation The intro and outro music is from The Hug by The Freak Fandango Orchestra / CC BY-SA Support Data Engineering Podcast

DataKitchen

MAY 14, 2024

Chris will overview data at rest and in use, with Eric returning to demonstrate the practical steps in data testing for both states. Session 3: Mastering Data Testing in Development and Migration During our third session, the focus will shift towards regression and impact assessment in development cycles.

Data Engineering Weekly

APRIL 30, 2023

Watch a panel of data leaders as they discuss how to build strategies for measuring data team ROI. Trivago: Implementing Data Validation with Great Expectations in Hybrid Environments. The article by Trivago discusses the integration of data validation with Great Expectations.

Data Engineering Podcast

SEPTEMBER 25, 2022

What are the core abstractions that you identified for simplifying the declaration of data validations? What are the ways that reliability is measured for data assets (for example, what is the equivalent to site uptime)?
