Data Engineering Weekly #206


FEBRUARY 2, 2025

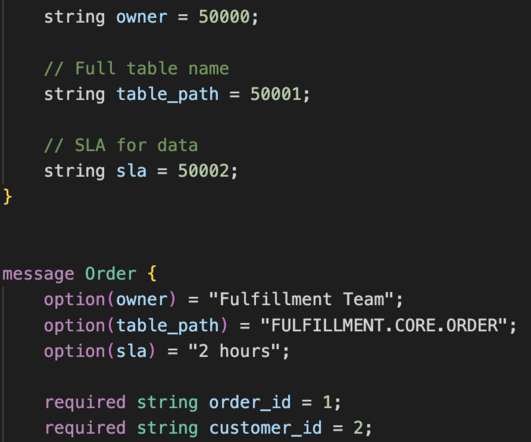

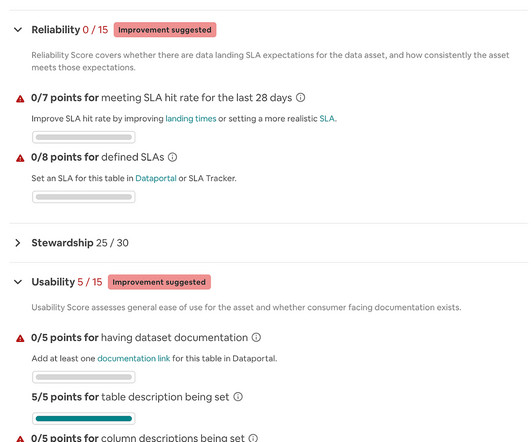

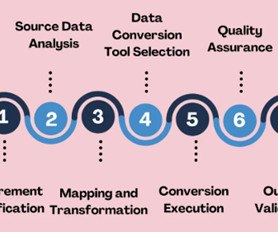

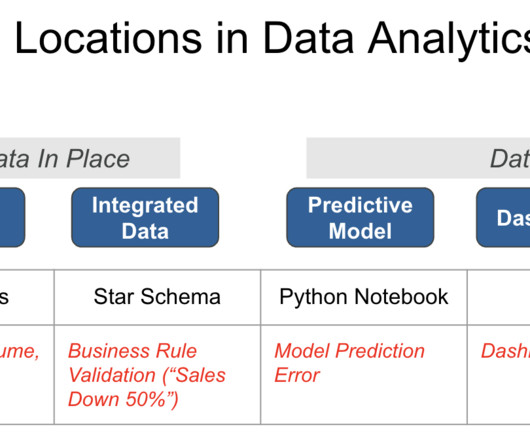

Shifting left means moving data processing upstream, closer to the source. Producers publish high-quality data through well-defined data products and contracts, so more teams can consume it directly. This reduces duplicated processing, improves data integrity, and bridges the gap between the operational and analytical data domains.
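To make "data contracts" a little more concrete, here is a minimal Python sketch. It assumes a contract is simply a list of typed, optionally nullable fields that the producing team checks before publishing. The names `FieldSpec`, `ORDER_CONTRACT`, `validate_record`, and `publish` are hypothetical and not taken from any particular contract tool. In practice, contracts are often expressed in a schema language such as JSON Schema, Avro, or Protobuf.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class FieldSpec:
    """One field in a hypothetical data contract: name, exact type, nullability."""
    name: str
    type_: type
    nullable: bool = False


# Hypothetical contract for an "orders" data product, owned by the producing team.
ORDER_CONTRACT = (
    FieldSpec("order_id", str),
    FieldSpec("customer_id", str),
    FieldSpec("amount_cents", int),
    FieldSpec("coupon_code", str, nullable=True),
)


def validate_record(record: dict[str, Any], contract=ORDER_CONTRACT) -> list[str]:
    """Return the list of contract violations; an empty list means the record conforms."""
    errors = []
    expected = {spec.name for spec in contract}
    # Fields the contract does not declare are rejected rather than silently passed on.
    for extra in sorted(set(record) - expected):
        errors.append(f"unexpected field: {extra}")
    for spec in contract:
        value = record.get(spec.name)
        if value is None:
            if not spec.nullable:
                errors.append(f"missing required field: {spec.name}")
            continue
        # Exact type match, so e.g. a bool is not accepted where an int is declared.
        if type(value) is not spec.type_:
            errors.append(
                f"{spec.name}: expected {spec.type_.__name__}, got {type(value).__name__}"
            )
    return errors


def publish(records: list[dict[str, Any]]):
    """Split records at the source: conforming ones go downstream, the rest are quarantined."""
    accepted, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(record)
    return accepted, rejected


if __name__ == "__main__":
    good = {"order_id": "o-1", "customer_id": "c-9", "amount_cents": 1299, "coupon_code": None}
    bad = {"order_id": "o-2", "amount_cents": "12.99"}
    accepted, rejected = publish([good, bad])
    print(len(accepted), "accepted,", len(rejected), "rejected")
    for record, errors in rejected:
        print(record["order_id"], errors)
```

Running the check at the producer is the "shift left" step in this sketch. A malformed record is caught once, at the source, instead of being re-validated or silently mishandled by every downstream consumer.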
