Azure Data Engineer Certification Path (DP-203): 2023 Roadmap

Knowledge Hut

SEPTEMBER 26, 2023

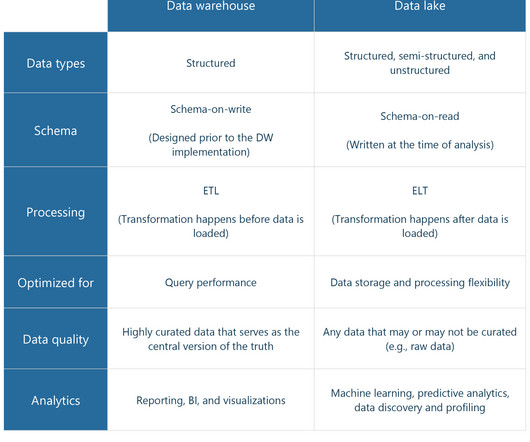

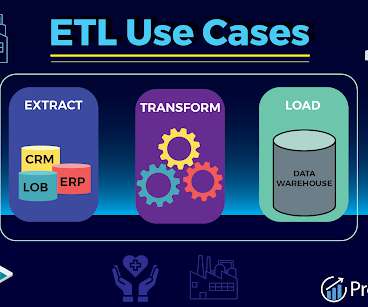

As Azure Data Engineers, we should have extensive knowledge of data modelling and ETL (extract, transform, load) procedures, along with deep expertise in creating and managing data pipelines, data lakes, and data warehouses. Carrying out ETL activities is itself part of a data engineer's responsibilities.
