Zenlytic Is Building You A Better Coworker With AI Agents

Data Engineering Podcast

MAY 18, 2024

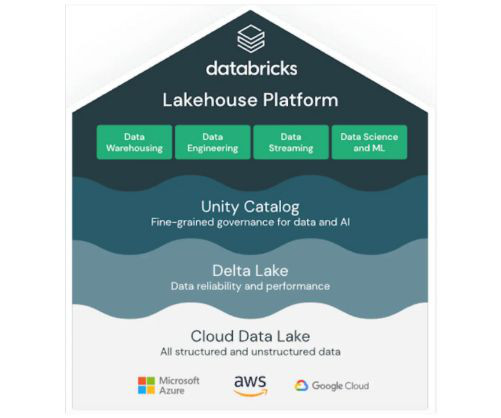

Data lakes are notoriously complex. For data engineers who battle to build and scale high-quality data workflows on the data lake, Starburst is an end-to-end data lakehouse platform built on Trino, the query engine Apache Iceberg was designed for, with complete support for all table formats including Apache Iceberg, Hive, and Delta Lake.