5 Free Courses to Master Data Engineering

KDnuggets

NOVEMBER 30, 2023

Data engineers must prepare and manage the infrastructure and tools necessary for the whole data workflow in a data-driven company.

Data Engineering Podcast

FEBRUARY 18, 2024

Summary A data lakehouse is intended to combine the benefits of data lakes (cost effective, scalable storage and compute) and data warehouses (user friendly SQL interface). Data lakes are notoriously complex. Join in with the event for the global data community, Data Council Austin.

Data Engineering Podcast

MAY 18, 2024

Summary The purpose of business intelligence systems is to allow anyone in the business to access and decode data to help them make informed decisions. Unfortunately this often turns into an exercise in frustration for everyone involved due to complex workflows and hard-to-understand dashboards. Data lakes are notoriously complex.

KDnuggets

DECEMBER 6, 2023

This week on KDnuggets: Discover GitHub repositories from machine learning courses, bootcamps, books, tools, interview questions, cheat sheets, MLOps platforms, and more to master ML and secure your dream job • Data engineers must prepare and manage the infrastructure and tools necessary for the whole data workflow in a data-driven company • And much, (..)

Snowflake

APRIL 17, 2024

In today’s data-driven world, developer productivity is essential for organizations to build effective and reliable products, accelerate time to value, and fuel ongoing innovation. This allows your applications to handle large data sets and complex workflows efficiently.

ThoughtSpot

SEPTEMBER 6, 2023

Those coveted insights live at the end of a process lovingly known as the data pipeline. The pathway from ETL to actionable analytics can often feel disconnected and cumbersome, leading to frustration for data teams and long wait times for business users. Keep reading to see how it works. What is a SpotApp?

Data Engineering Podcast

APRIL 7, 2024

Summary Maintaining a single source of truth for your data is the biggest challenge in data engineering. Different roles and tasks in the business need their own ways to access and analyze the data in the organization. Dagster offers a new approach to building and running data platforms and data pipelines.

Data Engineering Podcast

JANUARY 30, 2022

Summary Pandas is a powerful tool for cleaning, transforming, manipulating, or enriching data, among many other potential uses. As a result it has become a standard tool for data engineers for a wide range of applications. The only thing worse than having bad data is not knowing that you have it.

Data Engineering Podcast

DECEMBER 24, 2023

Summary Kafka has become a ubiquitous technology, offering a simple method for coordinating events and data across different systems. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Introducing RudderStack Profiles. Data lakes are notoriously complex.

Data Engineering Podcast

FEBRUARY 4, 2024

In this episode Yingjun Wu explains how it is architected to power analytical workflows on continuous data flows, and the challenges of making it responsive and scalable. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Data lakes are notoriously complex.

Data Engineering Podcast

SEPTEMBER 17, 2023

Summary A significant amount of time in data engineering is dedicated to building connections and semantic meaning around pieces of information. Linked data technologies provide a means of tightly coupling metadata with raw information. Go to dataengineeringpodcast.com/materialize today to get 2 weeks free!

Data Engineering Weekly

NOVEMBER 24, 2024

Editor’s Note: Launching Data & Gen-AI courses in 2025 I can’t believe DEW will reach almost its 200th edition soon. What I started as a fun hobby has become one of the top-rated newsletters in the data engineering industry. We are planning many exciting product lines to trial and launch in 2025.

Data Engineering Podcast

MARCH 17, 2024

Summary A significant portion of data workflows involve storing and processing information in database engines. In this episode Gleb Mezhanskiy, founder and CEO of Datafold, discusses the different error conditions and solutions that you need to know about to ensure the accuracy of your data. Your first 30 days are free!

Data Engineering Podcast

MARCH 24, 2024

Summary A core differentiator of Dagster in the ecosystem of data orchestration is their focus on software defined assets as a means of building declarative workflows. Data lakes are notoriously complex. Your first 30 days are free! Want to see Starburst in action? What problems are you trying to solve with Dagster+?

Data Engineering Podcast

JUNE 16, 2024

Summary Stripe is a company that relies on data to power their products and business. In this episode Kevin Liu shares some of the interesting features that they have built by combining those technologies, as well as the challenges that they face in supporting the myriad workloads that are thrown at this layer of their data platform.

Data Engineering Podcast

JANUARY 21, 2024

A substantial amount of the data that is being managed in these systems is related to customers and their interactions with an organization. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Data lakes are notoriously complex. Want to see Starburst in action?

Data Engineering Podcast

MAY 12, 2024

Summary Building a data platform is a substantial engineering endeavor. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management This episode is supported by Code Comments, an original podcast from Red Hat. Data lakes are notoriously complex.

Data Engineering Podcast

FEBRUARY 11, 2024

Summary Sharing data is a simple concept, but complicated to implement well. There are also numerous technical considerations to be made, particularly if the producer and consumer of the data aren't using the same platforms. Dagster offers a new approach to building and running data platforms and data pipelines.

Data Engineering Podcast

JUNE 30, 2024

Petr shares his journey from being an engineer to founding Synq, emphasizing the importance of treating data systems with the same rigor as engineering systems. He discusses the challenges and solutions in data reliability, including the need for transparency and ownership in data systems.

Data Engineering Podcast

AUGUST 28, 2022

Summary The dream of every engineer is to automate all of their tasks. For data engineers, this is a monumental undertaking. Orchestration engines are one step in that direction, but they are not a complete solution. Atlan is the metadata hub for your data ecosystem.

Data Engineering Podcast

JUNE 2, 2024

Summary Modern businesses aspire to be data driven, and technologists enjoy working through the challenge of building data systems to support that goal. Data governance is the binding force between these two parts of the organization. What are some of the misconceptions that you encounter about data governance?

Data Engineering Podcast

JANUARY 28, 2024

Summary Monitoring and auditing IT systems for security events requires the ability to quickly analyze massive volumes of unstructured log data. Cliff Crosland co-founded Scanner to provide fast querying of high scale log data for security auditing. A query engine is useless without data to analyze.

Data Engineering Podcast

JANUARY 7, 2024

Summary Data processing technologies have dramatically improved in their sophistication and raw throughput. Unfortunately, the volumes of data that are being generated continue to double, requiring further advancements in the platform capabilities to keep up. What are the open questions today in technical scalability of data engines?

Data Engineering Podcast

APRIL 21, 2024

Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Dagster offers a new approach to building and running data platforms and data pipelines. Data lakes are notoriously complex. From a product perspective, what are the data challenges that are posed by email?

Data Engineering Podcast

MARCH 10, 2024

Summary Data lakehouse architectures are gaining popularity due to the flexibility and cost effectiveness that they offer. The link that bridges the gap between data lake and warehouse capabilities is the catalog. Data lakes are notoriously complex. Join us at the top event for the global data community, Data Council Austin.

Data Engineering Podcast

APRIL 14, 2024

Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management This episode is brought to you by Datafold – a testing automation platform for data engineers that prevents data quality issues from entering every part of your data workflow, from migration to dbt deployment.

Data Engineering Podcast

JUNE 23, 2024

Summary Data lakehouse architectures have been gaining significant adoption. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Data lakes are notoriously complex. What are the benefits of embedding Copilot into the data engine?

Data Engineering Podcast

DECEMBER 3, 2023

Summary The first step of data pipelines is to move the data to a place where you can process and prepare it for its eventual purpose. Data transfer systems are a critical component of data enablement, and building them to support large volumes of information is a complex endeavor. Data lakes are notoriously complex.

Data Engineering Podcast

NOVEMBER 26, 2023

Summary Building a data platform that is enjoyable and accessible for all of its end users is a substantial challenge. Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Introducing RudderStack Profiles. Data lakes are notoriously complex.

Data Engineering Podcast

DECEMBER 31, 2023

Summary Working with financial data requires a high degree of rigor due to the numerous regulations and the risks involved in security breaches. In this episode Andrey Korchack, CTO of fintech startup Monite, discusses the complexities of designing and implementing a data platform in that sector. Want to see Starburst in action?

Data Engineering Podcast

MAY 26, 2024

When that system is responsible for the data layer the process becomes more challenging. Sriram Panyam has been involved in several projects that required migration of large volumes of data in high traffic environments. Can you start by sharing some of your experiences with data migration projects?

Data Engineering Weekly

NOVEMBER 3, 2024

The challenges around memory, data size, and runtime are exciting to read. Sampling is an obvious strategy for data size, but the layered approach and dynamic inclusion of dependencies are some key techniques I learned with the case study. This count helps to ensure data consistency when deleting and compacting segments.

Data Engineering Podcast

MARCH 31, 2024

Summary Working with data is a complicated process, with numerous chances for something to go wrong. Identifying and accounting for those errors is a critical piece of building trust in the organization that your data is accurate and up to date. Dagster offers a new approach to building and running data platforms and data pipelines.

Data Engineering Podcast

FEBRUARY 25, 2024

Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Dagster offers a new approach to building and running data platforms and data pipelines. Data lakes are notoriously complex. Join us at the top event for the global data community, Data Council Austin.

Data Engineering Podcast

MARCH 3, 2024

Colleen Tartow has worked across all stages of the data lifecycle, and in this episode she shares her hard-earned wisdom about how to conduct an AI program for your organization. Data lakes are notoriously complex. Join us at the top event for the global data community, Data Council Austin. Your first 30 days are free!

Data Engineering Podcast

JUNE 9, 2024

Summary Streaming data processing enables new categories of data products and analytics. Unfortunately, reasoning about stream processing engines is complex and lacks sufficient tooling. Data lakes are notoriously complex. How have the requirements of generative AI shifted the demand for streaming data systems?

Data Engineering Podcast

APRIL 28, 2024

In this episode he explains the data collection and preparation process, the collection of model types and sizes that work together to power the experience, and how to incorporate it into your workflow to act as a second brain. Data lakes are notoriously complex. Your first 30 days are free! Want to see Starburst in action?

Christophe Blefari

SEPTEMBER 28, 2023

Hello, another edition of Data News. This week, we're going to take a step back and look at the current state of data platforms. What are the current trends, and why are people fighting over the concept of the modern data stack? Is the modern data stack dying?

Data Engineering Podcast

MAY 5, 2024

Announcements Hello and welcome to the Data Engineering Podcast, the show about modern data management Dagster offers a new approach to building and running data platforms and data pipelines. Data lakes are notoriously complex. Your first 30 days are free! Want to see Starburst in action?

Data Engineering Podcast

DECEMBER 18, 2022

Summary One of the reasons that data work is so challenging is that no single person or team owns the entire process. This introduces friction in the process of collecting, processing, and using data. To reduce the potential for broken pipelines, some teams have started to adopt the idea of data contracts.

Data Engineering Podcast

SEPTEMBER 11, 2022

Summary Any business that wants to understand their operations and customers through data requires some form of pipeline. Building reliable data pipelines is a complex and costly undertaking with many layered requirements. Data stacks are becoming more and more complex. Sifflet also offers a 2-week free trial.

Data Engineering Weekly

SEPTEMBER 29, 2024

Airbnb: Sandcastle - data/AI apps for everyone. Product ideas powered by data and AI must go through rapid iteration on shareable, lightweight live prototypes instead of static proposals. Grab: Enabling conversational data discovery with LLMs at Grab.

Analytics Vidhya

AUGUST 7, 2024

Introduction Apache Airflow is a crucial component in data orchestration and is known for its capability to handle intricate workflows and automate data pipelines. Many organizations have chosen it due to its flexibility and strong scheduling capabilities.

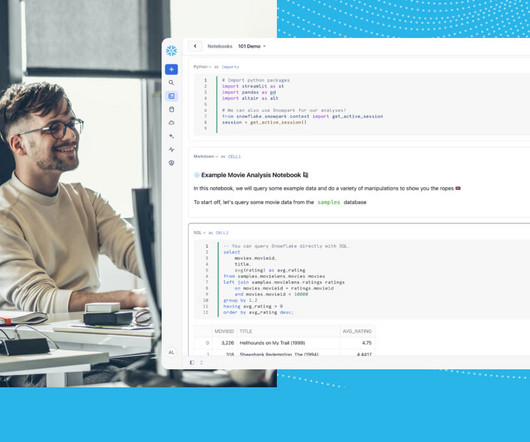

Snowflake

JUNE 6, 2024

You can now use Snowflake Notebooks to simplify the process of connecting to your data and to amplify your data engineering, analytics and machine learning workflows. Notebook usage follows the same consumption-based model as Snowflake’s compute engine. Train and manage your AI/ML models directly in your notebook.
