Data Engineering Projects

Start Data Engineering

JUNE 14, 2024

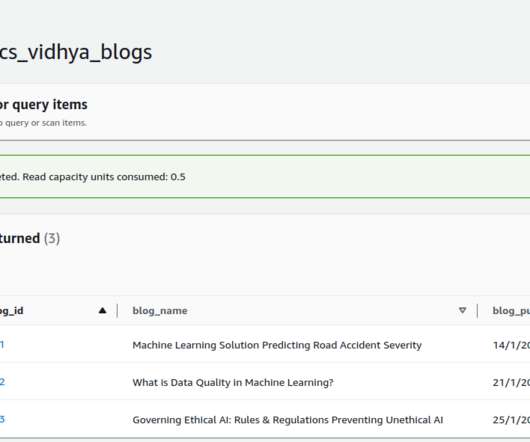

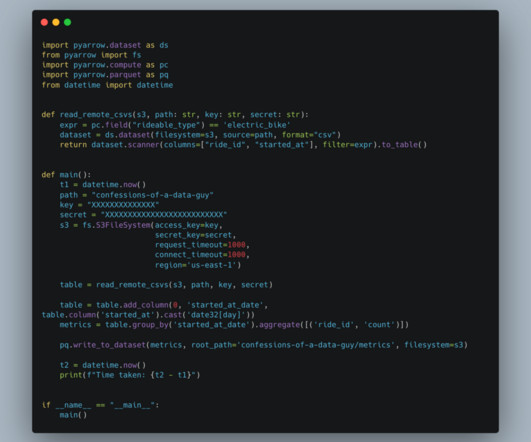

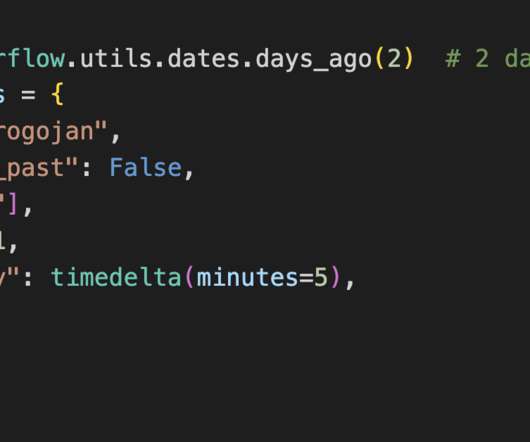

- Run Data Pipelines
- Batch pipelines
- Stream pipelines
- Event-driven pipelines
- LLM RAG pipelines

Introduction

Whether you are new to data engineering or have been in the data field for a few years, one of the most challenging parts of learning new frameworks is setting them up!
