A Guide to Data Pipelines (And How to Design One From Scratch)

Striim

SEPTEMBER 11, 2024

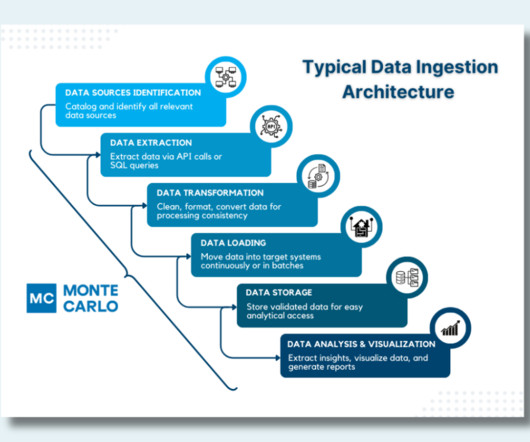

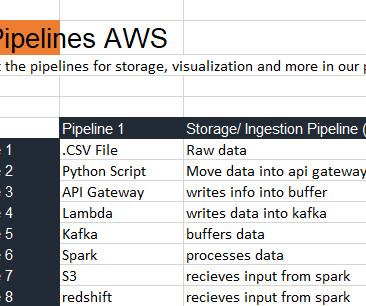

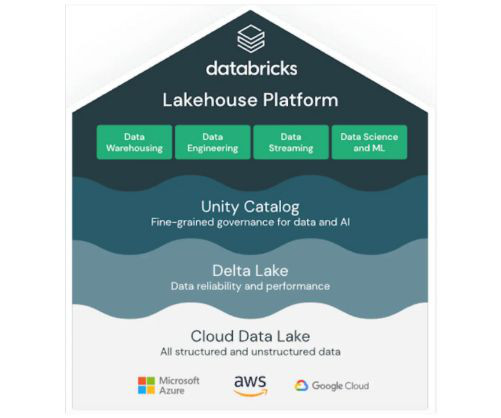

Data pipelines are the backbone of your business’s data architecture. Implementing a robust and scalable pipeline ensures you can effectively manage, analyze, and organize your growing data. That’s where real-time data and stream processing can help. In this guide, we’ll answer the question, “What are data pipelines?”
