Fueling Data-Driven Decision-Making with Data Validation and Enrichment Processes

Precisely

SEPTEMBER 25, 2023

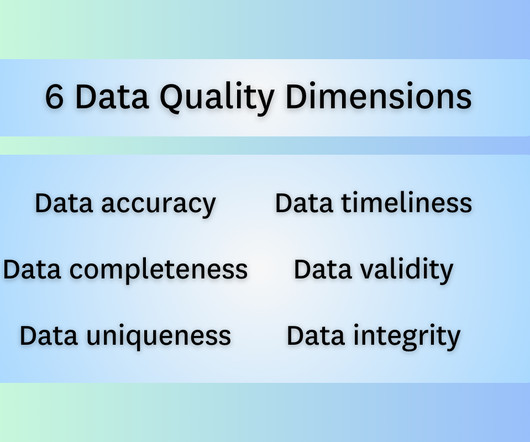

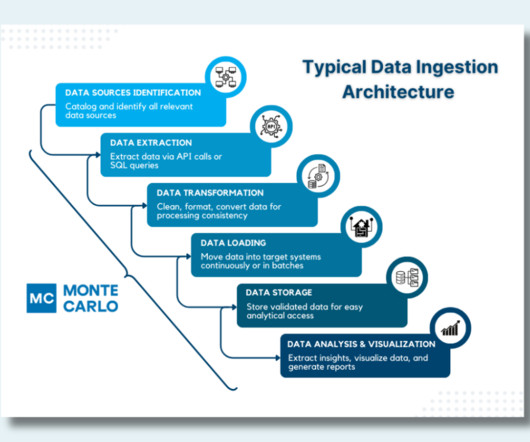

77% of data and analytics professionals say data-driven decision-making is the top goal for their data programs. Data-driven initiatives are certainly in demand, but their success hinges on … well, the data that supports them, and more specifically on the quality and integrity of that data.
