Top 16 Data Science Job Roles To Pursue in 2024

Knowledge Hut

DECEMBER 26, 2023

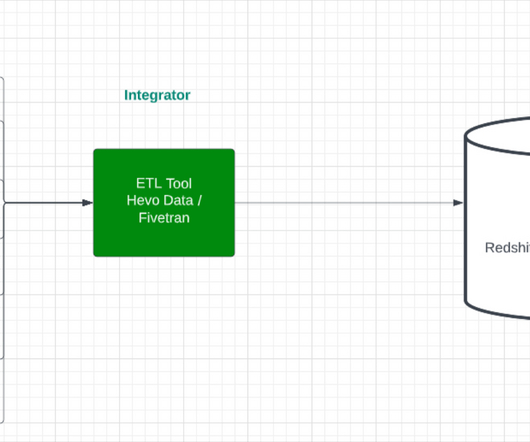

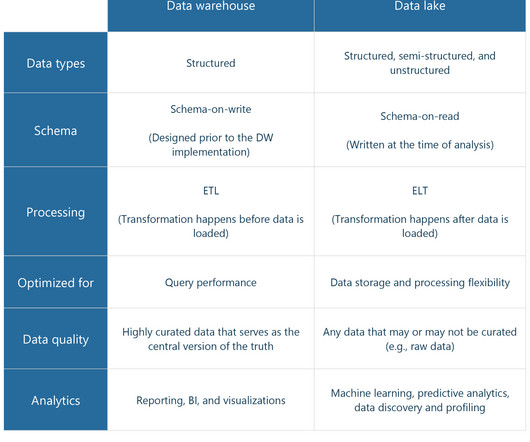

These skills are essential for collecting, cleaning, processing, analyzing, and managing large amounts of data to find trends and patterns. The data can be structured, unstructured, or both. In this article, we will look at some of the top Data Science job roles in demand in 2024.
