10 MongoDB Mini Project Ideas for Beginners with Source Code

ProjectPro

JUNE 6, 2025

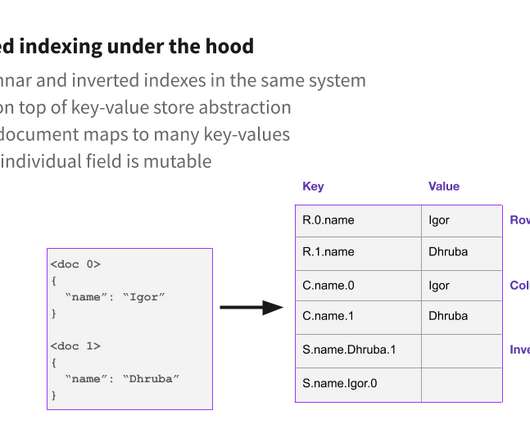

MongoDB, developed by MongoDB Inc., is a popular database that stores data as flexible, JSON-like documents, each made up of field-value pairs. This flexibility also lets developers use MongoDB to store and share files when they need to make stored data available to others (for example, through GridFS, MongoDB's specification for storing large files).

Which applications use MongoDB Atlas?
