10 AWS Redshift Project Ideas to Build Data Pipelines

ProjectPro

JUNE 6, 2025

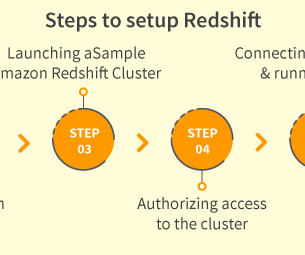

Since Amazon Redshift is based on the industry standard PostgreSQL, several SQL client applications work with minimum changes. You will first need to download Redshift’s ODBC driver from the official AWS website. After downloading and installing the ODBC driver: Set up the DSN connection for Redshift.

Let's personalize your content