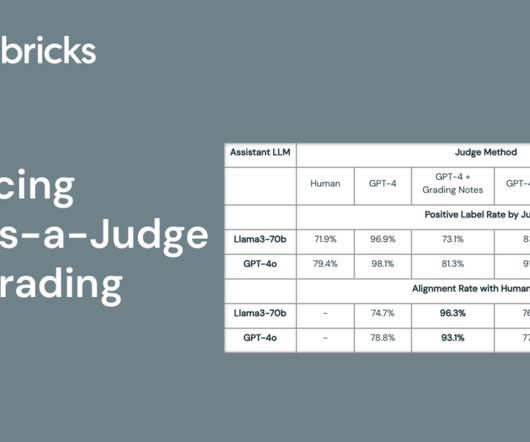

Enhancing LLM-as-a-Judge with Grading Notes

databricks

JULY 22, 2024

Evaluating long-form LLM outputs quickly and accurately is critical for rapid AI development. As a result, many developers wish to deploy LLM-as-judge methods.

KDnuggets

JULY 22, 2024

Let's learn to use Pandas pivot_table in Python to perform advanced data summarization.

databricks

JULY 22, 2024

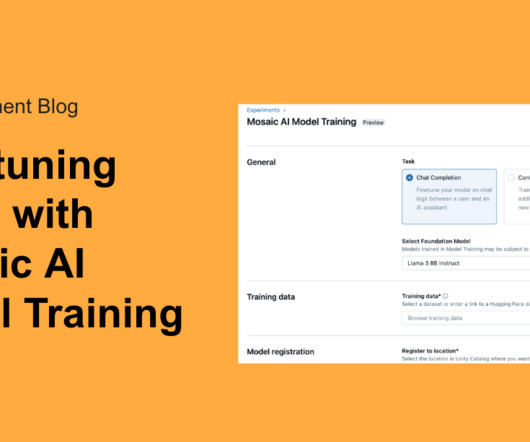

Today, we're thrilled to announce that Mosaic AI Model Training's support for fine-tuning GenAI models is now available in Public Preview. At Databricks.

KDnuggets

JULY 22, 2024

Go from zero to 100 with these free NLP courses!

Advertisement

Apache Airflow® is the open-source standard to manage workflows as code. It is a versatile tool used in companies across the world from agile startups to tech giants to flagship enterprises across all industries. Due to its widespread adoption, Airflow knowledge is paramount to success in the field of data engineering.

databricks

JULY 22, 2024

Written in collaboration with Navin Sharma and Joe Pindell, Stardog. Across industries, the impact of post-delivery failure costs (recalls, warranty claims, lost goodwill.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Cloudera

JULY 22, 2024

Late last week, the tech world witnessed a significant disruption caused by a faulty update from CrowdStrike, a cybersecurity software company that focuses on protecting endpoints, cloud workloads, identity, and data. This update led to global IT outages, severely affecting various sectors such as banking, airlines, and healthcare. Many organizations found their systems rendered inoperative, highlighting the critical importance of system resilience and reliability.

Precisely

JULY 22, 2024

Key Takeaways: Use location and data APIs to boost app reliability and user satisfaction with accurate, up-to-date geographic information. Overcome common issues like failed deliveries and customer frustrations through advanced geo addressing solutions that enhance efficiency and data accuracy. Leverage Precisely APIs to not only correct data but also enrich applications with essential functions including identity verification and points of interest location. The demand for accurate and efficient l

Monte Carlo

JULY 22, 2024

Data doesn’t just flow – it floods in at breakneck speed. How do we track this tsunami of changes, ensure data integrity, and extract meaningful insights? Data versioning is the answer. It provides us with a systematic approach to tracking changes, ensuring data integrity, and enabling meaningful insights within today’s fluid and complex data environment.
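To make the idea concrete, here is a minimal sketch of data versioning. It is not taken from the linked article: the `VersionStore` class, its method names, and the sample rows are all hypothetical, and real systems add storage, diffs, and metadata on top. Each snapshot gets an id derived from its content, so changes can be tracked and stored bytes can be checked for integrity on read.

```python
import hashlib
import json


class VersionStore:
    """Hypothetical in-memory store that keeps every version of a dataset."""

    def __init__(self):
        self._snapshots = {}  # version id -> serialized rows
        self.history = []     # version ids in commit order, oldest first

    def commit(self, rows):
        """Store a snapshot and return its content-derived version id."""
        payload = json.dumps(rows, sort_keys=True).encode("utf-8")
        version_id = hashlib.sha256(payload).hexdigest()[:12]
        self._snapshots.setdefault(version_id, payload)
        # Record the commit unless it repeats the most recent version.
        if not self.history or self.history[-1] != version_id:
            self.history.append(version_id)
        return version_id

    def checkout(self, version_id):
        """Return the rows of a version after re-checking the stored bytes."""
        payload = self._snapshots[version_id]
        if hashlib.sha256(payload).hexdigest()[:12] != version_id:
            raise ValueError("snapshot is corrupted")
        return json.loads(payload)


store = VersionStore()
v1 = store.commit([{"id": 1, "city": "Oslo"}])
v2 = store.commit([{"id": 1, "city": "Oslo"}, {"id": 2, "city": "Lima"}])
```

Because the id is a hash of the content, committing identical data twice yields the same version, and `store.checkout(v1)` still returns the original rows after later commits.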

Cloudyard

JULY 22, 2024

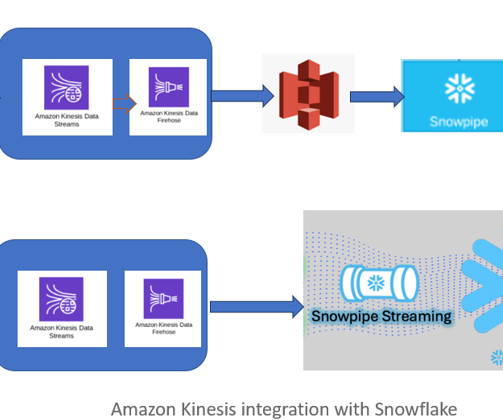

Previously, data engineers used Kinesis Firehose to transfer data into blob storage (S3) and then load it into Snowflake using either Snowpipe or batch processing. This introduced latency in the data pipeline for near real-time data processing. Now, Amazon Kinesis Data Firehose (Firehose) offers direct integration with Snowflake Snowpipe Streaming, eliminating the need to store data in an S3 bucket.

Speaker: Tamara Fingerlin, Developer Advocate

In this new webinar, Tamara Fingerlin, Developer Advocate, will walk you through many Airflow best practices and advanced features that can help you make your pipelines more manageable, adaptive, and robust. She'll focus on how to write best-in-class Airflow DAGs using the latest Airflow features like dynamic task mapping and data-driven scheduling!

Knowledge Hut

JULY 22, 2024

As a DevOps engineer, your responsibility is to bridge the gaps between software development, testing, and support. As a DevOps engineer, you will regularly manage, monitor, and optimize an IT project's who, what, where, and how. DevOps engineering is not an easy field to work in, but it is a well-paying position with a promising future. To get ready for the interview, you will need expertise with various software applications and experience collaborating with other software engineering dep

Edureka

JULY 22, 2024

Artifacts in DevOps not only help produce the final software but also help the team of developers by storing all the necessary elements in the artifacts repository, where the developers can easily find them and perform necessary operations (add, move, edit, or delete) on them. Thus, artifacts save developers the time they would otherwise spend finding and gathering resources from different places, improving their productivity.

Netflix Tech

JULY 22, 2024

By Jun He, Natallia Dzenisenka, Praneeth Yenugutala, Yingyi Zhang, and Anjali Norwood. TL;DR: We are thrilled to announce that the Maestro source code is now open to the public! Please visit the Maestro GitHub repository to get started. If you find it useful, please give us a star. What is Maestro? Maestro is a general-purpose, horizontally scalable workflow orchestrator designed to manage large-scale workflows such as data pipelines and machine learning model training pipelines.

Snowflake

JULY 22, 2024

A robust, modern data platform is the starting point for your organization’s data and analytics vision. At first, you may use your modern data platform as a single source of truth to realize operational gains, but you can realize far greater benefits by adding more use cases. In this blog, we offer guidance for leveraging Snowflake’s capabilities around data and AI to build apps and unlock innovation.

Advertisement

With over 30 million monthly downloads, Apache Airflow is the tool of choice for programmatically authoring, scheduling, and monitoring data pipelines. Airflow enables you to define workflows as Python code, allowing for dynamic and scalable pipelines suitable to any use case from ETL/ELT to running ML/AI operations in production. This introductory tutorial provides a crash course for writing and deploying your first Airflow pipeline.
