Mind the map: a new design for the London Underground map

ArcGIS

MAY 16, 2024

A modern take on the London tube map with updated accessible colours, a re-classification of lines by type, and line symbols scaled by frequency

KDnuggets

MAY 16, 2024

This guide introduces some key techniques in the feature engineering process and provides practical examples in Python.


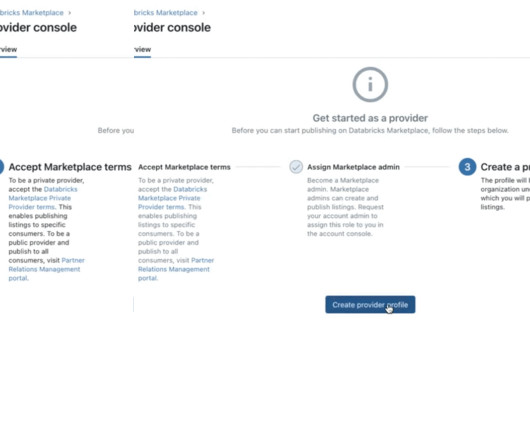

databricks

MAY 16, 2024

We are thrilled to announce an exciting new feature on the Databricks Marketplace that simplifies the process of setting up private exchanges for.

KDnuggets

MAY 16, 2024

Learn how to perform natural sorting in Python using the natsort library.
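To show what "natural sorting" means, here is a minimal standard-library sketch of the idea. It is not the natsort library's implementation or API. The `natural_key` helper is a hypothetical name used only for this illustration: it splits a string into text and digit runs and compares the digit runs as integers, so "file2" sorts before "file10".

```python
import re


def natural_key(text: str) -> list:
    """Hypothetical sort key: compare embedded digit runs by numeric value."""
    # re.split with a capturing group keeps the digit runs at odd indices,
    # e.g. "file10.txt" -> ["file", "10", ".txt"], so text and numbers
    # always line up at the same positions when two keys are compared.
    parts = re.split(r"(\d+)", text)
    return [int(p) if i % 2 else p.lower() for i, p in enumerate(parts)]


files = ["file10.txt", "file2.txt", "file1.txt"]
print(sorted(files))                   # plain lexicographic order
print(sorted(files, key=natural_key))  # natural (human) order
```

Plain `sorted` places "file10.txt" before "file2.txt" because it compares characters one at a time; the key function restores the order a person would expect. Libraries such as natsort handle many more cases (signs, decimals, locale rules) than this sketch.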

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

ArcGIS

MAY 16, 2024

This blog post walks you through the process of running multi-resolution deep learning over a range of cell sizes.

KDnuggets

MAY 16, 2024

Join Thomas Miller for an online information session to learn more about Northwestern's online graduate programs in Data Science.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Knowledge Hut

MAY 16, 2024

With the rapid growth of websites, online businesses, and the wider digital ecosystem, UIs must load quickly on smartphones to encourage mobile internet use. Facebook developed React Native out of a need to build UI elements efficiently, and that work became the basis of the open-source framework. Its cross-platform capabilities support application development for a wide range of platforms, including Android, Web, Windows, UWP, tvOS, macOS,

databricks

MAY 16, 2024

In the semiconductor industry, research and development tasks, manufacturing processes, and enterprise planning systems produce an array of data artifacts that can be fused to create an intelligent semiconductor enterprise. Through intelligent data use, an intelligent semiconductor enterprise accelerates time to market, increases manufacturing yield, and enhances product reliability.

Confluent

MAY 16, 2024

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Precisely

MAY 16, 2024

Key takeaways: The success of your AI initiatives hinges on the integrity of your data. Ensure your data is accurate, consistent, and contextualized to enable trustworthy AI systems that avoid biases, improve accuracy and reliability, and boost contextual relevance and nuance. Adopt strategic practices in data integration, quality management, governance, spatial analytics, and data enrichment.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Cloudyard

MAY 16, 2024

Read time: 1 minute, 33 seconds. Snowflake Dynamic Table and Alerts: This use case automates the monitoring of customer accessory purchases in Snowflake so the marketing team gets timely insights for personalized promotions. Imagine you're a Data Engineer/Data Governance developer for an online retail store, responsible for making sure the marketing team receives insights on customer accessory purchases so it can offer targeted promotions.

Knowledge Hut

MAY 16, 2024

Whether you work entirely in Node.js or use npm only as a front-end package manager or build tool, npm is an essential part of modern web development in any language or platform. For newcomers, its fundamental concepts can be hard to grasp. We spent a lot of time figuring out seemingly small nuances that others take for granted.

Striim

MAY 16, 2024

The ability to quickly understand and respond to customer demands is critical for staying ahead of the competition. Generative AI (GenAI) is quickly reshaping customer experiences across various sectors. It enables businesses to engage with their clients in real time, providing an unprecedented level of personalization and responsiveness. This innovative approach not only boosts customer satisfaction but also cultivates loyalty and encourages sustained interaction.

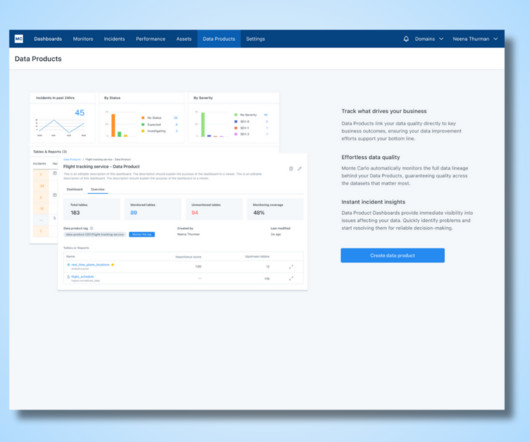

Monte Carlo

MAY 16, 2024

At what level does it make sense to deploy your data monitoring coverage? For most traditional data quality tools the answer has been by table. The typical workflow includes scanning or profiling a table, and then applying a number of suggested checks or rules. One by one by one by… That’s awfully tedious for any environment with thousands, hundreds, or even just dozens of tables.

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri
