5 Steps to Learn AI for Free in 2024

KDnuggets

MAY 10, 2024

Master AI with these free courses from Harvard, Google, AWS, and more.

Cloudera

MAY 10, 2024

Apache Iceberg is vital to the work we do and the experience that the Cloudera platform delivers to our customers. Iceberg, a high-performance open-source format for huge analytic tables, delivers the reliability and simplicity of SQL tables to big data while allowing for multiple engines like Spark, Flink, Trino, Presto, Hive, and Impala to work with the same tables, all at the same time.
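
Part of what lets several engines share one Iceberg table safely is optimistic concurrency. A writer prepares new table metadata and then asks the catalog to atomically swap the table's current-metadata pointer. The swap fails if another writer committed first, and the writer then retries against the new state. The toy model below shows only that compare-and-swap idea in plain Python. `Catalog`, `Snapshot`, and `append_file` are hypothetical names, not Iceberg's API.

```python
import threading
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """Immutable table state: a version number plus the data files it references."""
    version: int
    files: tuple


@dataclass
class Catalog:
    """Hypothetical catalog holding the pointer to the table's current snapshot."""
    current: Snapshot = field(default_factory=lambda: Snapshot(0, ()))
    _lock: threading.Lock = field(default_factory=threading.Lock)

    def commit(self, base: Snapshot, new: Snapshot) -> bool:
        # Atomic compare-and-swap: succeed only if no other writer has moved
        # the pointer since `base` was read.
        with self._lock:
            if self.current is not base:
                return False
            self.current = new
            return True


def append_file(catalog: Catalog, path: str, max_retries: int = 10) -> Snapshot:
    """Optimistically append one data file, retrying against the latest state on conflict."""
    for _ in range(max_retries):
        base = catalog.current                                   # read current state
        new = Snapshot(base.version + 1, base.files + (path,))   # build the next state
        if catalog.commit(base, new):                            # try to publish it
            return new
    raise RuntimeError("too many concurrent commit conflicts")


if __name__ == "__main__":
    catalog = Catalog()
    engines = ["spark", "flink", "trino", "hive"]
    threads = [threading.Thread(target=append_file, args=(catalog, f"{e}.parquet"))
               for e in engines]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(catalog.current.version, sorted(catalog.current.files))
```

Readers always follow the pointer to one complete snapshot, so they never observe a half-finished write. A real catalog performs the swap in a metastore or catalog service rather than with an in-process lock.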

KDnuggets

MAY 10, 2024

Get ready for an exciting journey into how AI is changing the tech world!

Knowledge Hut

MAY 10, 2024

As of the beginning of January 2022, India had recognized more than 61,000 startups, giving it the third-largest startup ecosystem after the US and China. The government of India runs an initiative called Startup India, whose sole purpose is to foster a startup culture and build an ecosystem for entrepreneurship and innovation. As a result, the Indian startup ecosystem has emerged as a major growth engine for the country over the past few years, and India aims to become a global tech powerhouse.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

Confluent

MAY 10, 2024

Discover how to build trust in AI by strengthening data and people layers. Learn about risk frameworks, data streaming, and more for effective solutions.

Scott Logic

MAY 10, 2024

In this episode, I’m joined by Jess McEvoy and Peter Chamberlin, who have both spent many years in senior roles within public sector organisations. Our conversation covers the excitement and concerns around AI, both from a citizen’s perspective and for those building public services. We discuss the UK government’s approach to addressing AI challenges with its pro-innovation mantra, and whether this creates the right environment for success.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Confluent

MAY 10, 2024

Find out how Ariel Gan rose from sales development representative to regional director over his career in B2B tech sales at Confluent.

DataKitchen

MAY 10, 2024

The Five Use Cases in Data Observability: Effective Data Anomaly Monitoring (#2)

Ensuring the accuracy and timeliness of data ingestion is a cornerstone of maintaining the integrity of data systems. Data ingestion monitoring, a critical aspect of Data Observability, plays a pivotal role by providing continuous updates and ensuring that high-quality data feeds into your systems.
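
Here is a concrete form of ingestion monitoring. The sketch applies two common checks to the most recent batch of a feed. The first is freshness: did the batch arrive recently enough? The second is volume: is its row count close to the recent average? The `Batch` record, the one-hour window, and the 50% tolerance are hypothetical choices, not part of DataKitchen's tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean


@dataclass
class Batch:
    """One ingested load: when it arrived and how many rows it carried (hypothetical record)."""
    arrived_at: datetime
    row_count: int


def check_ingestion(history: list[Batch], now: datetime,
                    max_age: timedelta = timedelta(hours=1),
                    tolerance: float = 0.5) -> list[str]:
    """Return human-readable alerts for the most recent batch in `history`."""
    if not history:
        return ["no batches received"]
    alerts = []
    latest = history[-1]
    # Freshness: the newest batch must have arrived within `max_age`.
    if now - latest.arrived_at > max_age:
        alerts.append(f"stale feed: last batch at {latest.arrived_at.isoformat()}")
    # Volume: compare the newest row count with the mean of the earlier batches.
    previous = [b.row_count for b in history[:-1]]
    if previous:
        baseline = mean(previous)
        low, high = baseline * (1 - tolerance), baseline * (1 + tolerance)
        if not low <= latest.row_count <= high:
            alerts.append(f"row count {latest.row_count} outside [{low:.0f}, {high:.0f}]")
    return alerts


if __name__ == "__main__":
    now = datetime(2024, 5, 10, 12, 0, tzinfo=timezone.utc)
    history = [Batch(now - timedelta(hours=h), n) for h, n in [(3, 1000), (2, 1050), (1, 980)]]
    history.append(Batch(now - timedelta(minutes=10), 120))  # suspiciously small load
    print(check_ingestion(history, now))
```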

Knowledge Hut

MAY 10, 2024

When one ventures into the professional world, it is natural to be overwhelmed by the many job options available. Service-based businesses across industries offer broad employment opportunities for educated individuals whose skills and knowledge they require. A service-oriented business places the utmost importance on the quality of service it delivers to its customers.

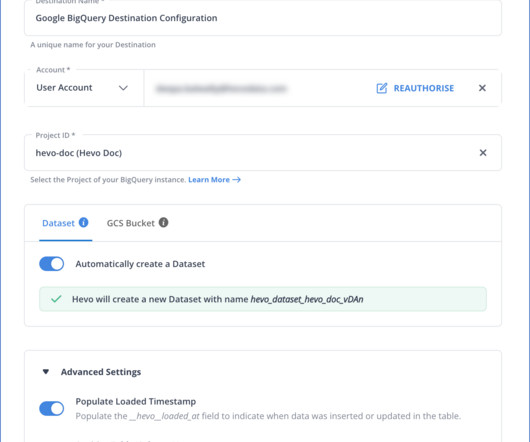

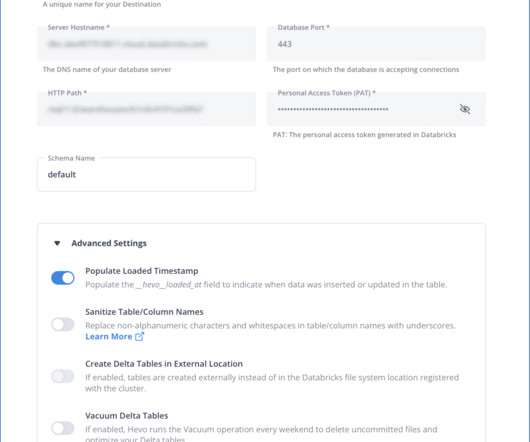

Hevo

MAY 10, 2024

Analyzing vast volumes of data can be challenging. Google BigQuery is a powerful tool that enables you to store, process, and analyze large datasets with ease. However, it may not provide all of the functionality and tools needed for complex analysis. This is where Databricks steps in.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Knowledge Hut

MAY 10, 2024

Django is a Python web framework that allows you to create interactive websites and applications. You can easily build Python web applications with Django and rely on the framework to do much of the heavy lifting for you. Ubuntu provides a secure and stable environment with extensive support for Python and its dependencies, making it an ideal platform for Django development.

Hevo

MAY 10, 2024

Organizations often manage operational data using open-source databases like MySQL, frequently deployed on local machines. To enhance data management and security, many organizations prefer deploying these databases on cloud providers like AWS, Azure, or Google Cloud Platform (GCP).

DataKitchen

MAY 10, 2024

The Five Use Cases in Data Observability: Ensuring Data Quality in New Data Sources (#1)

Introduction to Data Evaluation in Data Observability

Ensuring the quality and integrity of new data sources before incorporating them into production is paramount. Data evaluation serves as a safeguard, ensuring that only cleansed and reliable data makes its way into your systems and thus maintaining the overall health of your data ecosystem.
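
The sketch below shows checks that data evaluation might run on a new source before it reaches production. It confirms that required columns are present, that null rates stay under a threshold, and that no business key is duplicated. The row format (a list of dicts), the 5% default threshold, and `evaluate_source` itself are assumptions for illustration.

```python
from collections import Counter


def evaluate_source(rows: list[dict], required: set[str], key: str,
                    max_null_rate: float = 0.05) -> dict[str, list[str]]:
    """Profile a candidate data source and return the problems found, grouped by check."""
    problems: dict[str, list[str]] = {"schema": [], "nulls": [], "duplicates": []}
    if not rows:
        problems["schema"].append("source is empty")
        return problems
    # Schema: every required column should appear in every row.
    for col in sorted(required):
        missing = sum(1 for r in rows if col not in r)
        if missing:
            problems["schema"].append(f"{col} missing in {missing} row(s)")
    # Completeness: null rate per required column (missing keys also count as null).
    for col in sorted(required):
        nulls = sum(1 for r in rows if r.get(col) is None)
        rate = nulls / len(rows)
        if rate > max_null_rate:
            problems["nulls"].append(f"{col} null rate {rate:.0%}")
    # Uniqueness: the business key must not repeat.
    counts = Counter(r.get(key) for r in rows)
    problems["duplicates"] = sorted(str(k) for k, n in counts.items() if n > 1)
    return problems


if __name__ == "__main__":
    candidate = [{"id": 1, "email": "a@x.com"}, {"id": 2, "email": None},
                 {"id": 2, "email": "b@x.com"}, {"id": 3}]
    report = evaluate_source(candidate, {"id", "email"}, key="id")
    print("admit to production" if not any(report.values()) else report)
```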

Hevo

MAY 10, 2024

GCP Postgres is a fully managed database service that excels at managing relational data. Databricks, on the other hand, is a unified analytics service that offers effective tools for data engineering, data science, and machine learning. You can integrate data from GCP Postgres to Databricks to leverage the combined strengths of both platforms.

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri

DataKitchen

MAY 10, 2024

The Five Use Cases in Data Observability: Mastering Data Production (#3)

Managing the production phase of data analytics is a daunting challenge. Overseeing multi-tool, multi-dataset, and multi-hop data processes ensures high-quality outputs. This blog explores the third of five critical use cases for Data Observability and Quality Validation (Data Production), highlighting how DataKitchen’s Open-Source Data Observability solutions empower organizations to manage this critical s

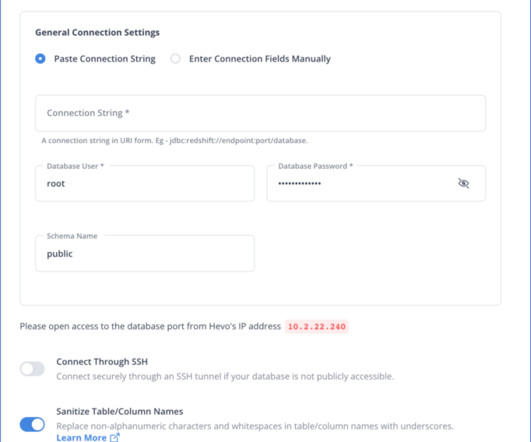

Hevo

MAY 10, 2024

Data migration from one instance of a data warehouse to another is essential to consider if you want to optimize cost, improve performance, and consolidate operations in a single place. Amazon Redshift is a cloud data warehousing service that allows you to deploy your application while securely storing your data.

DataKitchen

MAY 10, 2024

The Five Use Cases in Data Observability: Fast, Safe Development and Deployment (#4)

The integrity and functionality of new code, tools, and configurations during the development and deployment stages are crucial. This blog post delves into the fourth critical use case for Data Observability and Data Quality Validation: Development and Deployment.
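
A regression check is one common safeguard during development and deployment. You run the current and candidate versions of a transformation on the same fixture data and block the release if their outputs differ. In the sketch below, `transform_v1`, `transform_v2`, and `diff_outputs` are hypothetical stand-ins. The candidate's extra normalization step is an assumed example of an unintended behavior change.

```python
def transform_v1(rows):
    """Current production logic (hypothetical): total amount per region."""
    totals = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0) + r["amount"]
    return totals


def transform_v2(rows):
    """Candidate refactor (hypothetical) that is supposed to produce identical results."""
    totals = {}
    for r in rows:
        region = r["region"].strip().upper()   # new normalization step
        totals[region] = totals.get(region, 0) + r["amount"]
    return totals


def diff_outputs(old: dict, new: dict) -> dict:
    """Return the keys that were added, removed, or changed between two result sets."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    fixture = [{"region": "EU", "amount": 10}, {"region": "eu ", "amount": 5},
               {"region": "US", "amount": 7}]
    diff = diff_outputs(transform_v1(fixture), transform_v2(fixture))
    if any(diff.values()):
        print("block deployment:", diff)
    else:
        print("outputs identical; safe to promote")
```

A real pipeline would run the same comparison in CI against a representative fixture dataset before promoting the change.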

Hevo

MAY 10, 2024

With increasing data volumes available from various sources, there is a rise in the demand for relational databases with improved scalability and performance for managing this data. Google Cloud MySQL (GCP MySQL) is one such reliable platform that caters to these needs by efficiently storing and managing data.

Advertisement

With Airflow being the open-source standard for workflow orchestration, knowing how to write Airflow DAGs has become an essential skill for every data engineer. This eBook provides a comprehensive overview of DAG writing features with plenty of example code. You’ll learn how to: understand the building blocks of DAGs, combine them in complex pipelines, and schedule your DAG to run exactly when you want it to; write DAGs that adapt to your data at runtime and set up alerts and notifications; scale you

DataKitchen

MAY 10, 2024

The post The Five Use Cases in Data Observability: Ensuring Accuracy in Data Migration first appeared on DataKitchen.
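
Checking migration accuracy usually starts with reconciling each table between source and target. The sketch below uses two in-memory SQLite databases as stand-ins for the old and new systems. It compares row counts plus an order-independent checksum of every row. The function names and the choice of SHA-256 over `repr()` of each row are illustrative assumptions, not DataKitchen's method.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str) -> tuple[int, str]:
    """Return (row count, order-independent SHA-256 digest) for all rows in `table`."""
    # The table name is interpolated into the SQL, so pass only trusted names.
    rows = conn.execute(f"SELECT * FROM {table}").fetchall()
    # Hash each row on its own, then sort the hashes so physical row order is ignored.
    # repr() keeps value types, so an int that became a float counts as a difference.
    row_hashes = sorted(hashlib.sha256(repr(row).encode()).hexdigest() for row in rows)
    digest = hashlib.sha256("".join(row_hashes).encode()).hexdigest()
    return len(rows), digest


def reconcile(source: sqlite3.Connection, target: sqlite3.Connection, table: str) -> dict:
    """Compare one table between the source and target databases."""
    src_count, src_digest = table_fingerprint(source, table)
    dst_count, dst_digest = table_fingerprint(target, table)
    return {
        "table": table,
        "source_rows": src_count,
        "target_rows": dst_count,
        "match": src_count == dst_count and src_digest == dst_digest,
    }


if __name__ == "__main__":
    source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    rows = [(1, 9.5), (2, 3.0), (3, 12.25)]
    for conn, ordered in ((source, rows), (target, list(reversed(rows)))):
        conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", ordered)
    print(reconcile(source, target, "orders"))   # same rows, different order
    target.execute("DELETE FROM orders WHERE id = 3")
    print(reconcile(source, target, "orders"))   # a row was lost in migration
```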

Hevo

MAY 10, 2024

With Google Cloud Platform (GCP) MySQL, businesses can manage relational databases with more stability and scalability. GCP MySQL provides dependable data storage and effective query processing. However, enterprises can run into constraints with GCP MySQL, such as agility and scalability issues, performance constraints, and manual resource management requirements.

Knowledge Hut

MAY 10, 2024

In today’s dynamic business environment, established organizations aggressively seek to upgrade or change their practices, while startups aim to adopt best practices from the outset. Both rely on projects to accomplish their objectives. So, what is a project in this dynamic business environment? Projects are, in short, vehicles of change.

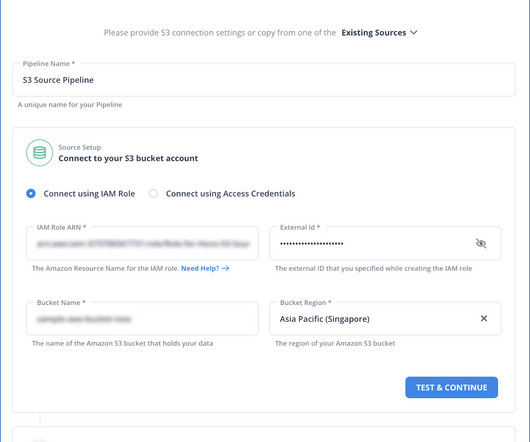

Hevo

MAY 10, 2024

Amazon S3 is a prominent data storage platform with multiple storage and security features. Integrating data stored in Amazon S3 into a data warehouse like Databricks can enable better data-driven decisions. Because Databricks offers a collaborative environment, you can quickly and cost-effectively build machine-learning applications with your team.

Speaker: Ben Epstein, Stealth Founder & CTO | Tony Karrer, Founder & CTO, Aggregage

When tasked with building a fundamentally new product line with deeper insights than previously achievable for a high-value client, Ben Epstein and his team faced a significant challenge: how to harness LLMs to produce consistent, high-accuracy outputs at scale. In this new session, Ben will share how he and his team engineered a system (based on proven software engineering approaches) that employs reproducible test variations (via temperature 0 and fixed seeds), and enables non-LLM evaluation m

Hevo

MAY 10, 2024

MongoDB is a cross-platform, document-oriented NoSQL database that stores data as JSON-like documents. JDBC, on the other hand, is a Java application programming interface (API) for connecting to a database and executing queries against it. With a suitable driver, JDBC lets you access virtually any tabular data source, from relational databases to spreadsheets and flat files.

Hevo

MAY 10, 2024

Companies use Data Warehouses to store and analyze their business data to make data-driven business decisions. Querying huge volumes of data to reach a specific piece of information can be challenging if the queries are not optimized or the data is not well organized.
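
The effect of organizing data for a query shows up even in a small embedded database. The sketch below uses Python's built-in `sqlite3` module rather than a cloud warehouse. Once an index exists on the filtered column, the query planner switches from a full table scan to an index lookup. Warehouses apply the same idea through partitioning, clustering, or sort keys rather than B-tree indexes. The table, column, and index names are made up for the example.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail lines joined into one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return "; ".join(row[-1] for row in rows)   # the last column holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, i * 0.5) for i in range(10_000)],
)

lookup = "SELECT SUM(amount) FROM orders WHERE customer_id = ?"
before = query_plan(conn, lookup, (42,))   # no index yet: SQLite must scan the table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, lookup, (42,))    # the planner can now seek via the index

print("before:", before)
print("after: ", after)
```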

Hevo

MAY 10, 2024

Azure MySQL is a MySQL service managed by Microsoft. It is a cost-effective relational data management platform that handles transactional workloads. However, it has limited scalability and analytics features. This is where Google BigQuery can be a suitable option, since BigQuery can handle petabyte-scale data.

Hevo

MAY 10, 2024

With more and more software running in the cloud, many companies prefer to move their on-premises data to a managed database service such as Azure MySQL. Integrating this data with a cloud analytics platform like Databricks can enable organizations to produce efficient results through data modeling.

Advertisement

Apache Airflow® is the open-source standard to manage workflows as code. It is a versatile tool used in companies across the world from agile startups to tech giants to flagship enterprises across all industries. Due to its widespread adoption, Airflow knowledge is paramount to success in the field of data engineering.

Hevo

MAY 10, 2024

In today’s data-rich world, businesses must select the right data storage and analysis platform. For many, Heroku PostgreSQL has long been a trusted solution, offering a reliable relational database service in the cloud.

Hevo

MAY 10, 2024

Organizations accumulate vast volumes of information from various sources. This data includes customer transactions, financial records, social media interactions, sensor readings, and more. Effective management and utilization of data are crucial to gaining insights, improving decision-making, and achieving business objectives. An enterprise data repository (EDR) plays a vital role in managing high-volume data.

Hevo

MAY 10, 2024

Data visualization has become one of the most critical skills companies require to generate insightful reports. Visualizing data with the help of a programming language can take a lot of time, so organizations prefer to use data visualization tools to achieve fast and efficient results.

Hevo

MAY 10, 2024

Companies use Data Warehouses to store all their business data from multiple data sources in one place to run analysis and generate valuable insights from it.

Speaker: Tamara Fingerlin, Developer Advocate

In this new webinar, Tamara Fingerlin, Developer Advocate, will walk you through many Airflow best practices and advanced features that can help you make your pipelines more manageable, adaptive, and robust. She'll focus on how to write best-in-class Airflow DAGs using the latest Airflow features like dynamic task mapping and data-driven scheduling!
