5 Simple Steps to Automate Data Cleaning with Python

KDnuggets

MAY 3, 2024

Automate your data cleaning process with a practical 5-step pipeline in Python, ideal for beginners.

databricks

MAY 3, 2024

Moving generative AI applications from the proof of concept stage into production requires control, reliability and data governance. Organizations are turning to open.

Knowledge Hut

MAY 3, 2024

Agile methodology is a simple, flexible, and iterative product development model with the distinct advantages of accommodating new requirement changes and incorporating the feedback of the previous iterations over the traditional waterfall development model. Agile methodology is the most popular and dynamic software product development and project maintenance model.

Towards Data Science

MAY 3, 2024

A deep dive into the various SCD types and how they can be implemented in Data Warehouses.

Speaker: Jason Chester, Director, Product Management

In today’s manufacturing landscape, staying competitive means moving beyond reactive quality checks and toward real-time, data-driven process control. But what does true manufacturing process optimization look like—and why is it more urgent now than ever? Join Jason Chester in this new, thought-provoking session on how modern manufacturers are rethinking quality operations from the ground up.

Knowledge Hut

MAY 3, 2024

Any coding interview is a test that primarily focuses on your technical skills and algorithm knowledge. However, if you want to stand out among the hundreds of interviewees, you should know how to use the common functionalities of Python in a convenient manner. Before moving ahead, read about Self in Python and what is markdown! The type of interview you might face can be a remote coding challenge, a whiteboard challenge or a full-day on-site interview.

KDnuggets

MAY 3, 2024

Start a new career with Meta’s Data Analyst Certification and be job-ready in 5 months or less!

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Cloudera

MAY 3, 2024

One of the worst-kept secrets among data scientists and AI engineers is that no one starts a new project from scratch. In the age of information there are thousands of examples available when starting a new project. As a result, data scientists will often begin a project by developing an understanding of the data and the problem space and will then go out and find an example that is closest to what they are trying to accomplish.

Knowledge Hut

MAY 3, 2024

Organizations deal with lots of data regularly. But if you cannot access or connect with that important data, you gain nothing from it, and you keep your organization from getting its value. Practical Uses of Power BI: Microsoft Power BI helps you solve this problem with a powerful business intelligence tool that mainly focuses on visualization.

Knowledge Hut

MAY 3, 2024

Machine Learning is an interdisciplinary field of study and is a sub-domain of Artificial Intelligence. It gives computers the ability to learn and infer from a huge amount of homogeneous data, without having to be programmed explicitly. Before delving into this article, let's learn more about the meaning of R-squared. Types of Machine Learning: Machine Learning can broadly be classified into three types: Supervised Learning: If the available dataset has predefined features and labels, on which

Speaker: Kenten Danas, Senior Manager, Developer Relations

ETL and ELT are some of the most common data engineering use cases, but can come with challenges like scaling, connectivity to other systems, and dynamically adapting to changing data sources. Airflow is specifically designed for moving and transforming data in ETL/ELT pipelines, and new features in Airflow 3.0 like assets, backfills, and event-driven scheduling make orchestrating ETL/ELT pipelines easier than ever!

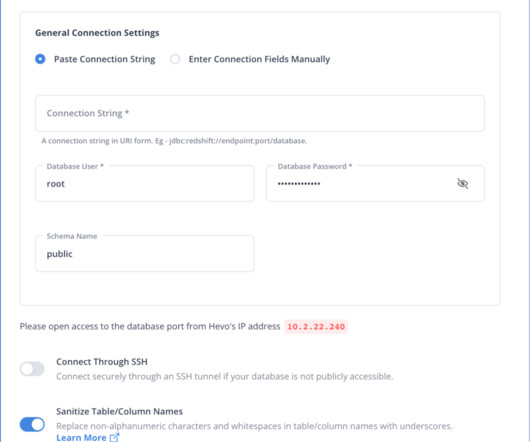

Hevo

MAY 3, 2024

Data integration is an essential task in most organizations. The reason is that many organizations are generating huge volumes of data. This data is not always stored in a single location, but in different locations including in on-premise databases and in the cloud.

Knowledge Hut

MAY 3, 2024

Introduction: Before getting into the fundamentals of Apache Spark, let’s understand what ‘Apache Spark’ really is. The following is the authentic one-line definition: Apache Spark is a fast and general-purpose cluster computing system. You will find multiple definitions when you search for the term Apache Spark. All of them give a similar gist, just in different words.

Hevo

MAY 3, 2024

Insights generation from in-house data has become one of the most critical steps for any business. Integrating data from a database into a data warehouse enables companies to obtain essential factors influencing their operations and understand patterns that can boost business performance.

FreshBI

MAY 3, 2024

For businesses that derive their revenue from Manufacturing or Distribution, the choices for ERP include MS Dynamics 365 Biz Central, SAP Biz One Pro, SYSPRO, Netsuite, and Acumatica. The purpose of this blog is to provide an example of how a manufacturing operation can use Business Intelligence (BI), anchored in its economic engine, to inform the ERP selection process.

Hevo

MAY 3, 2024

Data is a powerful tool for organizational success today. When used effectively, it provides valuable insights into everyday operations to maximize business value. However, businesses may face data storage and processing challenges in a data-rich world.

Monte Carlo

MAY 3, 2024

It’s 2024, and the data estate has changed. Data systems are more diverse. Architectures are more complex. And with the acceleration of AI, that’s not changing any time soon. But even though the data landscape is evolving, many enterprise data organizations are still managing data quality the “old” way: with simple data quality monitoring. The basics haven’t changed: high-quality data is still critical to successful business operations.

Hevo

MAY 3, 2024

Imagine you are managing a rapidly growing e-commerce platform. That platform generates a large amount of data related to transactions, customer interactions, product details, feedback, and more. Azure Database for MySQL can efficiently handle your transactional data.

Cloudyard

MAY 3, 2024

Last week, I introduced a stored procedure called DYNAMIC_MERGE, which dynamically retrieved column names from a staging table and used them to construct a MERGE INTO statement. While this approach offered flexibility, it had a limitation: the HASH condition used static column names, which limited the procedure’s adaptability across different tables.
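The idea in this teaser, building the HASH comparison from the column list instead of hard-coding it, can be sketched in Python. This is only an illustration and not the author's DYNAMIC_MERGE procedure: the function name `build_merge_sql`, the table names, and the column names are hypothetical, and `HASH` stands in for whatever hash function the target database provides.

```python
def build_merge_sql(target, staging, key_cols, all_cols):
    """Assemble a MERGE INTO statement whose HASH condition is derived
    from the column list rather than from static column names.

    Assumptions: at least one non-key column exists, and all names are
    trusted identifiers (no quoting or escaping is done here).
    """
    # Columns compared and updated are all columns except the join keys.
    data_cols = [c for c in all_cols if c not in key_cols]

    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_cols)
    target_hash = "HASH(" + ", ".join(f"t.{c}" for c in data_cols) + ")"
    staging_hash = "HASH(" + ", ".join(f"s.{c}" for c in data_cols) + ")"
    set_clause = ", ".join(f"{c} = s.{c}" for c in data_cols)
    insert_cols = ", ".join(all_cols)
    insert_vals = ", ".join(f"s.{c}" for c in all_cols)

    return (
        f"MERGE INTO {target} t USING {staging} s ON {on_clause} "
        f"WHEN MATCHED AND {target_hash} <> {staging_hash} "
        f"THEN UPDATE SET {set_clause} "
        f"WHEN NOT MATCHED THEN INSERT ({insert_cols}) VALUES ({insert_vals})"
    )


# Hypothetical tables and columns, used only to show the generated text.
sql = build_merge_sql(
    target="customers",
    staging="customers_stg",
    key_cols=["id"],
    all_cols=["id", "name", "email"],
)
print(sql)
```

Because both HASH expressions come from the same column list, the same function can be reused for any table pair whose column names are known at run time.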

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

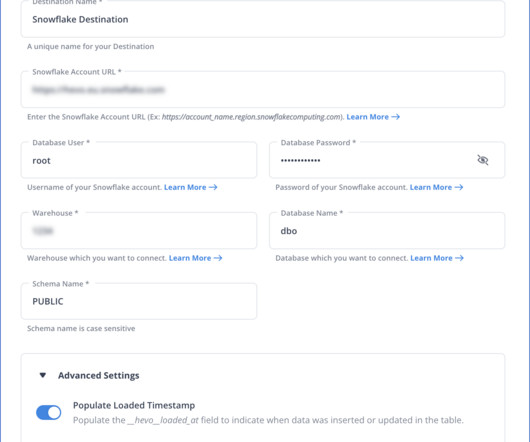

Hevo

MAY 3, 2024

In today’s digital era, businesses continually look for ways to manage their data assets. Azure Database for MySQL is a robust storage solution that manages relational data. However, as your business grows and data becomes more complex, managing and analyzing it becomes more challenging. This is where Snowflake comes in.

Knowledge Hut

MAY 3, 2024

Whenever you visit a pharmacy and ask for a particular medicine, have you noticed something? It hardly takes any time for the pharmacist to find it among several medicines. This is because all the items are arranged in a certain fashion which helps them know the exact place to look for. They may be arranged in alphabetical order or according to their category such as ophthalmic or neuro or gastroenterology and so on.

Hevo

MAY 3, 2024

If your organization is data-driven, it is important to understand your data’s origin, movement, and transformation. This imparts transparency within your organization, ensures data integrity, and enables informed decision-making. You can use data lineage for this.

Knowledge Hut

MAY 3, 2024

One of the most important decisions for Big data learners or beginners is choosing the best programming language for big data manipulation and analysis. Understanding business problems and choosing the right model is not enough; implementing them well is equally important, and choosing the right language (or languages) for solving the problem goes a long way.

Advertisement

In Airflow, DAGs (your data pipelines) support nearly every use case. As these workflows grow in complexity and scale, efficiently identifying and resolving issues becomes a critical skill for every data engineer. This is a comprehensive guide with best practices and examples for debugging Airflow DAGs. You’ll learn how to: create a standardized process for debugging to quickly diagnose errors in your DAGs; identify common issues with DAGs, tasks, and connections; distinguish between Airflow-relate

Hevo

MAY 3, 2024

Most organizations today practice a data-driven culture, emphasizing the importance of evidence-based decisions. You can also utilize the data available about your organization to perform various analyses and make data-informed decisions, contributing towards sustainable business growth.

Knowledge Hut

MAY 3, 2024

The sequence is one of the most basic data types in Python. Every element of a sequence is assigned a unique number called its position or index. The first index is zero, the second index is one, and so forth. Although Python comes with six types of built-in sequences, the most used ones are lists and tuples, and in this article, we will discuss lists and their methods.
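The zero-based indexing described in this teaser can be seen in a minimal sketch. The list contents are made up for illustration and are not taken from the article.

```python
# Illustrative list; the values are invented for this example.
languages = ["Python", "SQL", "Scala", "R"]

first = languages[0]   # index 0 is the first element
second = languages[1]  # index 1 is the second element
last = languages[-1]   # negative indices count from the end

# enumerate pairs each element with its position in the sequence.
positions = list(enumerate(languages))

# Tuples are sequences too and are indexed the same way.
point = (3, 4)
x = point[0]

print(first, second, last)
print(positions)
```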

Hevo

MAY 3, 2024

Cloud solutions like AWS RDS for Oracle offer improved accessibility and robust security features. However, as data volumes grow, analyzing data on the AWS RDS Oracle database through multiple SQL queries can lead to inconsistency and performance degradation.

Knowledge Hut

MAY 3, 2024

What is Tableau? Tableau is a business intelligence and data visualization software. It can create interactive visualizations, dashboards, and reports from any data. Tableau is available in both cloud and desktop versions. The cloud version is subscription-based, while the desktop version is a one-time purchase. Tableau has been recognized as the leading BI and data visualization tool by Forbes, Fortune, and Gartner.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Hevo

MAY 3, 2024

While AWS RDS Oracle offers a robust relational database solution over the cloud, Databricks simplifies big data processing with features such as automated scheduling and optimized Spark clusters. Integrating data from AWS RDS Oracle to Databricks enables you to handle large volumes of data within a collaborative workspace to derive actionable insights in real-time.

Knowledge Hut

MAY 3, 2024

Power BI is a business analytics service by Microsoft that provides users with Data Visualization and Business Intelligence tools through a simple interface, so that end users can create reports and dashboards of their own. Microsoft Power BI Course helps to find insights within the data of an organisation. It converts data from various data sources into interactive BI reports and dashboards: it forms different data models and creates graphs and charts which depict visuals of the d

Hevo

MAY 3, 2024

Many organizations today heavily rely on data to make business-related decisions. Data is an invaluable asset that helps you substantiate your convictions with evidence and facilitates stakeholder buy-in. However, ensuring your data is of high quality is paramount as it directly correlates to the accuracy of the desired results.

Knowledge Hut

MAY 3, 2024

Messy handwriting is difficult to understand; similarly, unreadable and unstructured code is not accepted by everyone. As a programmer, you benefit only when you can express yourself clearly in your code. This is where PEP comes to the rescue. A Python Enhancement Proposal, or PEP, is a design document that provides information to the Python community, describes new features, and documents aspects such as style and design for Python.

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri
