Free Courses That Are Actually Free: Data Analytics Edition

KDnuggets

SEPTEMBER 11, 2024

Kickstart your data analyst career with all these free courses.

databricks

SEPTEMBER 11, 2024

We are excited to announce that Databricks was named one of the 2024 Fortune Best Workplaces in Technology™. This award reflects our.

KDnuggets

SEPTEMBER 11, 2024

Access a pre-built Python environment with free GPUs, persistent storage, and large RAM. These Cloud IDEs include AI code assistants and numerous plugins for a fast and efficient development experience.

databricks

SEPTEMBER 11, 2024

Personal Access Tokens (PATs) are a convenient way to access services like Azure Databricks or Azure DevOps without logging in with your password.
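In practice, a Databricks PAT is sent as a bearer token in the HTTP `Authorization` header in place of a password. Below is a minimal sketch that builds, but does not send, such a request using only the standard library. The workspace URL, the `DATABRICKS_TOKEN` environment variable name, and the helper `build_databricks_request` are illustrative assumptions. Azure DevOps PATs are sent differently and are not covered here.

```python
import os
import urllib.request


def build_databricks_request(workspace_url: str, token: str, path: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated GET request to a Databricks REST endpoint.

    Hypothetical helper for illustration only.
    """
    url = workspace_url.rstrip("/") + path
    # The PAT travels as a bearer token instead of a username/password login
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


if __name__ == "__main__":
    # Hypothetical workspace URL; read the token from the environment rather than hard-coding it
    req = build_databricks_request(
        "https://adb-1234567890123456.7.azuredatabricks.net",
        os.environ.get("DATABRICKS_TOKEN", "dapi-placeholder"),
        "/api/2.0/clusters/list",
    )
    print(req.full_url)
    # urllib.request.urlopen(req) would actually send the request
```

Keeping the token in an environment variable (or a secrets manager) avoids committing it to source control.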

Advertisement

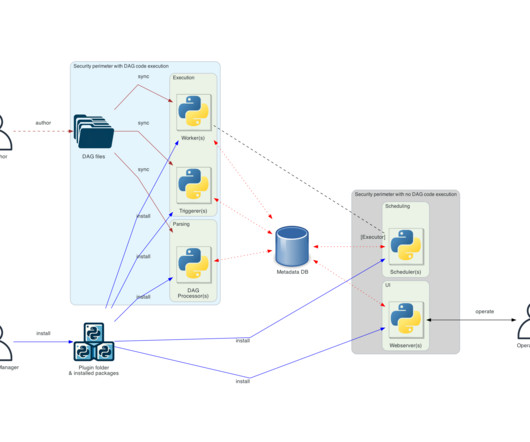

In Airflow, DAGs (your data pipelines) support nearly every use case. As these workflows grow in complexity and scale, efficiently identifying and resolving issues becomes a critical skill for every data engineer. This is a comprehensive guide, with best practices and examples, to debugging Airflow DAGs. You’ll learn how to: create a standardized debugging process to quickly diagnose errors in your DAGs; identify common issues with DAGs, tasks, and connections; and distinguish between Airflow-relate

KDnuggets

SEPTEMBER 11, 2024

Learn how to create a data science pipeline with a complete structure.

Striim

SEPTEMBER 11, 2024

Data pipelines are the backbone of your business’s data architecture. Implementing a robust and scalable pipeline ensures you can effectively manage, analyze, and organize your growing data. Most importantly, these pipelines enable your team to transform data into actionable insights, demonstrating tangible business value. According to an IBM study, businesses expect that fast data will enable them to “make better informed decisions using insights from analytics (44%), improved data quality and

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Towards Data Science

SEPTEMBER 11, 2024

One answer, and many best practices, for how larger organizations can operationalize data quality programs for modern data platforms: an answer to “who does what” for enterprise data quality. I’ve spoken with dozens of enterprise data professionals at the world’s largest corporations, and one of the most common data quality questions is, “who does what?”

Tweag

SEPTEMBER 11, 2024

We’ve all been there: wasting a couple of days on a silly bug. Good news for you: formal methods have never been easier to leverage. In this post, I will discuss the contributions I made during my internship to Liquid Haskell (LH), a tool that makes proving that your Haskell code is correct a piece of cake. LH lets you write contracts for your functions inside your Haskell code.

Confluent

SEPTEMBER 11, 2024

To make application testing for topics with schemas easier, you can now produce messages that are serialized with schemas using the Confluent Cloud Console UI.

Scott Logic

SEPTEMBER 11, 2024

In February of this year, Scott Logic announced our proposed Technology Carbon Standard, setting out an approach to describing an organisation’s technology footprint. This standard has proven invaluable in mapping our own carbon footprint, as well as those of clients we’ve worked with. As awareness of the environmental impact of digital infrastructure grows, it has become crucial to understand and manage technology-related emissions.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

Cloudera

SEPTEMBER 11, 2024

Imagine a world where your sensitive data moves effortlessly between clouds – secure, private, and far from the prying eyes of the public internet. Today, we’re making that world a reality with the launch of Cloudera Private Link Network. Organizations are continuously seeking ways to enhance their data security. One of the challenges is ensuring that data remains protected as it traverses different cloud environments.

Hevo

SEPTEMBER 11, 2024

ETL tools have become essential for efficiently integrating data. In this blog, we compare Fivetran and AWS Glue, two influential ETL tools on the market, covering each product’s features, pricing models, and real-world use cases to help you choose the right solution.

Cloudyard

SEPTEMBER 11, 2024

In this use case, a financial services company has decided to migrate its data warehouse from Oracle to Snowflake. The migration involves not only moving the data from Oracle to Snowflake but also replicating all views in Snowflake. After successfully migrating several views, the data engineering team noticed discrepancies between the Oracle view definitions and their Snowflake counterparts.
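One rough way to surface such discrepancies is to normalize both view definitions and diff them token by token. The sketch below is an assumption, not the approach from the original post: the helpers `normalize_sql` and `view_diff` and the two sample definitions are hypothetical, and the whitespace-and-case normalization is only a first pass (it also upper-cases string literals and treats `id,amount` and `id, amount` as different tokens).

```python
import difflib
import re


def normalize_sql(sql: str) -> str:
    """Collapse whitespace, drop a trailing semicolon, and upper-case a view definition.

    Hypothetical helper: upper-casing also changes string literals, so treat this as a rough pass.
    """
    collapsed = re.sub(r"\s+", " ", sql).strip().rstrip(";").strip()
    return collapsed.upper()


def view_diff(oracle_sql: str, snowflake_sql: str) -> list[str]:
    """Return only the tokens that were removed ('- ') or added ('+ ') between two definitions."""
    old_tokens = normalize_sql(oracle_sql).split(" ")
    new_tokens = normalize_sql(snowflake_sql).split(" ")
    return [t for t in difflib.ndiff(old_tokens, new_tokens) if t.startswith(("- ", "+ "))]


if __name__ == "__main__":
    # Hypothetical definitions of the same view on each platform
    oracle_view = "SELECT id, NVL(amount, 0) AS amount\n  FROM orders;"
    snowflake_view = "select id, coalesce(amount, 0) as amount from orders"
    for line in view_diff(oracle_view, snowflake_view):
        print(line)
```

Differences that survive normalization (here, a swapped function) are the ones worth reviewing by hand; a real comparison would more likely use a SQL parser than whitespace splitting.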

Hevo

SEPTEMBER 11, 2024

Data pipelines and workflows have become an inherent part of advances in data engineering, machine learning, and DevOps processes. As their scale and complexity keep growing, so does the need to orchestrate them efficiently. That is where Apache Airflow comes in: an open-source platform designed to programmatically author, schedule, and monitor workflows.
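At the core of this kind of orchestration is a directed acyclic graph (DAG): each task declares which tasks it depends on, and the scheduler runs a task only once its upstream tasks are done. The sketch below illustrates that ordering idea with Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+); it is not Airflow’s API, where dependencies are usually declared with operators such as `extract >> transform`. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"transform"},
    "load": {"transform", "validate"},
    "report": {"load"},
}


def run_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its dependencies.

    Raises graphlib.CycleError if the dependencies contain a cycle (i.e. it is not a DAG).
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(run_order(pipeline))  # ['extract', 'transform', 'validate', 'load', 'report']
```

Rejecting cycles is what makes the "acyclic" part matter: a pipeline where two tasks wait on each other could never start.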

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Hevo

SEPTEMBER 11, 2024

1 GB of data was referred to as big data in 1999. Nowadays, the term is used for petabytes or even exabytes of data (1 exabyte = 1,024 petabytes), close to trillions of records from billions of people. In this fast-moving landscape, the key to making a difference is picking the right data storage solution for your business.

Hevo

SEPTEMBER 11, 2024

A data pipeline is an indispensable part of a data engineering workflow. It extracts, transforms, and stores data from disparate sources and ensures that the right data is available at the right time.
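The three stages can be sketched end to end with the standard library alone. In this minimal example, assuming a small CSV export as the source and an in-memory SQLite database as the store (both stand-ins for real systems), the transform step trims whitespace, normalizes currency codes, and drops rows with a missing amount. The sample data and function names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent spacing, mixed-case currencies, one missing amount
RAW_CSV = """order_id,amount,currency
1, 19.99 ,usd
2,5.00,EUR
3,,usd
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: parse raw CSV text into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, float, str]]:
    """Transform: clean types and values, dropping rows without an amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        cleaned.append((int(row["order_id"]), float(amount), row["currency"].strip().upper()))
    return cleaned


def load(rows: list[tuple[int, float, str]], conn: sqlite3.Connection) -> None:
    """Load: store the cleaned rows in a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each stage a separate function makes it easy to test them in isolation or hand them to an orchestrator as individual tasks.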

Hevo

SEPTEMBER 11, 2024

We’re excited to announce that Hevo Data has achieved the prestigious Snowflake Ready Technology Validation certification! This recognition solidifies our commitment to delivering top-notch data integration solutions that seamlessly work with Snowflake, a leading AI Data Cloud. What is the Snowflake Ready Technology Validation Program?
