5 Super Cheat Sheets to Master Data Science

KDnuggets

DECEMBER 8, 2023

The collection of super cheat sheets covers basic concepts of data science, probability & statistics, SQL, machine learning, and deep learning.

KDnuggets

DECEMBER 8, 2023

The collection of super cheat sheets covers basic concepts of data science, probability & statistics, SQL, machine learning, and deep learning.

databricks

DECEMBER 8, 2023

Retrieval Augmented Generation (RAG) is an efficient mechanism to provide relevant data as context in Gen AI applications. Most RAG applications typically use.

KDnuggets

DECEMBER 8, 2023

OpenAI revolutionizes personal AI customization with its no-code approach to creating custom ChatGPTs.

Towards Data Science

DECEMBER 8, 2023

Leverage some simple equations to generate related columns in test tables. Image generated with DALL-E 3 I’ve recently been playing around with Databricks Labs Data Generator to create completely synthetic datasets from scratch. As part of this, I’ve looked at building sales data around different stores, employees, and customers. As such, I wanted to create relationships between the columns I was artificially populating — such as mapping employees and customers to a certain store.

Advertisement

Whether you’re creating complex dashboards or fine-tuning large language models, your data must be extracted, transformed, and loaded. ETL and ELT pipelines form the foundation of any data product, and Airflow is the open-source data orchestrator specifically designed for moving and transforming data in ETL and ELT pipelines. This eBook covers: An overview of ETL vs.

KDnuggets

DECEMBER 8, 2023

Google has introduced a revamped AI model that is said to outperform ChatGPT. Let’s learn more.

Towards Data Science

DECEMBER 8, 2023

Clustering: A simple way to group similar rows and prevent unnecessary data processing Continue reading on Towards Data Science »

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Striim

DECEMBER 8, 2023

Note: To follow best practices guide, you must have the Persisted Streams add-on in Striim Cloud or Striim Platform. Introduction Change Data Capture (CDC) is a critical methodology, particularly in scenarios demanding real-time data integration and analytics. CDC is a technique designed to efficiently capture and track changes made in a source database, thereby enabling real-time data synchronization and streamlining the process of updating data warehouses, data lakes, or other systems.

databricks

DECEMBER 8, 2023

Enterprise leaders are turning to the Databricks Data Intelligence Platform to create a centralized source of high-quality data that business teams can leverage.

The Modern Data Company

DECEMBER 8, 2023

The post The Role of Data Products in Maximizing ROI from AI Initiatives appeared first on TheModernDataCompany.

Striim

DECEMBER 8, 2023

In today’s data-driven business landscape, the ability to effectively capture and utilize real-time data is paramount. Change Data Capture (CDC) is not just a technical process; it’s a gateway to unparalleled business efficiency and intelligence. Let’s explore how Striim’s ‘Read Once, Write Everywhere’ CDC pattern is revolutionizing how businesses handle data.

Speaker: Tamara Fingerlin, Developer Advocate

In this new webinar, Tamara Fingerlin, Developer Advocate, will walk you through many Airflow best practices and advanced features that can help you make your pipelines more manageable, adaptive, and robust. She'll focus on how to write best-in-class Airflow DAGs using the latest Airflow features like dynamic task mapping and data-driven scheduling!

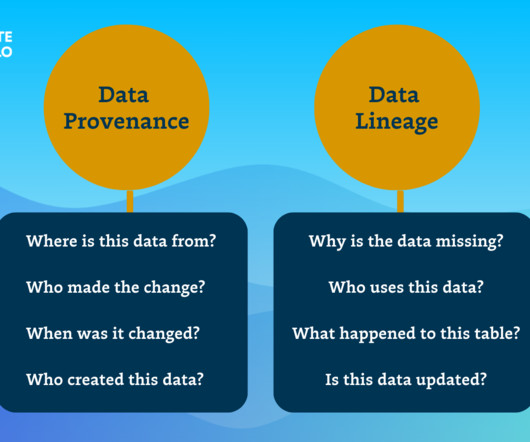

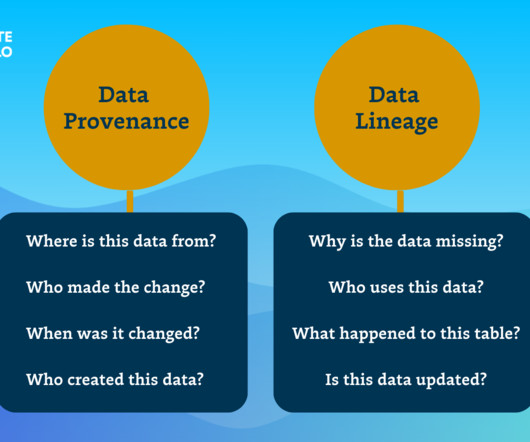

Monte Carlo

DECEMBER 8, 2023

What’s something you never want your data to be? Mysterious. The best starting place to make sure you really know your data is, well, your data’s starting place – wherever that may be. For data teams, it’s essential to know your data from the source throughout its entire lifecycle. But, as data moves and is transformed through the pipeline, it can become increasingly complex to trace its journey.

Monte Carlo

DECEMBER 8, 2023

What’s something you never want your data to be? Mysterious. The best starting place to make sure you really know your data is, well, your data’s starting place – wherever that may be. For data teams, it’s essential to know your data from the source throughout its entire lifecycle. But, as data moves and is transformed through the pipeline, it can become increasingly complex to trace its journey.

Let's personalize your content