Beginner’s Guide to Machine Learning Testing With DeepChecks

KDnuggets

JUNE 19, 2024

Perform data integrity tests and generate model evaluation reports by writing a few lines of code.
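
DeepChecks bundles many integrity checks into ready-made suites. The function below is not the DeepChecks API. It is a minimal, stdlib-only sketch of what a few basic integrity checks look at: missing values, columns with mixed types, and exact duplicate rows. The function name `integrity_report` and the sample records are hypothetical.

```python
from collections import Counter
from typing import Any


def integrity_report(rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Hypothetical helper: run a few basic data integrity checks on a list of records."""
    columns = sorted({key for row in rows for key in row})
    # Missing values: the column is absent from a row or explicitly None.
    missing = {col: sum(1 for row in rows if row.get(col) is None) for col in columns}
    # Python types seen in each column, ignoring missing values.
    types = {
        col: {type(row[col]).__name__ for row in rows if row.get(col) is not None}
        for col in columns
    }
    mixed_types = [col for col in columns if len(types[col]) > 1]
    # Exact duplicate rows, compared on their sorted (column, value) pairs.
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicate_rows = sum(n - 1 for n in counts.values() if n > 1)
    return {"missing": missing, "mixed_types": mixed_types, "duplicate_rows": duplicate_rows}


rows = [
    {"age": 34, "city": "Paris"},
    {"age": "34", "city": "Paris"},  # age stored as a string
    {"age": None, "city": "Lyon"},   # missing age
    {"age": 34, "city": "Paris"},    # duplicate of the first row
]
print(integrity_report(rows))
```

Each check returns counts rather than failing outright, so a caller can decide which thresholds should block a training run.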

Engineering at Meta

JUNE 19, 2024

We’re introducing parameter vulnerability factor (PVF), a novel metric for understanding and measuring AI systems’ vulnerability against silent data corruptions (SDCs) in model parameters. PVF can be tailored to different AI models and tasks, adapted to different hardware faults, and even extended to the training phase of AI models. We’re sharing results of our own case studies using PVF to measure the impact of SDCs in model parameters, as well as potential methods of identifying SDCs in model
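
One way to read PVF is as the fraction of injected parameter corruptions that lead to an incorrect model output. The sketch below estimates that fraction by fault injection under loudly stated assumptions: the model is a hypothetical toy linear classifier, the fault model is a single random bit flip in the float32 encoding of one weight, and "incorrect" means any prediction differs from the fault-free run. The names `flip_bit`, `predict`, and `estimate_pvf` are illustrative and are not Meta's code.

```python
import random
import struct


def flip_bit(value: float, bit: int) -> float:
    """Flip one bit (0-31) of the float32 encoding of value to mimic an SDC."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (corrupted,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return corrupted


def predict(weights: list[float], x: list[float]) -> int:
    """Hypothetical toy model: outputs 1 if the dot product w.x is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > 0 else 0


def estimate_pvf(weights: list[float], inputs: list[list[float]], trials: int, seed: int = 0) -> float:
    """Fraction of single-bit parameter corruptions that change at least one prediction."""
    rng = random.Random(seed)
    golden = [predict(weights, x) for x in inputs]  # fault-free reference outputs
    failures = 0
    for _ in range(trials):
        corrupted = list(weights)
        i = rng.randrange(len(corrupted))  # pick a random parameter...
        corrupted[i] = flip_bit(corrupted[i], rng.randrange(32))  # ...and a random bit to flip
        if [predict(corrupted, x) for x in inputs] != golden:
            failures += 1
    return failures / trials


weights = [0.8, -0.5, 0.3]
inputs = [[1.0, 0.5, -0.2], [-0.3, 1.2, 0.9], [0.6, -0.4, 0.1]]
print(f"estimated PVF: {estimate_pvf(weights, inputs, trials=1000):.3f}")
```

Flips in low mantissa bits barely move a weight, while sign and high exponent flips can change predictions, which is why the estimate depends on both the model and the assumed fault model.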

KDnuggets

JUNE 19, 2024

Learn about enhancing LLMs with real-time information retrieval and intelligent agents.

Precisely

JUNE 19, 2024

Key Takeaways: Adopting both CCM and CRM platforms can significantly enhance your customer experience through personalized communications, automated workflows, and consistent messaging across channels. Automating repetitive communication tasks reduces manual effort, saving you time and cutting costs. As consumer expectations for efficient and personalized experiences continue to rise, effectively managing customer relationships and communications is more crucial than ever.

In Airflow, DAGs (your data pipelines) support nearly every use case. As these workflows grow in complexity and scale, efficiently identifying and resolving issues becomes a critical skill for every data engineer. This is a comprehensive guide with best practices and examples for debugging Airflow DAGs. You’ll learn how to: create a standardized debugging process to quickly diagnose errors in your DAGs; identify common issues with DAGs, tasks, and connections; distinguish between Airflow-relate

Scott Logic

JUNE 19, 2024

Introduction

The Cloud Carbon Footprint tool was discussed in a previous blog post as a third-party method of estimating emissions associated with cloud workloads. However, moving to the cloud is not suitable for every workload; you may, for example, have reasons to keep your data on-premises. It’s also possible that your local energy is produced by greener methods than those used by cloud providers, so moving to the cloud could result in greater carbon emissions, as well as generating electronic waste from
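
Operational emissions for an on-premises server can be estimated with the same general shape the Cloud Carbon Footprint methodology uses: energy in kWh, scaled by the facility's power usage effectiveness (PUE), multiplied by the grid's emissions factor. The sketch below assumes you already know the server's average power draw; the wattage, PUE, and grid factor in the usage line are placeholder values, not measurements, and embodied emissions from manufacturing and e-waste are deliberately left out.

```python
def estimate_emissions_kg(avg_power_watts: float, hours: float, pue: float,
                          grid_kg_co2e_per_kwh: float) -> float:
    """Operational emissions (kg CO2e) of one server under the stated assumptions."""
    # Energy drawn by the IT equipment itself, converted from watt-hours to kWh.
    it_energy_kwh = avg_power_watts * hours / 1000
    # PUE scales IT energy up to cover facility overheads such as cooling.
    facility_energy_kwh = it_energy_kwh * pue
    # Multiply by the carbon intensity of the local electricity grid.
    return facility_energy_kwh * grid_kg_co2e_per_kwh


# Placeholder inputs: 200 W average draw for 30 days, PUE 1.5, 0.2 kg CO2e per kWh.
print(estimate_emissions_kg(200, 30 * 24, 1.5, 0.2))
```

With these placeholders the server uses 216 kWh at the facility level, or roughly 43.2 kg CO2e. Running the same function with a cloud provider's PUE and grid factor gives a like-for-like comparison of the operational side only.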

Hevo

JUNE 19, 2024

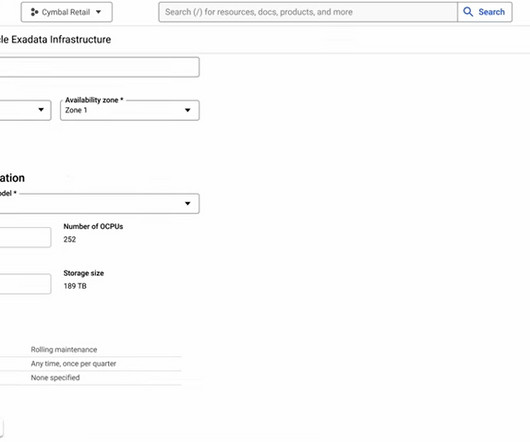

Oracle is widely used to store, manage, and perform complex operations on data, making it ideal for business-critical operations. You can efficiently scale your business data by hosting Oracle services on the Google Cloud Platform. GCP offers efficient resource utilization, which can be helpful when performing operations like data processing, analysis, and visualization.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Hevo

JUNE 19, 2024

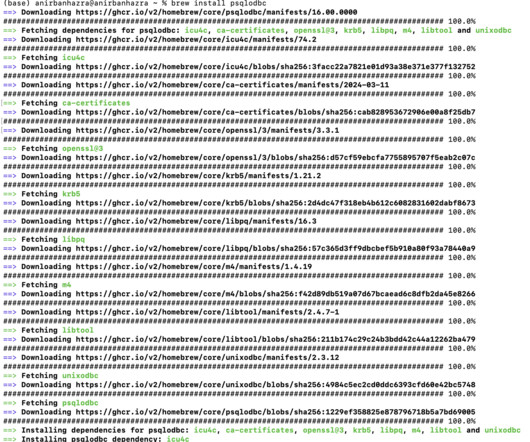

Many organizations are drawn to PostgreSQL’s robust features, open-source nature, and cost-effectiveness, and hence they look to migrate their data from their existing database to PostgreSQL. In this guide, we’ll discuss the Oracle to PostgreSQL migration process. Having done this migration several times, I understand the challenges and complexities of such a transition.
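
A recurring step in an Oracle to PostgreSQL migration is mapping column types. The sketch below covers a small, simplified subset of commonly used mappings (for example, Oracle `DATE` also stores a time of day, so it usually maps to `timestamp(0)`, and integer-valued `NUMBER(p)` columns can shrink to `smallint`, `integer`, or `bigint`). The function `map_oracle_type` is hypothetical; dedicated migration tools handle many more types and edge cases, such as negative scales.

```python
import re

# Hypothetical, simplified subset of Oracle -> PostgreSQL type mappings.
SIMPLE_TYPES = {
    "CLOB": "text",
    "NCLOB": "text",
    "BLOB": "bytea",
    "DATE": "timestamp(0)",  # Oracle DATE includes a time of day
    "BINARY_FLOAT": "real",
    "BINARY_DOUBLE": "double precision",
}


def map_oracle_type(oracle_type: str) -> str:
    t = oracle_type.strip().upper()
    if t in SIMPLE_TYPES:
        return SIMPLE_TYPES[t]
    # VARCHAR2(n) / NVARCHAR2(n), optionally with BYTE or CHAR length semantics.
    m = re.fullmatch(r"N?VARCHAR2\((\d+)(?:\s+(?:BYTE|CHAR))?\)", t)
    if m:
        return f"varchar({m.group(1)})"
    # NUMBER, NUMBER(p), or NUMBER(p, s) with a non-negative scale.
    m = re.fullmatch(r"NUMBER(?:\((\d+)(?:,\s*(\d+))?\))?", t)
    if m:
        if m.group(1) is None:
            return "numeric"
        p, s = int(m.group(1)), int(m.group(2) or 0)
        if s > 0:
            return f"numeric({p},{s})"
        # Integer-valued NUMBER(p): pick the smallest PostgreSQL integer type that fits.
        if p <= 4:
            return "smallint"
        if p <= 9:
            return "integer"
        if p <= 18:
            return "bigint"
        return f"numeric({p})"
    raise ValueError(f"unmapped Oracle type: {oracle_type}")


for t in ["VARCHAR2(100 CHAR)", "NUMBER(9)", "number(10, 2)", "DATE", "BLOB"]:
    print(t, "->", map_oracle_type(t))
```

Raising on unknown types, rather than guessing, keeps unmapped columns visible for manual review.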

Hevo

JUNE 19, 2024

In today’s dynamic business landscape, mastering data integration is not just a choice but a necessity. Valuable information resides in many sources, from corporate databases to customer interactions on your website, and to make use of it you need a thorough understanding of the various types of integration.

Hevo

JUNE 19, 2024

Debezium is an open-source, distributed system that converts changes in existing databases into real-time event streams so that various applications can consume and respond to them immediately. Debezium provides connectors for PostgreSQL, SQL Server, MySQL, Oracle, MongoDB, and more to stream such changes from the respective databases.
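
Each Debezium change event carries an envelope with fields such as `before`, `after`, `source`, `op`, and `ts_ms`, where `op` is `c` (create), `u` (update), `d` (delete), `r` (snapshot read), or `t` (truncate). The sketch below decodes a JSON-serialized event value, assuming the JSON converter; with schemas enabled the envelope sits under a `payload` key. The function `describe_change` and the sample event are hypothetical.

```python
import json
from typing import Any, Optional

# Debezium's operation codes mapped to readable names.
OPS = {"c": "insert", "u": "update", "d": "delete", "r": "snapshot read", "t": "truncate"}


def describe_change(raw_value: Optional[bytes]) -> Optional[dict[str, Any]]:
    """Hypothetical helper: summarize one Debezium change event value."""
    if raw_value is None:
        return None  # tombstone record emitted after a delete; nothing to decode
    event = json.loads(raw_value)
    # With schemas enabled, the envelope is nested under "payload".
    payload = event.get("payload", event)
    op = payload["op"]
    return {
        "operation": OPS.get(op, op),
        "before": payload.get("before"),  # row state before the change (None for inserts)
        "after": payload.get("after"),    # row state after the change (None for deletes)
        "table": (payload.get("source") or {}).get("table"),
    }


sample = json.dumps({
    "payload": {
        "before": None,
        "after": {"id": 1, "name": "Ada"},
        "source": {"table": "customers"},
        "op": "c",
        "ts_ms": 1718755200000,
    }
}).encode("utf-8")
print(describe_change(sample))
```

Handling the `None` value explicitly matters because a delete is typically followed by a tombstone record with a null value.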

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

Hevo

JUNE 19, 2024

Organizations use Kafka and Debezium to track real-time changes in databases and stream them to different applications. But because Kafka topics often hold a colossal number of messages, serializing these messages can become challenging. Every message in a Kafka topic has a key and a value.
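
Because a Kafka record's key and value are both stored as bytes, each needs its own serializer. The sketch below is a stdlib-only illustration, not a Kafka client API: the helper names `serialize`, `deserialize`, and `to_record` are hypothetical, and JSON is an assumed format. Sorting keys and using compact separators makes the key bytes deterministic, which matters because records with the same key bytes are routed to the same partition by the default partitioner.

```python
import json
from typing import Any, Optional


def serialize(obj: Any) -> Optional[bytes]:
    """Hypothetical JSON serializer producing deterministic bytes."""
    if obj is None:
        return None  # a null value is how Kafka represents a tombstone
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")


def deserialize(data: Optional[bytes]) -> Any:
    """Inverse of serialize."""
    if data is None:
        return None
    return json.loads(data.decode("utf-8"))


def to_record(key: Any, value: Any) -> tuple[Optional[bytes], Optional[bytes]]:
    """Serialize the key and value independently, as a producer would."""
    return serialize(key), serialize(value)


key_bytes, value_bytes = to_record({"id": 42}, {"id": 42, "email": "ada@example.com"})
print(key_bytes, deserialize(value_bytes))
```

Keeping the key small (here just the primary key) and putting the full row in the value is a common design choice, since only the key influences partitioning.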

Hevo

JUNE 19, 2024

AWS offers serverless, fully managed products that help organizations integrate data faster and at scale, which is very helpful for organizations that aim to further streamline their processes. One such offering is AWS Glue Workflow.

Hevo

JUNE 19, 2024

In a world increasingly dominated by data, it is more important than ever for data professionals to build ways of connecting traditional data warehouses with today’s modern machine learning and AI platforms.

Hevo

JUNE 19, 2024

When it comes to migrating data from MongoDB to PostgreSQL, I’ve had my fair share of trying different methods and even making rookie mistakes, only to learn from them.
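
A common stumbling block when moving MongoDB documents into PostgreSQL tables is nested structure. The sketch below shows one possible approach, assumed rather than taken from the article: nested sub-documents are flattened into underscore-joined column names, and arrays are kept as JSON text (for example, to load into a `jsonb` column). The function name `flatten` and the sample document are hypothetical.

```python
import json
from typing import Any


def flatten(doc: dict[str, Any], prefix: str = "", sep: str = "_") -> dict[str, Any]:
    """Hypothetical helper: flatten a nested document into column -> value pairs."""
    flat: dict[str, Any] = {}
    for key, value in doc.items():
        column = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into sub-documents, extending the column-name prefix.
            flat.update(flatten(value, column, sep))
        elif isinstance(value, list):
            # Keep arrays as JSON text rather than exploding them into columns.
            flat[column] = json.dumps(value)
        else:
            flat[column] = value
    return flat


doc = {
    "_id": "a1",
    "name": "Ada",
    "address": {"city": "London", "geo": {"lat": 51.5}},
    "tags": ["x", "y"],
}
print(flatten(doc))
```

Storing arrays as JSON avoids guessing a fixed column count; normalizing them into a child table is the alternative when they need to be queried relationally.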

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.
