5 Free University Courses to Learn Machine Learning

KDnuggets

MAY 14, 2024

Want to learn machine learning from the best resources? Check out these free machine learning courses from the top universities in the world.

databricks

MAY 14, 2024

We recently introduced DBRX: an open, state-of-the-art, general-purpose LLM. DBRX was trained, fine-tuned, and evaluated using Mosaic AI Training, scaling training to…

KDnuggets

MAY 14, 2024

100% online master’s program with flexible schedules designed for working professionals. Enrolling now for October 28th.

ArcGIS

MAY 14, 2024

Prompt the Segment Anything Model (SAM) with free-form text to extract features in your imagery.

Advertisement

In Airflow, DAGs (your data pipelines) support nearly every use case. As these workflows grow in complexity and scale, efficiently identifying and resolving issues becomes a critical skill for every data engineer. This is a comprehensive guide to debugging Airflow DAGs, with best practices and examples. You’ll learn how to: create a standardized debugging process to quickly diagnose errors in your DAGs; identify common issues with DAGs, tasks, and connections; distinguish between Airflow-relate

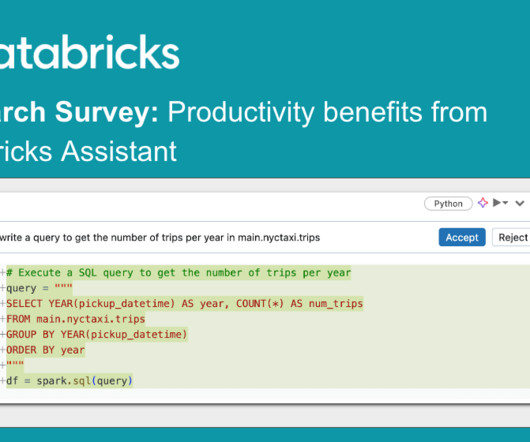

databricks

MAY 14, 2024

In the fast-paced landscape of data science and engineering, integrating Artificial Intelligence (AI) has become integral for enhancing productivity. We’ve seen many tools.

Snowflake

MAY 14, 2024

Did you know that Snowflake has five advanced role-based certifications to help you stand out in the data community as a Snowflake expert? The Snowflake Advanced Certification Series (Architect, Data Engineer, Data Scientist, Administrator, Data Analyst) offers role-based certifications designed for Snowflake practitioners with one to two years of experience (depending on the program).

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Snowflake

MAY 14, 2024

The pharmaceutical industry generates a great deal of identifiable data (such as clinical trial data and patient engagement data) that is subject to guardrails around “use and access.” Data captured for the purpose described in a protocol is called “primary use.” However, once anonymized, this data can be used for other inferences, which we can collectively call secondary analyses.

databricks

MAY 14, 2024

Successfully building GenAI applications means going beyond just leveraging the latest cutting-edge models. It requires the development of compound AI systems that integrate…

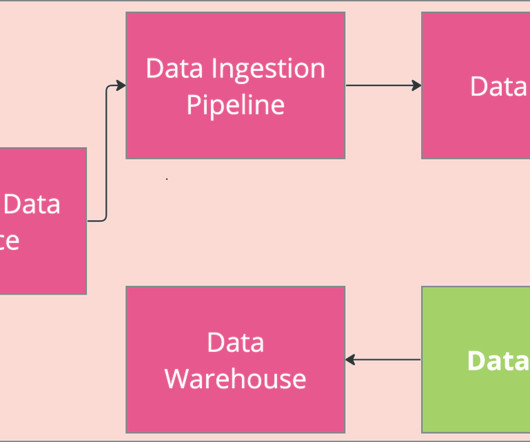

Towards Data Science

MAY 14, 2024

MLOps: Data Pipeline Orchestration. Part 1 of Dataform 101: fundamentals of a single-repo, multi-environment Dataform setup with least-privilege access control and infrastructure as code. Dataform is a new service integrated into the GCP suite of services that enables teams to develop and operationalise complex, SQL-based data pipelines.

Engineering at Meta

MAY 14, 2024

When Threads first launched, one of the top feature requests was for a web client. In this episode of the Meta Tech Podcast, Pascal Hartig (@passy) sits down with Ally C. and Kevin C., two engineers on the Threads Web Team that delivered the basic version of Threads for web in just under three months. Ally and Kevin share how their team moved swiftly by leveraging Meta’s shared infrastructure and the nimble engineering practices of their colleagues who built Threads for iOS and Android.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

DataKitchen

MAY 14, 2024

Data Observability and Data Quality Testing Certification Series. We are excited to invite you to a free four-part webinar series that will elevate your understanding and skills in Data Observability and Data Quality Testing. The series is designed for anyone eager to deepen their knowledge and improve their data management practices, whether you are a seasoned data engineer, a data quality manager, or simply passionate about data.

Confluent

MAY 14, 2024

Confluent introduces the CwC partner landscape and new program entrants for Q2 2024.

Precisely

MAY 14, 2024

In March, Precisely held the second in its series of quarterly Automate User Group events, in Atlanta. These user groups, also known as Inspiration Days, allow attendees to gain knowledge and share real-world results and insights with their peers. The interactive event brought Precisely Automate customers together for two jam-packed days of knowledge sharing and learning through presentations, demos from Precisely engineers, and Q&A discussions.

Confluent

MAY 14, 2024

Explore the practical applications of using Amazon EventBridge API destinations to send data in real time to Confluent, enabling a wide range of use cases. In this blog, we focus specifically on real-time analysis of AWS audit logs.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

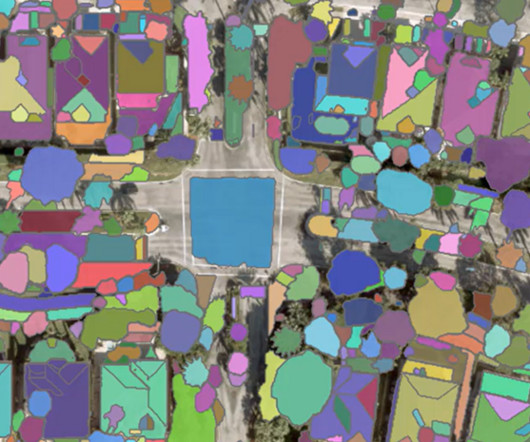

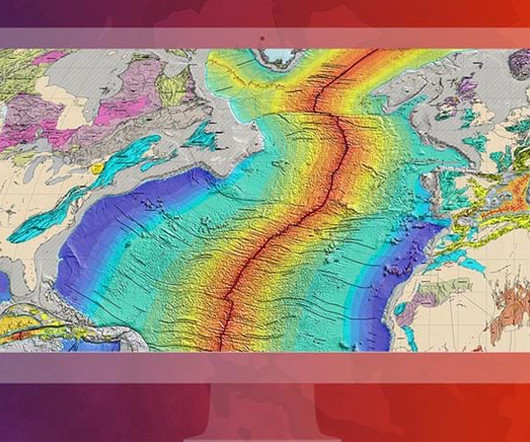

ArcGIS

MAY 14, 2024

Learn about our pretrained SAM deep learning model, available in the ArcGIS Living Atlas of the World.

Monte Carlo

MAY 14, 2024

As organizations seek greater value from their data, data architectures are evolving to meet the demand — and table formats are no exception. Modern table formats are far more than a collection of columns and rows. Depending on the quantity of data flowing through an organization’s pipeline — or the format the data typically takes — the right modern table format can help to make workflows more efficient, increase access, extend functionality, and even offer new opportunities to activate your uns

Hevo

MAY 14, 2024

Attending Snowflake Summit 2024? So are we! Here’s how you can connect with Hevo while you’re in San Francisco from June 3rd-6th. Explore this blog to discover everything you need to know about the Hevo booth, our speaker session, how to register, and much more.

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri