Top 5 Things Every Kafka Developer Should Know

Confluent

OCTOBER 16, 2020

Apache Kafka® is an event streaming platform used by more than 30% of the Fortune 500 today. There are numerous features of Kafka that make it the de-facto standard for […].

Cloudera

OCTOBER 12, 2020

We are thrilled to announce that Cloudera has acquired Eventador, a provider of cloud-native services for enterprise-grade stream processing. Eventador, based in Austin, TX, was founded by Erik Beebe and Kenny Gorman in 2016 to address a fundamental business problem: making it simpler to build streaming applications on real-time data. This typically involved a lot of coding with Java, Scala or similar technologies.

Start Data Engineering

OCTOBER 12, 2020

Table of Contents: Introduction; Design; Setup; Prerequisites; Clone repository; Get data; Code; Move data and script to the cloud; Create an EMR cluster; Add steps and wait to complete; Terminate EMR cluster; Run the DAG; Conclusion; Further reading. Introduction: I have been asked, and have seen others ask, how people are automating Apache Spark jobs on EMR and how to submit Spark jobs to an EMR cluster from Airflow.

Teradata

OCTOBER 13, 2020

The most frequently asked question of Finance departments today is, ‘whose data do we trust’? Here’s how to ensure Finance always has the correct answer.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

Netflix Tech

OCTOBER 29, 2020

Netflix Android and iOS Studio Apps, now powered by Kotlin Multiplatform. By David Henry & Mel Yahya. Over the last few years Netflix has been developing a mobile app called Prodicle to innovate in the physical production of TV shows and movies. The world of physical production is fast-paced, and needs vary significantly between the country, region, and even from one production to the next.

Data Engineering Podcast

OCTOBER 26, 2020

Summary One of the most challenging aspects of building a data platform has nothing to do with pipelines and transformations. If you are putting your workflows into production, then you need to consider how you are going to implement data security, including access controls and auditing. Different databases and storage systems all have their own method of restricting access, and they are not all compatible with each other.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Cloudera

OCTOBER 1, 2020

Since 2013 the UK Government’s flagship ‘Cloud First’ policy has been at the forefront of enabling departments to shed their legacy IT architecture in order to meaningfully embrace digital transformation. The policy states that the cloud (and specifically, public cloud) should be the default position for any new services, unless it can be demonstrated that other alternatives offer better value for money.

Grouparoo

OCTOBER 26, 2020

When Brian, Evan, and I first talked about starting a company, we already had some ideas in mind about what we might want to do differently from our past roles. The three of us had all worked together before at TaskRabbit, but since we were starting a brand new company, we decided to approach how we would work from first principles. I thought we’d share some tidbits about how we work right now.

Teradata

OCTOBER 15, 2020

The old paradigm of the data warehouse serving as the single source of truth in today's ever evolving data landscape can no longer be sustained. Find out why.

Netflix Tech

OCTOBER 27, 2020

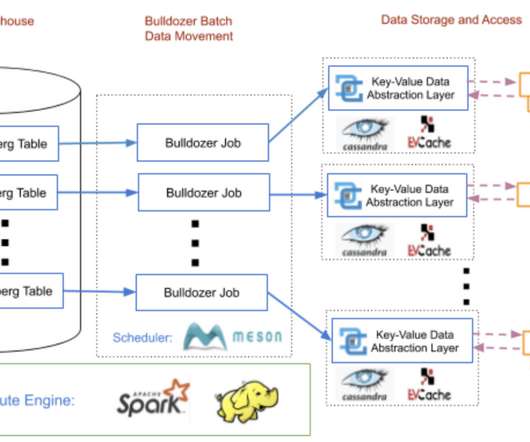

By Tianlong Chen and Ioannis Papapanagiotou. Netflix has more than 195 million subscribers that generate petabytes of data every day. Data scientists and engineers collect this data from our subscribers and videos, and implement data analytics models to discover customer behaviour with the goal of maximizing user joy. Usually, data scientists and engineers write Extract-Transform-Load (ETL) jobs and pipelines using big data compute technologies, like Spark or Presto, to process this data and perio

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Data Engineering Podcast

OCTOBER 19, 2020

Summary In order for analytics and machine learning projects to be useful, they require a high degree of data quality. To ensure that your pipelines are healthy you need a way to make them observable. In this episode Barr Moses and Lior Gavish, co-founders of Monte Carlo, share the leading causes of what they refer to as data downtime and how it manifests.

Confluent

OCTOBER 1, 2020

Each month, we’ve announced a set of Confluent features organized around what we think are the key foundational traits of cloud-native data systems as part of Project Metamorphosis. Data systems […].

Cloudera

OCTOBER 26, 2020

Growing up, were you ever told you can’t have it all? That you can’t eat all the snacks in one sitting? That you can’t watch the complete Back to the Future trilogy as well as study for your science exam in one evening? Over time, we learn to set priorities, make a decision for one thing over the other, and compromise. Just like when it comes to data access in business.

Rock the JVM

OCTOBER 25, 2020

Discover how Akka Typed revolutionizes actor protocol definitions and dramatically enhances actor mechanics

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri

Teradata

OCTOBER 12, 2020

In honor of World Mental Health Day this past weekend, Shehzeen Rehman writes on the importance of de-stigmatizing mental health and learning how to seek help.

Netflix Tech

OCTOBER 19, 2020

By Maulik Pandey. Our team: Kevin Lew, Narayanan Arunachalam, Elizabeth Carretto, Dustin Haffner, Andrei Ushakov, Seth Katz, Greg Burrell, Ram Vaithilingam, Mike Smith and Maulik Pandey. “@Netflixhelps Why doesn’t Tiger King play on my phone?” (a Netflix member via Twitter). This is an example of a question our on-call engineers need to answer to help resolve a member issue.

Data Engineering Podcast

OCTOBER 12, 2020

Summary Business intelligence efforts are only as useful as the outcomes that they inform. Power BI aims to reduce the time and effort required to go from information to action by providing an interface that encourages rapid iteration. In this episode Rob Collie shares his enthusiasm for the Power BI platform and how it stands out from other options.

Confluent

OCTOBER 27, 2020

As described in the blog post Apache Kafka® Needs No Keeper: Removing the Apache ZooKeeper Dependency, when KIP-500 lands next year, Apache Kafka will replace its usage of Apache ZooKeeper […].

Cloudera

OCTOBER 14, 2020

Background: why choose K8s for Apache Spark? Apache Spark unifies batch processing, real-time processing, stream analytics, machine learning, and interactive query in one platform. While Apache Spark provides a lot of capabilities to support diversified use cases, it comes with additional complexity and high maintenance costs for cluster administrators.

Rock the JVM

OCTOBER 21, 2020

Akka Typed has transformed actor creation: in this article, we explore various methods for managing state within Akka actors

Teradata

OCTOBER 10, 2020

Successful companies need to squeeze maximum value from all of their data & do it at the lowest possible cost. But they often get hit with unexpected budget overruns. Teradata can help.

Netflix Tech

OCTOBER 30, 2020

Part of our series on who works in Analytics at Netflix, and what the role entails. By Rocio Ruelas. Back when we were all working in offices, my favorite days were Monday, Wednesday, and Friday. Those were the days with the best hot breakfast, and I’ve always been a sucker for free food. I started the day by arriving at the LA office right before 8am and finding a parking spot close to the entrance.

Data Engineering Podcast

OCTOBER 5, 2020

Summary Analytical workloads require a well engineered and well maintained data integration process to ensure that your information is reliable and up to date. Building a real-time pipeline for your data lakes and data warehouses is a non-trivial effort, requiring a substantial investment of time and energy. Meroxa is a new platform that aims to automate the heavy lifting of change data capture, monitoring, and data loading.

Confluent

OCTOBER 13, 2020

All around the world, companies are asking the same question: What is happening right now? We are inundated with pieces of data that have a fragment of the answer. But […].

Cloudera

OCTOBER 13, 2020

Recently, my colleague published a blog, Build on Your Investment by Migrating or Upgrading to CDP Data Center, which describes notable CDP Private Cloud Base features. Existing CDH and HDP customers can immediately benefit from this new functionality. This blog focuses on the process to accelerate your journey to CDP Private Cloud Base for both professional services engagements and self-service upgrades.

Grouparoo

OCTOBER 15, 2020

Two of the major components of the @grouparoo/core application are a Node.js API server and a React frontend. We use Actionhero as the API server, and Next.js for our React site generator. As we develop the Grouparoo application, we are constantly adding new API endpoints and changing existing ones. One of the great features of TypeScript is that it can help share type definitions not only within a codebase, but also across multiple codebases or services.

Teradata

OCTOBER 5, 2020

Teradata Vantage on Google Cloud is now generally available! Vantage on Google Cloud is an as-a-service offer in which customers can get the most analytic value from their data. Read more.

Rock the JVM

OCTOBER 11, 2020

Broadcast joins in Apache Spark are a highly effective technique for boosting performance and avoiding memory issues, offering great value for optimization
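
To make the idea behind the teaser above concrete, here is a minimal sketch of a broadcast hash join in plain Python. It does not use the Spark API; the function name `broadcast_hash_join` and the sample `orders` and `countries` data are hypothetical, chosen only for illustration. The assumption is that the small table fits in memory, so it can be turned into a lookup that every partition of the large table joins against locally, which is what avoids shuffling the large side.

```python
from typing import List, Tuple


def broadcast_hash_join(
    large_partitions: List[List[Tuple[int, str]]],
    small_table: List[Tuple[int, str]],
) -> List[Tuple[int, str, str]]:
    """Inner-join every partition of the large side against a small lookup."""
    # Build the lookup once. On a real cluster this dict would be copied
    # ("broadcast") to each worker instead of shuffling the large table.
    lookup = dict(small_table)
    joined = []
    for partition in large_partitions:  # each partition is joined locally
        for key, value in partition:
            if key in lookup:  # inner-join semantics: drop unmatched keys
                joined.append((key, value, lookup[key]))
    return joined


# Hypothetical data: two partitions of a large table and one small table.
orders = [[(1, "order-a"), (2, "order-b")], [(1, "order-c"), (3, "order-d")]]
countries = [(1, "DE"), (2, "US")]
result = broadcast_hash_join(orders, countries)
```

The sketch also shows where the memory caveat comes from: the whole small table is held in memory on every worker, so the technique only pays off when that side is genuinely small.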

Domino Data Lab: Data Engineering

OCTOBER 6, 2020

Danger of Big Data. Big data is the rage. This could be lots of rows (samples) and few columns (variables) like credit card transaction data, or lots of columns (variables) and few rows (samples) like genomic sequencing in life sciences research. The Curse of Dimensionality, or Large P, Small N (P >> N) problem, applies to the latter case, where many variables are measured on relatively few samples.
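
One commonly cited symptom of the P >> N setting is that distances between points become hard to tell apart as the number of variables grows. The sketch below illustrates that effect using only the Python standard library; it is an assumption about what such a demonstration could look like, not the method used in the article, and the function name `distance_contrast` is hypothetical.

```python
import math
import random


def distance_contrast(n_samples: int, n_dims: int, rng: random.Random) -> float:
    """Return the nearest/farthest distance ratio from a random query point.

    A ratio near 0 means the nearest neighbour is clearly closer than the
    farthest one; a ratio near 1 means all points look about equally far away.
    """
    points = [[rng.random() for _ in range(n_dims)] for _ in range(n_samples)]
    query = [rng.random() for _ in range(n_dims)]
    dists = [math.dist(query, p) for p in points]
    return min(dists) / max(dists)


rng = random.Random(0)  # fixed seed so repeated runs give the same numbers
low = distance_contrast(50, 2, rng)      # N = 50 samples, P = 2 variables
high = distance_contrast(50, 1000, rng)  # N = 50 samples, P = 1000 variables
print(f"P=2: {low:.3f}  P=1000: {high:.3f}")
```

With the same 50 samples, the ratio moves much closer to 1 at P = 1000 than at P = 2, which is why neighbour-based reasoning degrades when variables far outnumber samples.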

Confluent

OCTOBER 2, 2020

Stream processing applications, including streaming ETL pipelines, materialized caches, and event-driven microservices, are made easy with ksqlDB. Until recently, your options for interacting with ksqlDB were limited to its command-line […].

Speaker: Tamara Fingerlin, Developer Advocate

In this new webinar, Tamara Fingerlin, Developer Advocate, will walk you through many Airflow best practices and advanced features that can help you make your pipelines more manageable, adaptive, and robust. She'll focus on how to write best-in-class Airflow DAGs using the latest Airflow features like dynamic task mapping and data-driven scheduling!
