How to Correctly Select a Sample From a Huge Dataset in Machine Learning

KDnuggets

SEPTEMBER 28, 2022

We explain how choosing a small, representative dataset from a large population can improve model training reliability.

Simon Späti

SEPTEMBER 30, 2022

Ever wanted or been asked to build an open-source Data Lake offloading data for analytics? Asked yourself what components and features that would include? Didn’t know the difference between a Data Lakehouse and a Data Warehouse? Or did you just want to govern your hundreds to thousands of files and have more database-like features, but didn’t know how?

Marc Lamberti

SEPTEMBER 18, 2022

Airflow TaskFlow is a new way of writing DAGs with ease. As you will see, you need to write fewer lines than before to obtain the same DAG, which makes DAGs easier to build, read, and maintain. The TaskFlow API has three main aspects: XComArgs, decorators, and XCom backends. In this tutorial, you will learn what the TaskFlow API is, why it is crucial for you, and how to use it to create your DAGs.

Data Engineering Podcast

SEPTEMBER 11, 2022

Summary Any business that wants to understand its operations and customers through data requires some form of pipeline. Building reliable data pipelines is a complex and costly undertaking with many layered requirements. To reduce the time and effort required to build pipelines that power critical insights, Manish Jethani co-founded Hevo Data.

Confluent

SEPTEMBER 14, 2022

How gaming enterprises like Sony and Big Fish Games use Apache Kafka®, Confluent, and ksqlDB’s data streaming technologies for the best in-game experience, ROI, and real-time capabilities.

U-Next

SEPTEMBER 27, 2022

Cybersecurity, also called computer security or information security, is the practice of preventing theft, damage, loss, or unauthorized access to computers, networks, and data. As our interconnections grow, so do the opportunities for malicious hackers to steal, destroy, or disrupt our lives. The rise in cybercrime has driven up demand for cybersecurity expertise.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Simon Späti

SEPTEMBER 29, 2022

A semantic layer is something we use every day. We build dashboards with yearly and monthly aggregations. We design dimensions for drilling down into reports by region, product, or whatever metrics we are interested in. What has changed is that we no longer use a single business intelligence tool; different teams use different visualization tools (BI, notebooks, and embedded analytics).

Cloudera

SEPTEMBER 7, 2022

We are now well into 2022, and the megatrends that drove the last decade in data (the Apache Software Foundation as a primary innovation vehicle for big data, the arrival of cloud computing, and the debut of cheap distributed storage) have now converged and offer clear patterns for competitive advantage for vendors and value for customers. Cloudera has been parlaying those patterns into clear wins for the community at large and, more importantly, streamlining the benefits of that innovation to

Data Engineering Podcast

SEPTEMBER 25, 2022

Summary Regardless of how data is being used, it is critical that the information can be trusted. The practice of data reliability engineering has gained momentum recently to address that need. To help support the efforts of data teams, the folks at Soda Data created the Soda Checks Language and the corresponding Soda Core utility that acts on this new DSL.

Confluent

SEPTEMBER 20, 2022

Microservices have numerous benefits, but the data silos they create are hard to manage. Learn how Kafka Connect and CDC provide real-time database synchronization, bridging data silos between all microservice applications.

Teradata

SEPTEMBER 30, 2022

With the release of VantageCloud Lake and ClearScape Analytics, Teradata brings a cloud-native architecture to extend the technical innovations and differentiators that Vantage is well known for.

KDnuggets

SEPTEMBER 22, 2022

How to increase your chances of avoiding the mistake.

Cloudera

SEPTEMBER 23, 2022

In a recent blog, Cloudera Chief Technology Officer Ram Venkatesh described the evolution of a data lakehouse, as well as the benefits of using an open data lakehouse, especially the open Cloudera Data Platform (CDP). If you missed it, you can read up about it here. Modern data lakehouses are typically deployed in the cloud. Cloud computing brings several distinct advantages that are core to the lakehouse value proposition.

Data Engineering Podcast

SEPTEMBER 25, 2022

Summary Data integration from source systems to their downstream destinations is the foundational step for any data product. The growing expectation for information to be instantly accessible drives the need for reliable change data capture. The team at Fivetran has recently introduced that functionality to power real-time data products.

Confluent

SEPTEMBER 28, 2022

Highlighting sessions on the power of our Confluent-Google partnership: multi-layer data security, real-time cloud data streaming and analytics, database modernization, and more.

Teradata

SEPTEMBER 1, 2022

Financial services organizations that exhibit true data literacy avoid bottlenecks and instead choose to build best-in-class solutions that meet current and future needs. Find out more.

KDnuggets

SEPTEMBER 20, 2022

When building and optimizing your classification model, measuring how accurately it predicts your expected outcome is crucial. However, this metric alone is never the entire story, as it can still offer misleading results. That's where these additional performance evaluations come into play to help tease out more meaning from your model.

Netflix Tech

SEPTEMBER 29, 2022

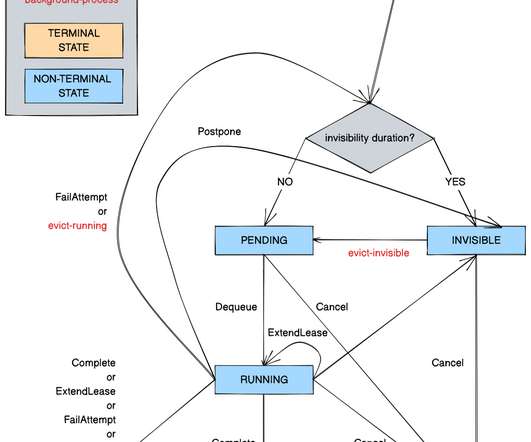

Timestone: Netflix’s High-Throughput, Low-Latency Priority Queueing System with Built-in Support for Non-Parallelizable Workloads, by Kostas Christidis. Timestone is a high-throughput, low-latency priority queueing system we built in-house to support the needs of Cosmos, our media encoding platform. Over the past 2.5 years, its usage has increased, and Timestone is now also the priority queueing engine backing Conductor, our general-purpose workflow orchestration engine, and BDP Sch

Cloudera

SEPTEMBER 9, 2022

The promise of a modern data lakehouse architecture. Imagine having self-service access to all business data, anywhere it may be, and being able to explore it all at once. Imagine quickly answering burning business questions nearly instantly, without waiting for data to be found, shared, and ingested. Imagine independently discovering rich new business insights from both structured and unstructured data working together, without having to beg for data sets to be made available.

Data Engineering Podcast

SEPTEMBER 18, 2022

Summary To improve efficiency in any business, you must first know what is contributing to wasted effort or missed opportunities. When your business operates across multiple locations, it becomes even more challenging and important to gain insights into how work is being done. In this episode Tommy Yionoulis shares his experiences working in the service and hospitality industries and how that led him to found OpsAnalitica, a platform for collecting and analyzing metrics on multi location

Confluent

SEPTEMBER 21, 2022

A deep dive into how microservices work, why they are the backbone of real-time applications, and how to build event-driven microservices applications with Python and Kafka.

dbt Developer Hub

SEPTEMBER 7, 2022

If you’ve ever heard of Marie Kondo, you’ll know she has an incredibly soothing and meditative method to tidying up physical spaces. Her KonMari Method is about categorizing, discarding unnecessary items, and building a sustainable system for keeping stuff. As an analytics engineer at your company, doesn’t that last sentence describe your job perfectly?!

KDnuggets

SEPTEMBER 16, 2022

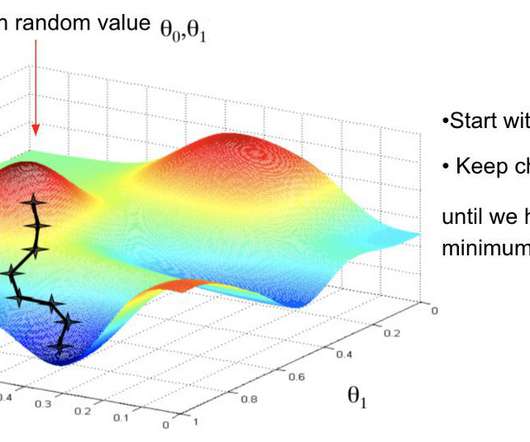

Why is Gradient Descent so important in Machine Learning? Learn more about this iterative optimization algorithm and how it is used to minimize a loss function.

U-Next

SEPTEMBER 30, 2022

“Show, don’t tell” is advice given to writers and screenwriters, but it applies just as well to aspirants who want to land their dream jobs. Apart from your university degree and professional certifications, what adds compelling weight to your candidacy is a solid portfolio. A portfolio is like your business card, and regardless of whether you are a fresher or an experienced professional moving up the corporate ladder, a portfolio is what will ensure you a job, that higher paycheck a

Cloudera

SEPTEMBER 22, 2022

Insurance carriers are always looking to improve operational efficiency. We’ve previously highlighted opportunities to improve digital claims processing with data and AI. In this post, I’ll explore opportunities to enhance risk assessment and underwriting, especially in personal lines and small and medium-sized enterprises. Underwriting is an area that can yield improvements by applying the old saying “work smarter, not harder.”

Data Engineering Podcast

SEPTEMBER 18, 2022

Summary There is a constant tension in business data between growing silos and breaking them down. Even when a tool is designed to integrate information as a guard against data isolation, it can easily become a silo of its own, where you have to make a point of using it to seek out information. To help distribute critical context about data assets and their status into the locations where work is being done, Nicholas Freund co-founded Workstream.

Confluent

SEPTEMBER 12, 2022

How banks and finance companies use Confluent to transform their digital systems with event-driven architecture, real-time payment processing, fraud detection, and analytics.

dbt Developer Hub

SEPTEMBER 12, 2022

Why do people cherry-pick into upper branches? The simplest branching strategy for making code changes to your dbt project repository is to have a single main branch with your production-level code. To update the main branch, a developer will:
1. Create a new feature branch directly from the main branch.
2. Make changes on said feature branch.
3. Test locally.
4. When ready, open a pull request to merge their changes back into the main branch.

If you are just getting started in dbt and deciding which branchin

KDnuggets

SEPTEMBER 13, 2022

This article goes over the top 5 data science skills that pay off and 5 that don’t.