Data-driven 2021: Predictions for a new year in data, analytics and AI

DataKitchen

JANUARY 4, 2021

The post Data-driven 2021: Predictions for a new year in data, analytics and AI first appeared on DataKitchen.

DataKitchen

JANUARY 4, 2021

The post Data-driven 2021: Predictions for a new year in data, analytics and AI first appeared on DataKitchen.

Confluent

JANUARY 26, 2021

Today, I am thrilled to announce a new strategic alliance with Microsoft to enable a seamless, integrated experience between Confluent Cloud and the Azure platform. This represents a significant milestone […].

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.

Cloudera

JANUARY 6, 2021

Introduction. Python is used extensively among Data Engineers and Data Scientists to solve all sorts of problems from ETL/ELT pipelines to building machine learning models. Apache HBase is an effective data storage system for many workflows but accessing this data specifically through Python can be a struggle. For data professionals that want to make use of data stored in HBase the recent upstream project “hbase-connectors” can be used with PySpark for basic operations.

Teradata

JANUARY 5, 2021

By leveraging data to create a 360 degree view of its citizenry, government agencies can create more optimal experiences & improve outcomes such as closing the tax gap or improving quality of care.

Advertisement

In Airflow, DAGs (your data pipelines) support nearly every use case. As these workflows grow in complexity and scale, efficiently identifying and resolving issues becomes a critical skill for every data engineer. This is a comprehensive guide with best practices and examples to debugging Airflow DAGs. You’ll learn how to: Create a standardized process for debugging to quickly diagnose errors in your DAGs Identify common issues with DAGs, tasks, and connections Distinguish between Airflow-relate

Start Data Engineering

JANUARY 30, 2021

Introduction Setup Problems with a single large update Updating in batches Conclusion Further reading Introduction When updating a large number of records in an OLTP database, such as MySQL, you have to be mindful about locking the records. If those records are locked, they will not be editable(update or delete) by other transactions on your database.

François Nguyen

JANUARY 29, 2021

The agile part of DataOps In this previous article, we have defined dataops as a”combination of tools and methods inspired by Agile, Devops and Lean Manufacturing » (thanks to DataKitchen for this definition). Let’s focus on the agile part and why it is so relevant for your data use cases. “Data is like a box of chocolates, you never know what you’re gonna get.” It is about the nature of data : you cannot guess what will be the content and the quality of your data sources

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

Confluent

JANUARY 20, 2021

Apache Kafka® is at the core of a large ecosystem that includes powerful components, such as Kafka Connect and Kafka Streams. This ecosystem also includes many tools and utilities that […].

Cloudera

JANUARY 20, 2021

In this last installment, we’ll discuss a demo application that uses PySpark.ML to make a classification model based off of training data stored in both Cloudera’s Operational Database (powered by Apache HBase) and Apache HDFS. Afterwards, this model is then scored and served through a simple Web Application. For more context, this demo is based on concepts discussed in this blog post How to deploy ML models to production.

Netflix Tech

JANUARY 22, 2021

By Phill Williams and Vijay Gondi Introduction At Netflix, we are passionate about delivering great audio to our members. We began streaming 5.1 channel surround sound in 2010, Dolby Atmos in 2017 , and adaptive bitrate audio in 2019. Continuing in this tradition, we are proud to announce that Netflix now streams Extended HE-AAC with MPEG-D DRC ( xHE-AAC ) to compatible Android Mobile devices (Android 9 and newer).

Start Data Engineering

JANUARY 16, 2021

Introduction Setup Code Conditional logic to read from mock input Custom macro to test for equality Setup environment specific test Run ELT using dbt Conclusion Further reading Introduction With the recent advancements in data warehouses and tools like dbt most transformations(T of ELT) are being done directly in the data warehouse. While this provides a lot of functionality out of the box, it gets tricky when you want to test your sql code locally before deploying to production.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

François Nguyen

JANUARY 23, 2021

or why you should have a look at Data Observability ! This article is the second part on how Airbnb is managing data quality : “Part 2 — A New Gold Standard” The first part can be found here and it was just good principles about roles and responsabilities. The second part is really how they do it and all the steps to have a “certification” They name it Midas, the famous king who can turn everything into gold ( with a not so good ending ).

Team Data Science

JANUARY 8, 2021

Big Data has become the dominant innovation in all high-performing companies. Notable businesses today focus their decision-making capabilities on knowledge gained from the study of big data. Big Data is a collection of large data sets, particularly from new sources, providing an array of possibilities for those who want to work with data and are enthusiastic about unraveling trends in rows of new, unstructured data.

Confluent

JANUARY 12, 2021

Confluent uses property-based testing to test various aspects of Confluent Server’s Tiered Storage feature. Tiered Storage shifts data from expensive local broker disks to cheaper, scalable object storage, thereby reducing […].

Cloudera

JANUARY 20, 2021

Digital transformation is a hot topic for all markets and industries as it’s delivering value with explosive growth rates. Consider that Manufacturing’s Industry Internet of Things (IIOT) was valued at $161b with an impressive 25% growth rate, the Connected Car market will be valued at $225b by 2027 with a 17% growth rate, or that in the first three months of 2020, retailers realized ten years of digital sales penetration in just three months.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

Data Engineering Podcast

JANUARY 25, 2021

Summary Businesses often need to be able to ingest data from their customers in order to power the services that they provide. For each new source that they need to integrate with it is another custom set of ETL tasks that they need to maintain. In order to reduce the friction involved in supporting new data transformations David Molot and Hassan Syyid built the Hotlue platform.

Start Data Engineering

JANUARY 6, 2021

What is backfilling ? Setup Prerequisites Apache Airflow - Execution Day Backfill Conclusion Further Reading References What is backfilling ? Backfilling refers to any process that involves modifying or adding new data to existing records in a dataset. This is a common use case in data engineering. Some examples can be a change in some business logic may need to be applied to an already processed dataset.

François Nguyen

JANUARY 18, 2021

To finish the trilogy (Dataops, MLops), let’s talk about DataGovOps or how you can support your Data Governance initiative. The origin of the term : Datakitchen We must give credit to Chris Bergh and his team DataKictchen. You should visit their website , you will find incredible good stuff there. This article was published in October 2020 with this title : “Data Governance as Code” The idea behind that is you should “actively promotes the safe use of data with automation

Teradata

JANUARY 24, 2021

To satisfy the evolving demands of customers, Chief Commercial Officers need to wield big data in Retail & CPG using a precise scalpel rather than a blunt axe.

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri

Confluent

JANUARY 7, 2021

At Zendesk, Apache Kafka® is one of our foundational services for distributing events among different internal systems. We have pods, which can be thought of as isolated cloud environments where […].

Cloudera

JANUARY 7, 2021

Text classification is a ubiquitous capability with a wealth of use cases. For example, recommendation systems rely on properly classifying text content such as news articles or product descriptions in order to provide users with the most relevant information. Classifying user-generated content allows for more nuanced sentiment analysis. And in the world of e-commerce, assigning product descriptions to the most fitting product category ensures quality control. .

Data Engineering Podcast

JANUARY 18, 2021

Summary The data warehouse has become the central component of the modern data stack. Building on this pattern, the team at Hightouch have created a platform that synchronizes information about your customers out to third party systems for use by marketing and sales teams. In this episode Tejas Manohar explains the benefits of sourcing customer data from one location for all of your organization to use, the technical challenges of synchronizing the data to external systems with varying APIs, and

Start Data Engineering

JANUARY 1, 2021

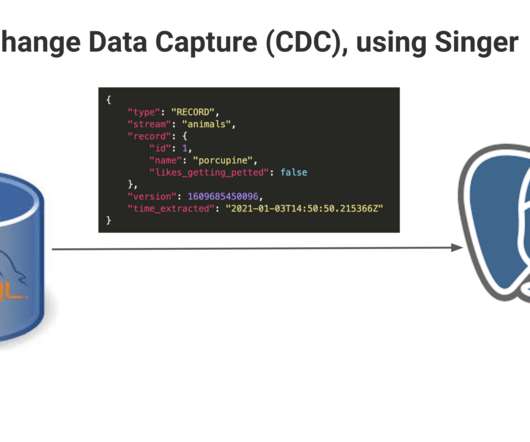

Introduction Why Change Data Capture Setup Prerequisites Source setup Destination setup Source, MySQL CDC, MySQL => PostgreSQL Pros and Cons Pros Cons Conclusion References Introduction Change data capture is a software design pattern used to track every change(update, insert, delete) to the data in a database. In most databases these types of changes are added to an append only log (Binlog in MySQL, Write Ahead Log in PostgreSQL).

Advertisement

Many software teams have migrated their testing and production workloads to the cloud, yet development environments often remain tied to outdated local setups, limiting efficiency and growth. This is where Coder comes in. In our 101 Coder webinar, you’ll explore how cloud-based development environments can unlock new levels of productivity. Discover how to transition from local setups to a secure, cloud-powered ecosystem with ease.

DataKitchen

JANUARY 6, 2021

Savvy executives maximize the value of every budgeted dollar. Decisions to invest in new tools and methods must be backed up with a strong business case. As data professionals, we know the value and impact of DataOps: streamlining analytics workflows, reducing errors, and improving data operations transparency. Being able to quantify the value and impact helps leadership understand the return on past investments and supports alignment with future enterprise DataOps transformation initiatives.

Teradata

JANUARY 3, 2021

Digital payments generate 90% of financial institutions’ useful customer data. How can they exploit its value? Find out more.

Confluent

JANUARY 29, 2021

What happens when you let engineers go wild building an application to track the score of a table football game? This blog post shares SPOUD’s story of engineering a simple […].

Cloudera

JANUARY 18, 2021

2020 put on full display how humanity shows up in times of hardship. We saw everything from street celebrations to usher weary medical personnel home after long days fighting to save lives to places like food banks receiving more donations and volunteers than ever before. Some communities were harder hit than others, and we’ve seen the same in the global workplace.

Advertisement

Large enterprises face unique challenges in optimizing their Business Intelligence (BI) output due to the sheer scale and complexity of their operations. Unlike smaller organizations, where basic BI features and simple dashboards might suffice, enterprises must manage vast amounts of data from diverse sources. What are the top modern BI use cases for enterprise businesses to help you get a leg up on the competition?

Data Engineering Podcast

JANUARY 11, 2021

Summary As data professionals we have a number of tools available for storing, processing, and analyzing data. We also have tools for collaborating on software and analysis, but collaborating on data is still an underserved capability. Gavin Mendel-Gleason encountered this problem first hand while working on the Sesshat databank, leading him to create TerminusDB and TerminusHub.

Monte Carlo

JANUARY 28, 2021

I’ve never done Sales before. Most of my professional history was spent working for a Customer Success company, Gainsight, where I provided the data, insights, and tools for our customer-facing teams. At Gainsight, my bonus was tied to 1) verified customer outcomes, 2) renewal rate, and 3) customer advocacy. I learned to be maniacally customer-focused.

DataKitchen

JANUARY 20, 2021

As DataOps activity takes root within an enterprise, managers face the question of whether to build centralized or decentralized DataOps capabilities. Centralizing analytics brings it under control but granting analysts free reign is necessary to foster innovation and stay competitive. The beauty of DataOps is that you don’t have to choose between centralization and freedom.

Teradata

JANUARY 28, 2021

This post shines a spotlight on the difference between what you already know & the more specific guidance you really need to create a successful data strategy.

Advertisement

With Airflow being the open-source standard for workflow orchestration, knowing how to write Airflow DAGs has become an essential skill for every data engineer. This eBook provides a comprehensive overview of DAG writing features with plenty of example code. You’ll learn how to: Understand the building blocks DAGs, combine them in complex pipelines, and schedule your DAG to run exactly when you want it to Write DAGs that adapt to your data at runtime and set up alerts and notifications Scale you

Let's personalize your content