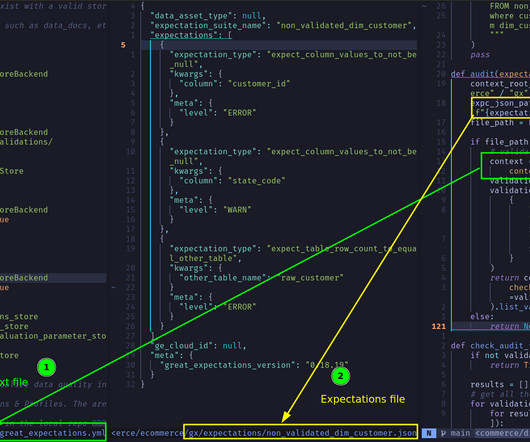

How to implement data quality checks with greatexpectations

Start Data Engineering

JULY 26, 2024

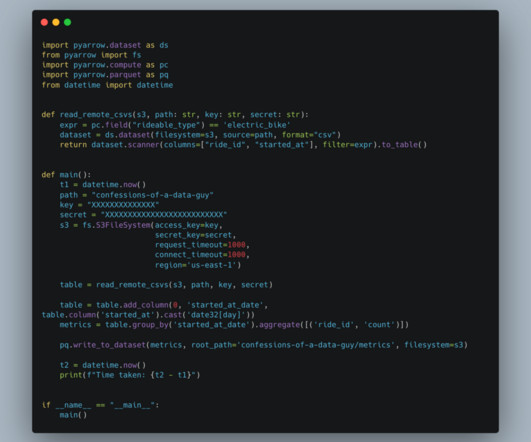

1. Introduction
2. Project overview
3. Check your data before making it available to end-users: the Write-Audit-Publish (WAP) pattern
4. TL;DR: How the greatexpectations library works
   - 4.1. greatexpectations quick setup
5. From an implementation perspective, there are four types of tests
   - 5.1. Running checks on one dataset
   - 5.2. Checks involving the current dataset and its historical data
   - 5.3.
