5 Quirky Data Science Projects to Impress

KDnuggets

SEPTEMBER 12, 2024

Develop unique, standout data science projects to improve your data portfolio.

Analytics Vidhya

SEPTEMBER 12, 2024

Introduction Imagine yourself as a data professional tasked with creating an efficient data pipeline to streamline processes and generate real-time information. Sounds challenging, right? That’s where Mage AI comes in to ensure that the lenders operating online gain a competitive edge. Picture this: thus, unlike many other extensions that require deep setup and constant coding, […] The post Setup Mage AI with Postgres to Build and Manage Your Data Pipeline appeared first on Analytics Vidhy

Confluent

SEPTEMBER 9, 2024

Confluent has acquired WarpStream, an innovative Kafka-compatible streaming solution. Read the full statement by Jay Kreps, co-founder and CEO of Confluent.

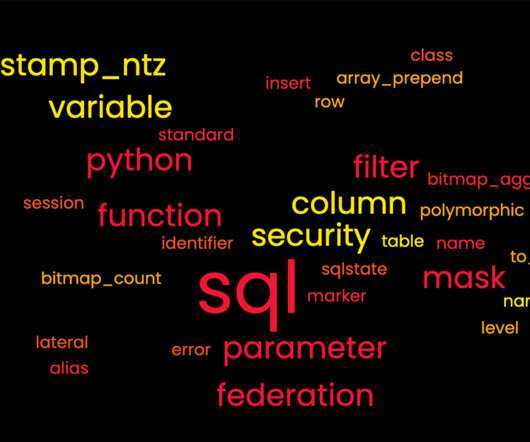

databricks

SEPTEMBER 10, 2024

We are excited to share the latest new features and performance improvements that make Databricks SQL simpler, faster and lower cost than ever.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

KDnuggets

SEPTEMBER 12, 2024

The GitHub repository includes up-to-date learning resources, research papers, guides, popular tools, tutorials, projects, and datasets.

Engineering at Meta

SEPTEMBER 10, 2024

We’re sharing more about the role that reinforcement learning plays in helping us optimize our data centers’ environmental controls. Our reinforcement learning-based approach has helped us reduce energy consumption and water usage across various weather conditions. Meta is revamping its new data center design to optimize for artificial intelligence and the same methodology will be applicable for future data center optimizations as well.

Data Engineering Digest brings together the best content for data engineering professionals from the widest variety of industry thought leaders.

databricks

SEPTEMBER 11, 2024

We are excited to announce that Databricks was named one of the 2024 Fortune Best Workplaces in Technology™. This award reflects our.

KDnuggets

SEPTEMBER 11, 2024

Kickstart your data analyst career with all these free courses.

Confessions of a Data Guy

SEPTEMBER 13, 2024

Did you know there are only 3 types of Data Engineers? It’s true. I hope you are the right one. The post The 3 Types of Data Engineers. appeared first on Confessions of a Data Guy.

Christophe Blefari

SEPTEMBER 13, 2024

Back to work ( credits ) Hey you, can you believe it's already September? This year has been flying. It feels like I just blinked, and here we are. In August, I've been focusing mainly on my next big journey—if you follow me on LinkedIn, you might have caught a sneak peek! I'll be making a full announcement next week. I want to take the time to explain my thought process and ideas behind it.

Speaker: Alex Salazar, CEO & Co-Founder @ Arcade | Nate Barbettini, Founding Engineer @ Arcade | Tony Karrer, Founder & CTO @ Aggregage

There’s a lot of noise surrounding the ability of AI agents to connect to your tools, systems and data. But building an AI application into a reliable, secure workflow agent isn’t as simple as plugging in an API. As an engineering leader, it can be challenging to make sense of this evolving landscape, but agent tooling provides such high value that it’s critical we figure out how to move forward.

databricks

SEPTEMBER 11, 2024

Personal Access Tokens (PATs) are a convenient way to access services like Azure Databricks or Azure DevOps without logging in with your password.

KDnuggets

SEPTEMBER 9, 2024

Exploring the not-so-famous data science libraries that can be useful in your data workflow.

Snowflake

SEPTEMBER 12, 2024

The Snowflake World Tour is making 23 stops around the globe, so you can learn about the latest innovations in the AI Data Cloud in a city near you. This tour will cover Snowflake’s latest advancements that can help you accelerate AI and application development in your organization while advancing the data foundation that makes it all possible. This includes new capabilities related to Snowflake Cortex, streaming, Iceberg open table formats and more.

Striim

SEPTEMBER 11, 2024

Data pipelines are the backbone of your business’s data architecture. Implementing a robust and scalable pipeline ensures you can effectively manage, analyze, and organize your growing data. Most importantly, these pipelines enable your team to transform data into actionable insights, demonstrating tangible business value. According to an IBM study, businesses expect that fast data will enable them to “make better informed decisions using insights from analytics (44%), improved data quality and

Speaker: Andrew Skoog, Founder of MachinistX & President of Hexis Representatives

Manufacturing is evolving, and the right technology can empower—not replace—your workforce. Smart automation and AI-driven software are revolutionizing decision-making, optimizing processes, and improving efficiency. But how do you implement these tools with confidence and ensure they complement human expertise rather than override it? Join industry expert Andrew Skoog as he explores how manufacturers can leverage automation to enhance operations, streamline workflows, and make smarter, data-dri

databricks

SEPTEMBER 9, 2024

Imagine giving your business an intelligent bot to talk to customers. Chatbots are commonly used to talk to customers and provide them with.

KDnuggets

SEPTEMBER 10, 2024

Learn about machine learning APIs for datasets, models, web applications, free GPUs, and text, audio, and image generation.

Snowflake

SEPTEMBER 13, 2024

Snowflake has always been committed to helping customers protect their accounts and data. To further our commitment to protect against cybersecurity threats and to champion the advancement of industry standards for security, Snowflake recently signed the Cybersecurity and Infrastructure Security Agency (CISA) Secure By Design Pledge. In line with CISA’s Secure By Design principles, we recently announced a number of security enhancements in the platform — most notably the general availability of

Robinhood

SEPTEMBER 12, 2024

The Robinhood Environmental Social and Governance (“ESG”) program is central to our company mission of democratizing finance for all. Today we published our fourth ESG report to feature last year’s work and focus on today’s commitments to drive our mission and make a positive impact for our customers and the world around us. This year’s report describes our progress with ESG priorities for fiscal year 2023: Robinhood is dedicated to managing our environmental impact, including carbon emissions.

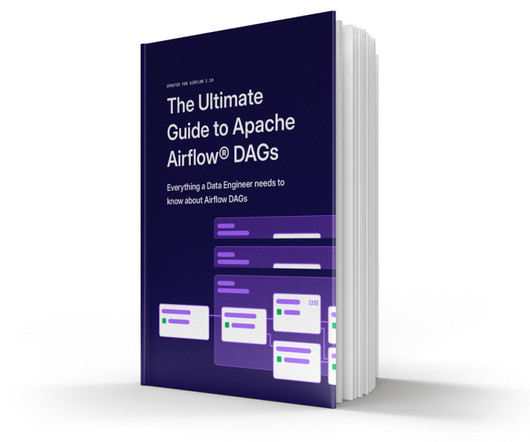

Advertisement

With Airflow being the open-source standard for workflow orchestration, knowing how to write Airflow DAGs has become an essential skill for every data engineer. This eBook provides a comprehensive overview of DAG writing features with plenty of example code. You’ll learn how to: Understand the building blocks of DAGs, combine them in complex pipelines, and schedule your DAG to run exactly when you want it to; Write DAGs that adapt to your data at runtime and set up alerts and notifications; Scale you

databricks

SEPTEMBER 9, 2024

Personalization and scale have historically been mutually exclusive. For all the talk of one-to-one marketing and hyper-personalization, the reality has been that.

KDnuggets

SEPTEMBER 13, 2024

Learn how to use the OpenAI o1-preview & o1-mini for decision-making, coding, and building an end-to-end machine learning project from scratch.

Netflix Tech

SEPTEMBER 10, 2024

By Karthik Yagna, Baskar Odayarkoil, and Alex Ellis. Pushy is Netflix’s WebSocket server that maintains persistent WebSocket connections with devices running the Netflix application. This allows data to be sent to the device from backend services on demand, without the need for continually polling requests from the device. Over the last few years, Pushy has seen tremendous growth, evolving from its role as a best-effort message delivery service to be an integral part of the Netflix ecosystem.

Monte Carlo

SEPTEMBER 12, 2024

You know what they say about rules: they’re meant to be broken. Or, when it comes to data quality, it’s more like they’re bound to be broken. Data breaks, that much is certain. The challenge is knowing when, where, and why it happens. For most data analysts, combating that means writing data rules – lots of data rules – to ensure your data products are accurate and reliable.

Speaker: Tamara Fingerlin, Developer Advocate

In this new webinar, Tamara Fingerlin, Developer Advocate, will walk you through many Airflow best practices and advanced features that can help you make your pipelines more manageable, adaptive, and robust. She'll focus on how to write best-in-class Airflow DAGs using the latest Airflow features like dynamic task mapping and data-driven scheduling!

databricks

SEPTEMBER 10, 2024

We are excited to announce the addition of three new integrations in Databricks Partner Connect—a centralized hub that allows you to integrate partner.

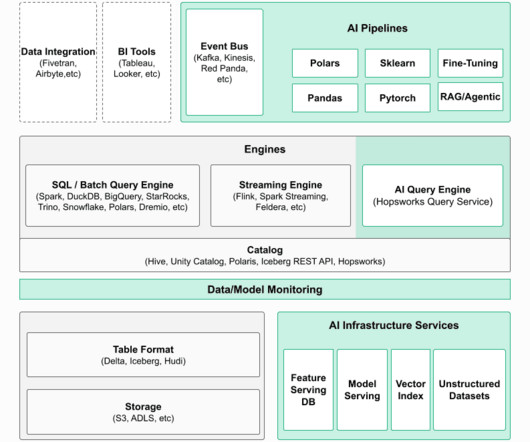

KDnuggets

SEPTEMBER 12, 2024

For the Lakehouse to become a unified data layer for both analytics and AI, it needs to be extended with new capabilities.

Towards Data Science

SEPTEMBER 11, 2024

One answer and many best practices for how larger organizations can operationalize data quality programs for modern data platforms. An answer to “who does what” for enterprise data quality. Image courtesy of the author. I’ve spoken with dozens of enterprise data professionals at the world’s largest corporations, and one of the most common data quality questions is, “who does what?”

Tweag

SEPTEMBER 11, 2024

We’ve all been there: wasting a couple of days on a silly bug. Good news for you: formal methods have never been easier to leverage. In this post, I will discuss the contributions I made during my internship to Liquid Haskell (LH), a tool that makes proving that your Haskell code is correct a piece of cake. LH lets you write contracts for your functions inside your Haskell code.

Speaker: Ben Epstein, Stealth Founder & CTO | Tony Karrer, Founder & CTO, Aggregage

When tasked with building a fundamentally new product line with deeper insights than previously achievable for a high-value client, Ben Epstein and his team faced a significant challenge: how to harness LLMs to produce consistent, high-accuracy outputs at scale. In this new session, Ben will share how he and his team engineered a system (based on proven software engineering approaches) that employs reproducible test variations (via temperature 0 and fixed seeds), and enables non-LLM evaluation m

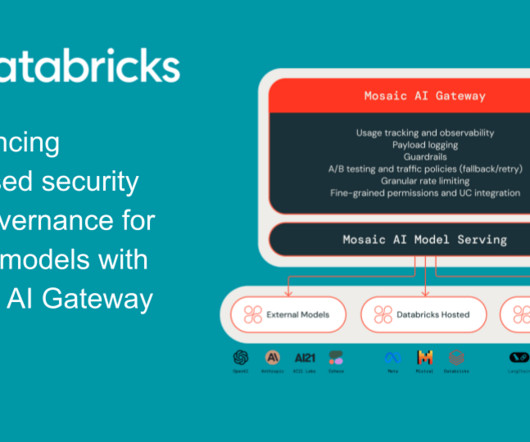

databricks

SEPTEMBER 9, 2024

We are excited to introduce several powerful new capabilities to Mosaic AI Gateway, designed to help our customers accelerate their AI initiatives with.

KDnuggets

SEPTEMBER 11, 2024

Access a pre-built Python environment with free GPUs, persistent storage, and large RAM. These Cloud IDEs include AI code assistants and numerous plugins for a fast and efficient development experience.

Confluent

SEPTEMBER 10, 2024

Engineers can put generative AI to work to improve the quality of their data, allowing them to build more accurate and trustworthy AI-powered applications.

DataKitchen

SEPTEMBER 9, 2024

From Cattle to Clarity: Visualizing Thousands of Data Pipelines with Violin Charts. Most data teams work with a dozen or a hundred pipelines in production. What do you do when you have thousands of data pipelines in production? How do you understand what is happening to those pipelines? Is there a way that you can visualize what is happening in production quickly and easily?

Advertisement

Many software teams have migrated their testing and production workloads to the cloud, yet development environments often remain tied to outdated local setups, limiting efficiency and growth. This is where Coder comes in. In our 101 Coder webinar, you’ll explore how cloud-based development environments can unlock new levels of productivity. Discover how to transition from local setups to a secure, cloud-powered ecosystem with ease.
