12 Days of Apache Kafka

Confluent

DECEMBER 28, 2020

Before you say it: Yes, we are right now three days past Christmas, but technically the 12 days of Christmas refer to the days between Christmas and Epiphany, which is—I […].

Start Data Engineering

JANUARY 1, 2021

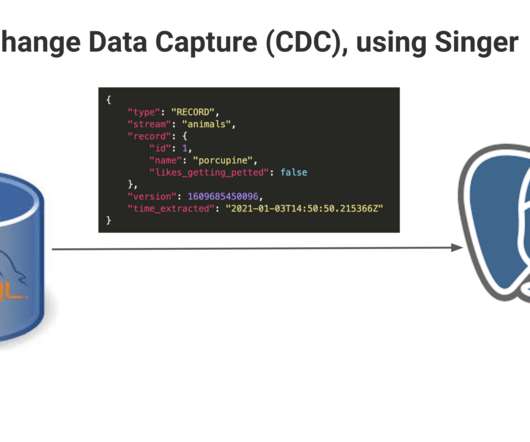

Change data capture is a software design pattern used to track every change (update, insert, delete) to the data in a database. In most databases, these changes are added to an append-only log (the binlog in MySQL, the write-ahead log in PostgreSQL).
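To make the pattern concrete, here is a minimal sketch of replaying an append-only change log against a replica. It is a pure in-memory illustration, not the article's setup and not how MySQL or PostgreSQL store their logs: the `ChangeEvent` type, the `apply_changes` function, and the sample rows are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One entry in a hypothetical append-only change log."""
    op: str                                # "insert", "update", or "delete"
    key: int                               # primary key of the affected row
    row: Optional[Dict[str, Any]] = None   # new row image; None for deletes


def apply_changes(
    log: List[ChangeEvent],
    replica: Dict[int, Dict[str, Any]],
) -> Dict[int, Dict[str, Any]]:
    """Replay the log in order so the replica converges on the source state."""
    for event in log:
        if event.op in ("insert", "update"):
            # Inserts and updates both carry the full new row image here.
            replica[event.key] = dict(event.row or {})
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return replica


# Assumed sample data: every change is appended, nothing is overwritten.
log = [
    ChangeEvent("insert", 1, {"name": "alice"}),
    ChangeEvent("insert", 2, {"name": "bob"}),
    ChangeEvent("update", 1, {"name": "alicia"}),
    ChangeEvent("delete", 2),
]

replica = apply_changes(log, {})
print(replica)
```

Because the log is ordered and append-only, a downstream system that replays it from the start (or from a saved position) ends up with the same rows as the source. Real CDC tooling adds concerns this sketch ignores, such as schema changes, transactions, and offset tracking.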

Data Engineering Podcast

DECEMBER 28, 2020

Summary: One of the core responsibilities of data engineers is to manage the security of the information that they process. The team at Satori has a background in cybersecurity, and they are using the lessons they learned in that field to address the challenge of access control and auditing for data governance. In this episode, co-founder and CTO Yoav Cohen explains how the Satori platform provides a proxy layer for your data, the challenges of managing security across disparate storage system

DataKitchen

DECEMBER 30, 2020

While 2020 has been a collectively difficult year, we want to take a moment to thank all of our employees for the hard work they put into continually developing our DataKitchen DataOps Platform for our customers. We also want to thank all of the data industry groups that have recognized our DataKitchen DataOps Platform and Transformation Advisory Services throughout the year.

Speaker: Tamara Fingerlin, Developer Advocate

Apache Airflow® 3.0, the most anticipated Airflow release yet, officially launched this April. As the de facto standard for data orchestration, Airflow is trusted by over 77,000 organizations to power everything from advanced analytics to production AI and MLOps. With the 3.0 release, the top-requested features from the community were delivered, including a revamped UI for easier navigation, stronger security, and greater flexibility to run tasks anywhere at any time.

Teradata

DECEMBER 29, 2020

When considering your organization's move to the cloud, it's imperative to understand what the cloud can and cannot do, and how to best leverage its benefits.

DareData

JANUARY 1, 2021

DareData is a data and artificial intelligence consulting company working mostly for data-driven clients, whether enterprises or startups. Our mission is to democratize data and AI tools, as we feel there is currently an imbalance in accessibility. We work to provide autonomous paths for every client to access and control data engineering and data science tools by building infrastructure, AI analytics, and models.
