How to Transition from ETL Developer to Data Engineer?

ProjectPro

JUNE 6, 2025

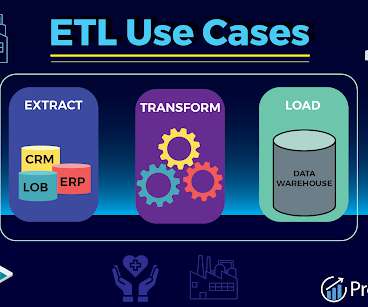

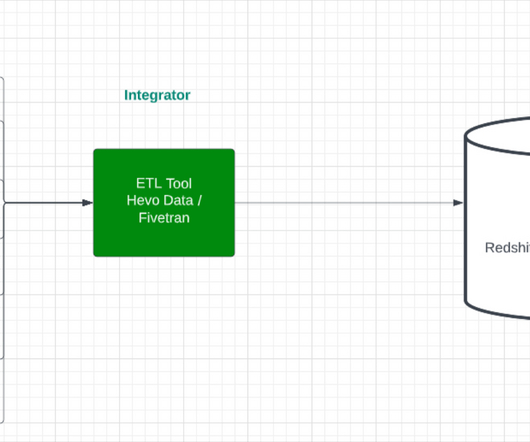

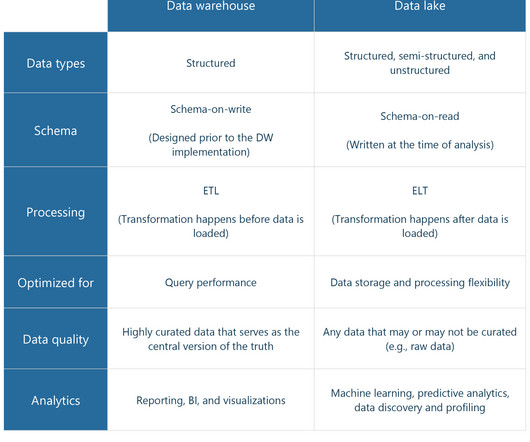

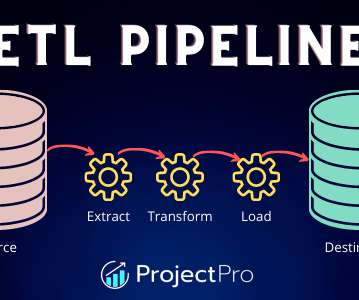

A traditional ETL developer typically comes from a software engineering background and has deep knowledge of ETL tools such as Informatica, IBM DataStage, and SSIS. Such a developer is an expert SQL user and is well versed in both database management and data modeling techniques.

What Does an ETL Developer Do?
