Best Practices for Analyzing Kafka Event Streams

Rockset

MARCH 5, 2020

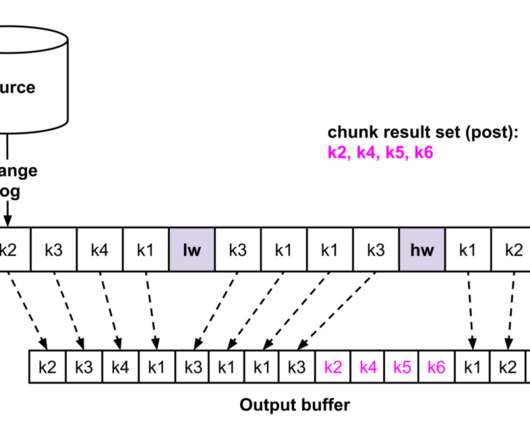

Apache Kafka has seen broad adoption as the streaming platform of choice for building applications that react to streams of data in real time. In many organizations, Kafka is the foundational platform for real-time event analytics, serving as the central location where event data is collected and made available as it arrives.
