Data Architect: Role Description, Skills, Certifications and When to Hire

AltexSoft

FEBRUARY 11, 2023

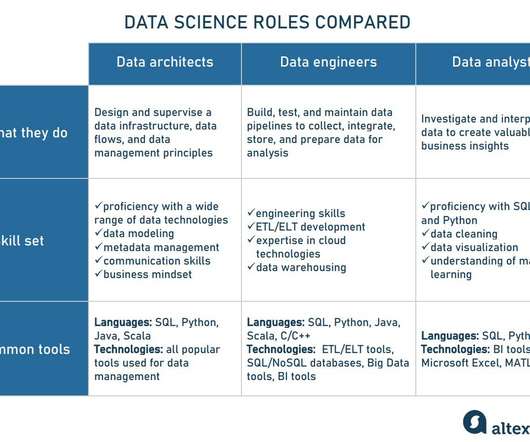

Data architecture serves as a foundation for the entire data management strategy and consists of multiple components, including data pipelines, on-premises and cloud storage facilities (data lakes, data warehouses, data hubs), and data streaming and Big Data analytics solutions (Hadoop, Spark, Kafka, etc.).
