Apache Ozone – A Multi-Protocol Aware Storage System

Cloudera

NOVEMBER 7, 2023

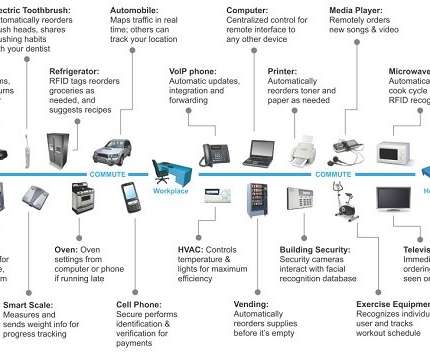

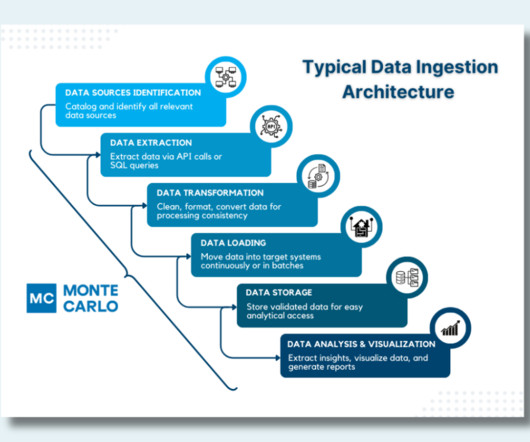

The vast tapestry of data types spanning structured, semi-structured, and unstructured data means data professionals need to be proficient with various data formats such as ORC, Parquet, Avro, CSV, and Apache Iceberg tables, to cover the ever growing spectrum of datasets – be they images, videos, sensor data, or other type of media content.

Let's personalize your content