Revolutionizing Real-Time Streaming Processing: 4 Trillion Events Daily at LinkedIn

LinkedIn Engineering

OCTOBER 19, 2023

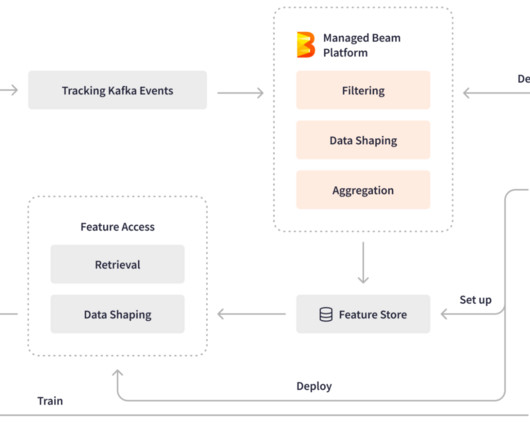

In 2010, LinkedIn introduced Apache Kafka, a pivotal big data ingestion backbone for its real-time infrastructure. To move away from batch-oriented processing and respond to Kafka events within minutes or seconds, the company built an in-house distributed event streaming framework, Apache Samza.
