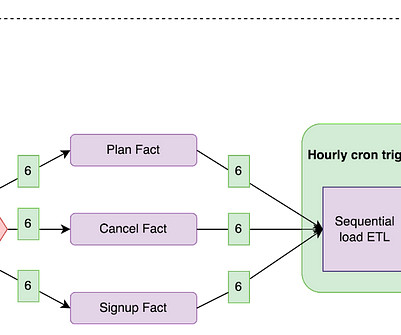

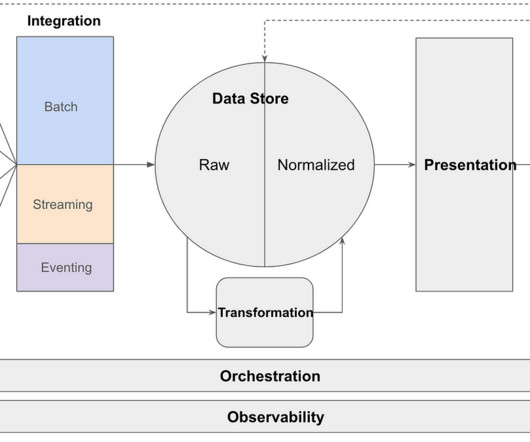

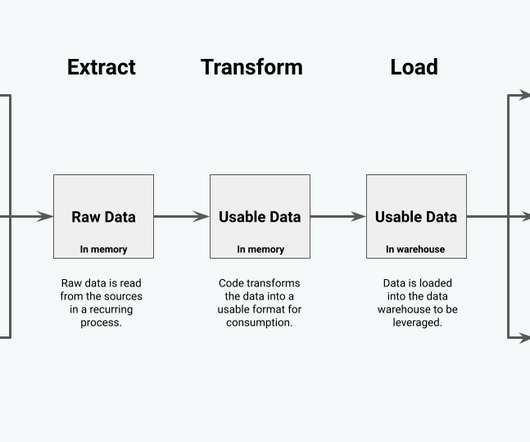

5 Helpful Extract & Load Practices for High-Quality Raw Data

Meltano

DECEMBER 7, 2022

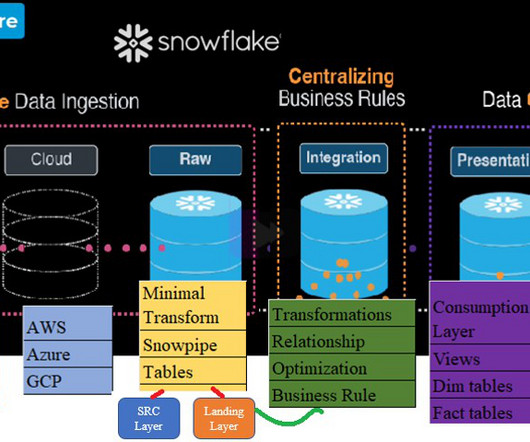

Setting the Stage: We need E&L practices because “copying raw data” is more complex than it sounds. The term “raw data” sounds clear, but every time the data changes systems, you need to modify it to adhere to the rules of the new system.
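To make that point concrete, here is a minimal sketch of how a "raw" record can change shape simply by moving between systems. It assumes a hypothetical source that returns Python `Decimal` and `datetime` values and a hypothetical target that only accepts JSON types; the helper name `to_json_safe` and the sample row are illustrative and not taken from any specific tool.

```python
import json
from datetime import datetime, timezone
from decimal import Decimal


def to_json_safe(value):
    """Coerce a source value into a type a JSON-only target accepts (hypothetical helper)."""
    if isinstance(value, datetime):
        # JSON has no timestamp type, so the value becomes an ISO 8601 string.
        return value.isoformat()
    if isinstance(value, Decimal):
        # Keep exact precision by storing the decimal as a string, not a float.
        return str(value)
    return value


# Illustrative row as a source system might return it.
source_row = {
    "id": 1,
    "amount": Decimal("19.90"),
    "created_at": datetime(2022, 12, 7, 9, 30, tzinfo=timezone.utc),
}

# The "raw" copy already differs from the source: two fields changed type.
loaded_row = {key: to_json_safe(val) for key, val in source_row.items()}
payload = json.dumps(loaded_row)
```

Even in this small case, the loaded copy is no longer identical to the source row, and someone had to decide how decimals and timestamps should be represented in the target.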
